So your site has too much JavaScript. No problem, say the experts, just lazy load your application! Well, I am here to tell you that lazy-loading is harder than you think!

Let's start by building a simple app to demonstrate the problem. We will have three components:

- A component for state

- A component for the interaction

- And a component for displaying user data.

For the simple example we are about to show you, this breakdown is overkill, but we designed the application this way to better demonstrate the problems of real-world applications.

Note: We use React in these examples because of its concise syntax and popularity. But the issues described here are in no way specific to React. All Hydration-based frameworks face these issues. So please don't take this as a negative reflection on React specifically.

File: main.tsx

```tsx
import { useState } from 'react';
import { createRoot } from 'react-dom/client';

export function MyApp() {
  const [count, setCount] = useState(0);
  return (
    <>
      <Display count={count} />
      {count > 10 ? <OverSize /> : null}
      <Incrementor count={count} setCount={setCount} />
    </>
  );
}

function Display({ count }: { count: number }) {
  return <div>{count}</div>;
}

function OverSize() {
  return <span>Count is too big!</span>;
}

function Incrementor({ count, setCount }: { count: number; setCount: (n: number) => void }) {
  return <button onClick={() => setCount(count + 1)}>+1</button>;
}

createRoot(document.getElementById('my-app')!).render(<MyApp />);
```

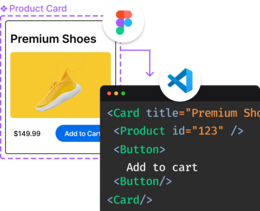

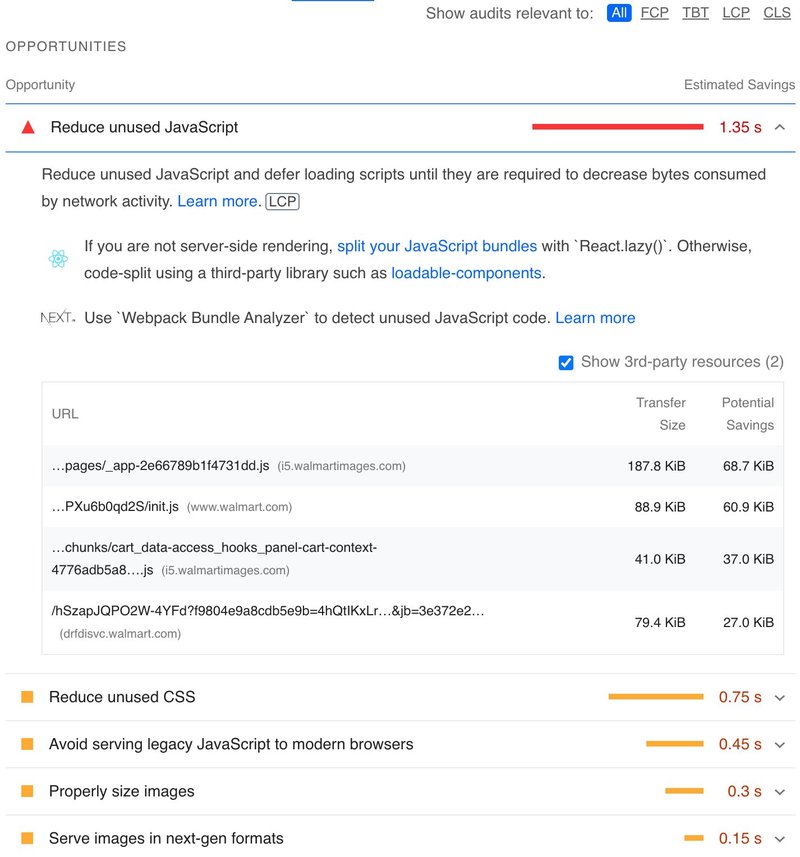

For brevity, the application is small. But let's imagine that there are many sub-components behind each of our components, and those sub-components contain a lot of JavaScript. So our application is large and therefore slow to start. We consulted PageSpeed, and its primary recommendation was to reduce the amount of JavaScript. Following that advice, we lazy-load each of our components:

File: main.tsx

```tsx
import { useState, lazy, Suspense } from 'react';
import { createRoot } from 'react-dom/client';

// React.lazy() expects a module whose *default* export is the component,
// so each named export is re-wrapped as { default: ... }.
const Display = lazy(async () => ({
  default: (await import('./display')).Display,
}));

const Incrementor = lazy(async () => ({
  default: (await import('./incrementor')).Incrementor,
}));

const OverSize = lazy(async () => ({
  default: (await import('./oversize')).OverSize,
}));

export function MyApp() {
  const [count, setCount] = useState(0);
  return (
    <>
      <Suspense><Display count={count} /></Suspense>
      {count > 10 ? <Suspense><OverSize /></Suspense> : null}
      <Suspense><Incrementor count={count} setCount={setCount} /></Suspense>
    </>
  );
}

createRoot(document.getElementById('my-app')!).render(<MyApp />);
```

File: display.tsx

```tsx
export function Display({ count }: { count: number }) {
  return <div>{count}</div>;
}
```

File: oversize.tsx

```tsx
export function OverSize() {
  return <span>Count is too big!</span>;
}
```

File: incrementor.tsx

```tsx
export function Incrementor({ count, setCount }: { count: number; setCount: (n: number) => void }) {
  return <button onClick={() => setCount(count + 1)}>+1</button>;
}
```

The result is straightforward, even though it is quite wordy. We had to create multiple files and give them names. And as we all know, naming is one of the hardest problems in computer science. And this is where the problems start. Our hard work of breaking up the application into separate files will be undermined by Hydration, coalescing, and prefetching problems.

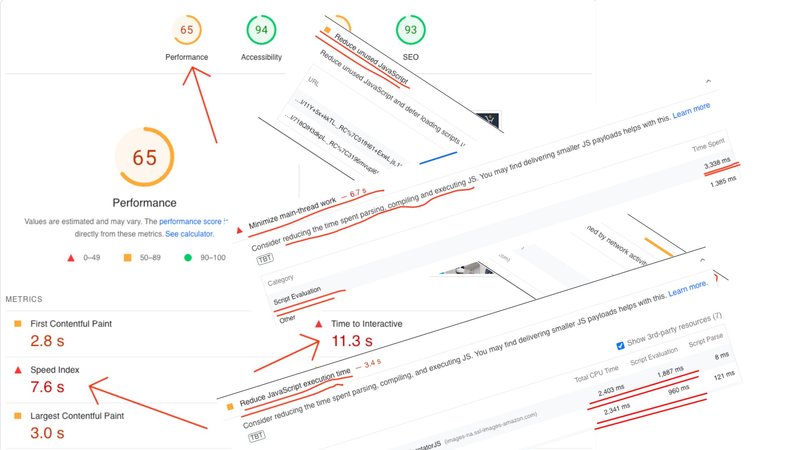

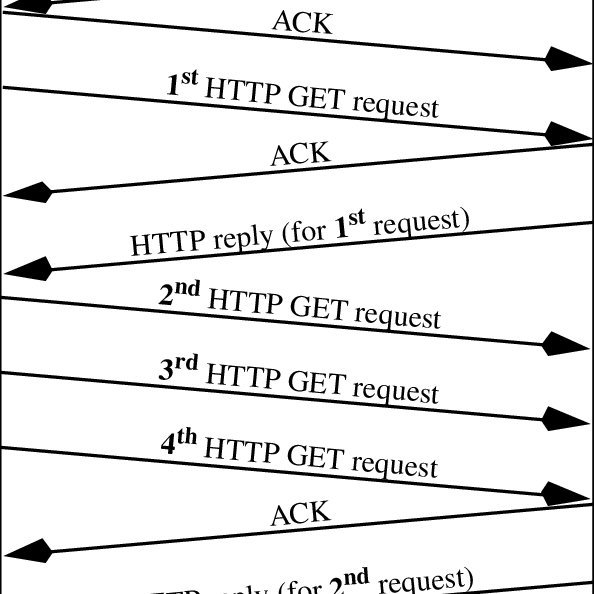

On application startup, Hydration requires the framework to descend into each component to rebuild the component state (useState) and to check whether the component has any listeners that need to be attached for interactivity. Descending into each component completely undoes the lazy-loading work we put in: both Display and Incrementor will be fetched eagerly as part of application startup. Here, lazy-loading arguably made the situation worse, since the browser now has to make multiple HTTP round trips to the server.

The only component where lazy-loading helped is OverSize. It is the only component not needed as part of Hydration, so lazy-loading it made sense. The problem is that, as a developer, you don't know which set of components your application will need, because that often depends on application state and may change as the application gains new features. So while in the example above it is easy to reason about which components will be needed eagerly, in a large-scale application the problem quickly goes beyond what developers can keep track of and requires some sort of automation, which has to be custom-built.

From the example above, it should be apparent that lazy-loading is only useful for components that are not currently part of the render tree. That is because components in the render tree require Hydration to become interactive, and Hydration requires eagerly walking the component tree. So lazy-loading is great for route changes (new components that are not part of the current render tree) but not so much for lowering the amount of JS required for Hydration.
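To make the Hydration argument concrete, here is a toy model in plain TypeScript (no React). `Component`, `leaf`, and `hydrate` are hypothetical names, and `load` merely records which component's code would have been fetched; a real framework does much more work per node. The sketch shows that walking the render tree for `count = 0` touches Display and Incrementor, while OverSize, which is not rendered, stays lazy.

```typescript
// Toy model: every component in the render tree must have its code loaded
// before the framework can rebuild its state and attach its listeners.
type Component = {
  name: string;
  load: () => void; // stands in for a lazy import()
  children: (state: { count: number }) => Component[];
};

const loaded: string[] = [];

// Hypothetical helper for components without children.
const leaf = (name: string): Component => ({
  name,
  load: () => { loaded.push(name); },
  children: () => [],
});

const Display = leaf('Display');
const OverSize = leaf('OverSize');
const Incrementor = leaf('Incrementor');

const MyApp: Component = {
  name: 'MyApp',
  load: () => { loaded.push('MyApp'); },
  // Mirrors the JSX: OverSize is rendered only when count > 10.
  children: ({ count }) =>
    count > 10 ? [Display, OverSize, Incrementor] : [Display, Incrementor],
};

// Hydration walks the whole current render tree eagerly, loading each node.
function hydrate(node: Component, state: { count: number }): void {
  node.load();
  for (const child of node.children(state)) hydrate(child, state);
}

hydrate(MyApp, { count: 0 }); // loaded is now ['MyApp', 'Display', 'Incrementor']
```

Only components outside the current render tree (here OverSize) escape the eager walk, which is why lazy-loading pays off mainly on route changes.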

It may very well be that the Display and Incrementor components need to be loaded together. In that case, they should be placed in the same lazy-loaded chunk. Not all bundlers know how to merge display.tsx and incrementor.tsx into the same file, because they are accessed through separate dynamic imports. And even if a bundler knows how to merge dynamic imports, it is not immediately clear to it which dynamic imports should be merged and which should not. This is a problem because the developer now needs to make bundling choices about which chunk each symbol should be placed in. Such information may not be apparent when the application is written, and it may change as the application adds new functionality.

A corollary to the above is that bundling becomes an implementation detail of the application rather than a configuration detail of the bundler. Our bundler systems are not set up for this.
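The following sketch shows what coalescing looks like when the developer owns the decision. It assumes a hypothetical, hand-maintained table (`symbolToChunk`) saying which chunk each lazily loaded symbol lives in, plus a fake `loadChunk` that counts simulated HTTP round trips instead of hitting the network. Grouping Display and Incrementor into one chunk means loading both costs a single request; the catch is that someone has to keep that table correct as the application changes.

```typescript
// Hypothetical, hand-maintained decision: which chunk each lazy symbol lives in.
const symbolToChunk: Record<string, string> = {
  Display: 'counter',
  Incrementor: 'counter',
  OverSize: 'oversize',
};

// Fake chunk contents; strings stand in for the exported components.
const chunkContents: Record<string, Record<string, string>> = {
  counter: { Display: 'Display()', Incrementor: 'Incrementor()' },
  oversize: { OverSize: 'OverSize()' },
};

let networkRequests = 0;
const chunkCache = new Map<string, Promise<Record<string, string>>>();

// Each chunk is requested at most once; symbols in the same chunk share the promise.
function loadChunk(chunk: string): Promise<Record<string, string>> {
  let pending = chunkCache.get(chunk);
  if (!pending) {
    networkRequests++; // one simulated HTTP round trip
    pending = Promise.resolve(chunkContents[chunk]);
    chunkCache.set(chunk, pending);
  }
  return pending;
}

async function loadSymbol(symbol: string): Promise<string> {
  const mod = await loadChunk(symbolToChunk[symbol]);
  return mod[symbol];
}

// Loading both components triggers only one simulated request.
const both = Promise.all([loadSymbol('Display'), loadSymbol('Incrementor')]);
```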

We want to ensure that the user has a smooth experience. That means we don't want to start fetching a JS chunk on user interaction; instead, the chunk should be prefetched eagerly. But prefetching is outside the scope of both frameworks and bundlers, so it falls to the developer. This is tricky because the developer now needs to determine all of the potential entry-point chunks, in which order they should be prefetched, and when in the application lifecycle is a good time to prefetch them. For example, is chunk A needed now or only on route change? How does the developer communicate that information to the prefetcher? What mechanisms should be used for prefetching? And many more questions now fall on the developer.
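The questions above can be made concrete with a small, hypothetical prefetch plan. The `Priority` phases, the chunk names, and `prefetchPlan` are illustrative assumptions, not any bundler or framework API; the point is that every entry encodes a decision the developer had to make by hand.

```typescript
// Hypothetical lifecycle phases, from most to least urgent.
type Priority = 'now' | 'idle' | 'route-change';

interface PrefetchEntry {
  chunk: string;
  priority: Priority;
}

const urgency: Record<Priority, number> = { now: 0, idle: 1, 'route-change': 2 };

// Returns the chunks to prefetch by the given lifecycle phase, most urgent first.
function prefetchPlan(entries: PrefetchEntry[], phase: Priority): string[] {
  return entries
    .filter((e) => urgency[e.priority] <= urgency[phase])
    .sort((a, b) => urgency[a.priority] - urgency[b.priority])
    .map((e) => e.chunk);
}

// Every line here is a manual decision: which chunk, and when.
const manualPlan: PrefetchEntry[] = [
  { chunk: 'settings-route', priority: 'route-change' },
  { chunk: 'incrementor-on-click', priority: 'idle' },
  { chunk: 'counter', priority: 'now' },
];

const idlePlan = prefetchPlan(manualPlan, 'idle'); // ['counter', 'incrementor-on-click']
```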

As written, our code can only lazy-load components. Components include event handlers, but because components are often loaded eagerly due to Hydration, their behavior is often loaded before it is needed. PageSpeed reports this eagerly loaded behavior under “Reduce unused JavaScript”: code that was downloaded but not executed as part of Hydration, which is mostly event handlers. So let's try to lazy-load the event handler.

We start by breaking up the incrementor.tsx into even smaller files by pulling out the event handler like so:

File: incrementor.tsx

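```tsx
export function Incrementor({ count, setCount }: { count: number; setCount: (n: number) => void }) {
  return (
    <button
      onClick={async () => {
        // The handler body lives in its own chunk and is fetched on the first click.
        (await import('./incrementor-on-click')).default(setCount, count);
      }}
    >
      +1
    </button>
  );
}
```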

File: incrementor-on-click.tsx

```tsx
export default function incrementorOnClick(setCount: (n: number) => void, count: number) {
  setCount(count + 1);
}
```

But here we run into more issues: the Hydration trampoline closure, the async nature of lazy-loading, prefetching, and the sheer amount of work involved. (And we have not even gotten into the issue of flowing types across lazy-loading boundaries.)

The issue is that when Hydration collects all of the listeners, it expects a closure to invoke when the listener fires on user interaction. In our example, the listener-closure closes over setCount and count, and that code for creating the closure needs to be executed eagerly, as shown here for the onClick case.

File: incrementor.tsx

```tsx
export function Incrementor({ count, setCount }: { count: number; setCount: (n: number) => void }) {
  return (
    <button
      onClick={async () => {
        (await import('./incrementor-on-click')).default(setCount, count);
      }}
    >
      +1
    </button>
  );
}
```

Another way to look at it is from the handler file.

The incrementorOnClick function in incrementor-on-click.tsx needs to get hold of setCount and count to perform its job. Without them, incrementorOnClick can't do anything useful. So how does it get hold of them? It expects that data to be passed in when it is invoked.

This is the reason why the <button onClick={...}/> has an eager closure that captures the setCount and count on Hydration and then makes it available to the lazy-loaded function on invocation.
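Stripped of React, the trampoline looks roughly like the sketch below. `importOnClick` is a hypothetical stand-in for `import('./incrementor-on-click')` that counts how often the chunk is loaded, and `makeTrampoline` is an illustrative name for what the framework effectively does per listener. The wrapper must be created eagerly (during Hydration) to capture `count` and `setCount`, even though the handler body itself is only fetched on the first click.

```typescript
type OnClickModule = {
  default: (setCount: (n: number) => void, count: number) => void;
};

let handlerChunkLoads = 0;

// Hypothetical stand-in for the lazy chunk: the handler knows nothing about
// component state and must be given setCount and count explicitly.
function importOnClick(): Promise<OnClickModule> {
  handlerChunkLoads++;
  const mod: OnClickModule = { default: (setCount, count) => setCount(count + 1) };
  return Promise.resolve(mod);
}

// Allocated eagerly for every button: captures state, defers the real code.
function makeTrampoline(count: number, setCount: (n: number) => void) {
  return async () => {
    const mod = await importOnClick();
    mod.default(setCount, count);
  };
}

let state = 0;
const onClick = makeTrampoline(state, (n) => { state = n; });
// The closure now exists, but the handler chunk has not been loaded yet.
```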

Another way to think about it is that lazy-loading listeners does not help with startup performance, as we still need to allocate the event handlers. (It may reduce the amount of JS we download, but not in this case, as the ceremony of lazy loading contains more code than the lazy-loaded chunk itself.)

Lazy-loading is asynchronous by its nature. The issue is that the event handler oftentimes has to synchronously call preventDefault or call other APIs which are only available synchronously. This complicates the writing of code as the developer now needs to take that into account. It may not be possible to just refactor the code for lazy-loading and assume it will work, as doing so introduces lazy-loading boundaries, which may break the application.
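The following sketch uses a simplified, hypothetical model of event dispatch (`SimEvent` and `dispatch` are not the real DOM API) to show why `preventDefault` breaks across a lazy-loading boundary. As in the browser, the dispatcher does not await the listener; it checks `defaultPrevented` as soon as the listener returns, so a call made after an `await` arrives too late.

```typescript
// Simplified stand-in for a DOM event.
class SimEvent {
  defaultPrevented = false;
  preventDefault(): void {
    this.defaultPrevented = true;
  }
}

// Runs the listener and decides synchronously whether the default action happens.
function dispatch(listener: (e: SimEvent) => unknown): boolean {
  const e = new SimEvent();
  listener(e); // a returned promise is ignored, not awaited
  return !e.defaultPrevented; // true means "perform the default action"
}

// Hypothetical lazy-loaded handler module.
const lazyHandler = () =>
  Promise.resolve({ default: (e: SimEvent) => e.preventDefault() });

const syncResult = dispatch((e) => e.preventDefault());
const asyncResult = dispatch(async (e) => {
  const mod = await lazyHandler();
  mod.default(e); // runs on a later microtask, after dispatch has returned
});

console.log(syncResult, asyncResult); // false true
```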

Again, prefetching is an issue with lazy-loading on interaction, and all of the issues we discussed above apply here as well. But for interactivity, prefetching is even more important: while Hydration causes components to be loaded eagerly, interactivity code will not load until the user interacts, and by that point it may be too late (the user will experience a network delay on the first interaction). So prefetching of interactivity code is a must!
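One way to hide that delay is to start the handler's download early and reuse the same promise on the first click. This is a minimal sketch assuming a hypothetical `loadOnClick` in place of `import('./incrementor-on-click')`; browsers already cache a module once `import()` has been called, so the value lies in making the call before the user clicks. Deciding when to call `prefetchOnClick` (startup, idle time, hover) is still up to the developer.

```typescript
type Handler = (setCount: (n: number) => void, count: number) => void;

let downloads = 0;

// Hypothetical stand-in for import('./incrementor-on-click').
function loadOnClick(): Promise<{ default: Handler }> {
  downloads++;
  const handler: Handler = (setCount, count) => setCount(count + 1);
  return Promise.resolve({ default: handler });
}

let cached: Promise<{ default: Handler }> | undefined;

// Starts the download at most once and hands out the same promise afterwards.
function prefetchOnClick(): Promise<{ default: Handler }> {
  if (!cached) cached = loadOnClick();
  return cached;
}

// Called ahead of time, e.g. right after startup.
prefetchOnClick();

// The click path awaits the already-started download instead of beginning one.
async function handleClick(count: number, setCount: (n: number) => void): Promise<void> {
  const mod = await prefetchOnClick();
  mod.default(setCount, count);
}
```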

The amount of work to create lazy interactions is astounding!

- create a new file

- insert dynamic import()

- ensure that the code is prefetched

- repeat for each component/function

Most of the time, the developer will not do all of this extra work for a relatively small win. The issue with event handlers is not that any one event handler adds a lot of code. It is that applications usually have a lot of event handlers, and so it adds up. It’s like death by a thousand cuts.

Application startup performance is tied to the amount of JS that the browser needs to download and execute. Lazy-loading code is often suggested as the solution to improve startup performance. While this may sound straightforward at first, it is much harder than you think.

Lazy-loading adds extra ceremonies to your code and workflow. It requires the developer to think about how the application should be broken up. It creates new problems around prefetching of code, which is not solved out of the box and requires more engineering.

And finally, many of the benefits of lazy-loading are destroyed by the eager nature of Hydration, so most of them can only be achieved with components that are not currently in the render tree (components that need to be loaded on route change). It's easy to say that lazy-loading will solve your performance problems, but the actual engineering effort required of the developer is a lot greater than it may seem. For this reason, it rarely gets done. And when an application grows too large and lazy-loading becomes necessary, the refactoring process may be extremely difficult.

Qwik is a framework that eliminates the need for developers to manually handle lazy-loading, bundling, and prefetching. It works seamlessly out of the box. Furthermore, Qwik does not undo the advantages of lazy-loading for components in the render tree, because it does not perform Hydration.
