The best AI demos don’t just answer questions; they take actions.

When you see AI successfully doing complex things, like building out entire dashboards or iteratively debugging code with linters (as the assistants in Cursor and Windsurf do), it feels a bit magical. You can almost imagine one giant brain pulling the strings, sci-fi style.

But peel back the curtain, and you'll find the reality is less about a single super-intelligence and more about orchestrating a bunch of smaller, scoped AI agents.

Getting good results hinges on systems design: task decomposition, specialist routing, context management, and reliable communication. If that sounds like architecting microservices while your PM breathlessly asks, “Can’t we just use a monolith?”, then congrats—you’ve already got the prereqs for the AI revolution.

So, let’s explore the concepts and patterns behind agentic systems, looking at the skills you’ve picked up as a web developer and how they’re key for building the next wave of AI tools.

Okay, we know systems are the key. But what actually turns a bunch of individual agents into a system that works effectively together?

It's more than just running them side-by-side. It boils down to how they're structured, how they talk to each other, and how they coordinate on a shared goal.

And if you build for the web, these challenges probably sound familiar. Designing clear interfaces, managing shared state, ensuring reliable communication between components—all these core ideas overlap with agentic AI.

Let's break down the main ingredients.

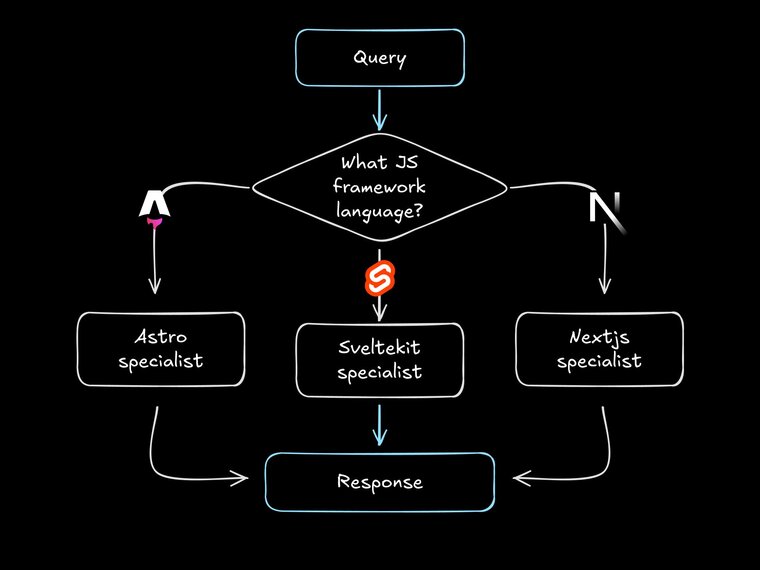

Think microservices, but for AI tasks. Instead of trying to build one giant, generalist agent that inevitably gets confused or masters none of its many jobs, effective systems break the work down. They rely on a team of smaller, specialized agents.

Each agent is optimized for a specific sub-task—maybe one is great at writing SQL, another at summarizing text, and a third handles API calls to a specific service. By focusing on doing one thing well, each agent becomes simpler, more reliable, and easier to test and maintain.

In the above diagram, for instance, each specialist can be debugged and trained separately, focusing only on its domain knowledge, while the system as a whole gets better at answering general JS framework queries.
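Here's a minimal TypeScript sketch of that idea, assuming every specialist exposes the same tiny interface. The agent names, the `canHandle`/`run` methods, and the keyword matching are all hypothetical. A real system would back `run` with an LLM call and might route with a classifier instead of keywords.

```typescript
// Hypothetical contract every specialist agent implements.
interface SpecialistAgent {
  name: string;
  canHandle(query: string): boolean;
  run(query: string): string; // a real agent would call an LLM here (and be async)
}

// Build a specialist that claims any query mentioning one of its keywords.
function keywordSpecialist(name: string, keywords: string[]): SpecialistAgent {
  return {
    name,
    canHandle: (query) => keywords.some((k) => query.toLowerCase().includes(k)),
    run: (query) => `[${name}] answering: ${query}`, // stubbed response
  };
}

const specialists: SpecialistAgent[] = [
  keywordSpecialist("ReactAgent", ["react", "jsx", "hook"]),
  keywordSpecialist("VueAgent", ["vue", "pinia"]),
  keywordSpecialist("SvelteAgent", ["svelte"]),
];

// Send the query to the first specialist that claims it; fall back to a generalist.
function route(query: string, agents: SpecialistAgent[]): string {
  const agent = agents.find((a) => a.canHandle(query));
  return agent ? agent.run(query) : `[GeneralistAgent] answering: ${query}`;
}

console.log(route("Why does my React hook run twice?", specialists));
// logs "[ReactAgent] answering: Why does my React hook run twice?"
```

Because each specialist hides behind the same interface, you can test `ReactAgent` on its own, and replacing the keyword router later doesn't touch the specialists at all.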

The cool part about this? We don’t have to wait for OpenAI to code up God. We can think about what existing LLMs and agents are capable of and combine them for higher-level functionality.

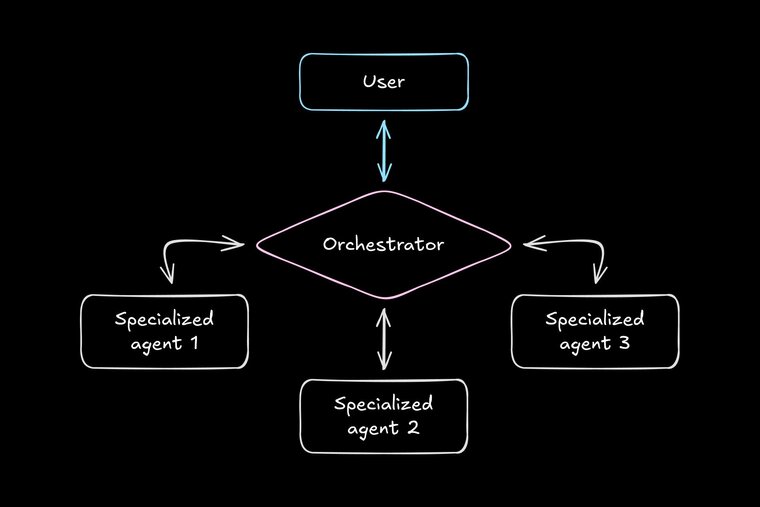

Okay, you have a team of specialist agents. How do they actually work together on a goal? That's the job of the orchestration layer. Think of it as the project manager, the API gateway, or the main controller for your agent crew.

This layer—which might be a sophisticated agent itself or just a set of coded rules—handles the high-level strategy and coordination. It breaks down the main goal into smaller steps (goal decomposition), figures out which specialist gets which step (task assignment), keeps an eye on progress, and adjusts the plan if things go sideways (re-planning & adaptation).

Nailing this orchestration logic is often the trickiest part of building an effective agentic system—it's the “secret sauce” that makes everything click.

Agents working in isolation quickly become useless. If they can't share information or understand the bigger picture, they can’t collaborate effectively. How does the TestingAgent know what the CodingAgent just built? How does the DeploymentAgent know the tests passed?

The solution is shared context and memory. This is the system's collective brain, implemented using a database, a vector store, a message queue, or even just structured data passed between agents—whatever lets relevant agents access the information they need, when they need it.

Choosing how to implement this shared context involves the same kind of state management trade-offs (consistency, performance, complexity) you face when picking between Redux, Zustand, Context API, or server-side state in React web dev.
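To make that concrete, here's a sketch of shared context as a tiny observable store, similar in spirit to the web state libraries above. `SharedContext` and `BuildState` are hypothetical names and the store is in-memory only. It shows how the DeploymentAgent could find out the tests passed without the TestingAgent calling it directly.

```typescript
// Minimal in-memory shared context: get, set, and subscribe, like a tiny state store.
// A production system might back this with a database, vector store, or queue.
type Listener<T> = (state: T, changedKey: keyof T) => void;

class SharedContext<T extends object> {
  private state: T;
  private listeners: Listener<T>[] = [];

  constructor(initial: T) {
    this.state = initial;
  }

  get<K extends keyof T>(key: K): T[K] {
    return this.state[key];
  }

  // Write a value, then notify every subscribed agent about the change.
  set<K extends keyof T>(key: K, value: T[K]): void {
    const next = { ...this.state };
    next[key] = value;
    this.state = next;
    this.listeners.forEach((listener) => listener(this.state, key));
  }

  // Returns an unsubscribe function, as most web state libraries do.
  subscribe(listener: Listener<T>): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }
}

// Hypothetical state shared by the CodingAgent, TestingAgent, and DeploymentAgent.
interface BuildState {
  latestCommit: string | null;
  testsPassed: boolean | null;
}

const ctx = new SharedContext<BuildState>({ latestCommit: null, testsPassed: null });
const deployLog: string[] = [];

// The DeploymentAgent reacts when the TestingAgent reports a green run.
ctx.subscribe((state, key) => {
  if (key === "testsPassed" && state.testsPassed) {
    deployLog.push(`deploying ${state.latestCommit}`);
  }
});

ctx.set("latestCommit", "abc123"); // CodingAgent finished a change
ctx.set("testsPassed", true); // TestingAgent reports success
// deployLog now contains "deploying abc123"
```

Backing the same get/set/subscribe shape with Redis, Postgres, or a message queue changes the consistency and latency trade-offs, not the way agents talk to it.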

It's a familiar challenge in a new domain. We’ve all been knee-deep in a Jira ticket where the only relevant context is 17 clicks deep, beneath a GIF of a screaming capybara. Context is where agentic systems can magically work or instantly fall apart.

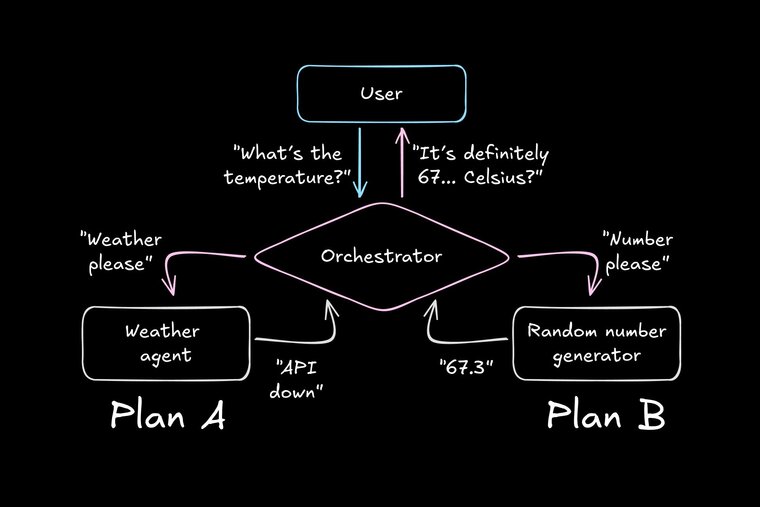

What happens when a specialist agent hits a wall—a downed API, weird data, or just plain confusion? If each agent only knows its tiny part of the world, the whole process could grind to a halt.

This is where the system's higher-level view—usually orchestrated by that "project manager" layer—becomes crucial for resilience. Think of it like having good error handling or a circuit breaker pattern for your microservices; if one agent fails, the system doesn't give up.

Instead, the orchestrator, keeping its "eye" on the main goal, can adapt: retry the task, route it to a different agent, or find a new workflow. The individual agent might just report "Nope, couldn't do it," but the system as a whole can be smarter, finding another path to get the job done.

Of course, you still have to design an actually good backup plan.
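A retry-then-fallback loop is the simplest version of this. The sketch below assumes agents are plain async functions (the `Agent` type and both example agents are hypothetical). It retries each agent a couple of times, moves on to the next one, and only gives up, with the full error history, when every path fails. It is not a full circuit breaker, which would also stop calling a failing agent for a cool-down period.

```typescript
// Hypothetical agent signature: a real agent would call an LLM or an external API.
type Agent = (task: string) => Promise<string>;

// Try each agent in order, retrying each a few times before moving to the next.
async function runWithFallback(
  task: string,
  agents: Agent[],
  maxAttempts = 2,
): Promise<string> {
  const errors: string[] = [];
  for (const agent of agents) {
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
      try {
        return await agent(task);
      } catch (err) {
        errors.push(err instanceof Error ? err.message : String(err));
      }
    }
  }
  // Every path failed: surface the full history so the orchestrator can re-plan.
  throw new Error(`All agents failed for "${task}": ${errors.join("; ")}`);
}

// Usage: the primary agent's API is down, so the backup agent handles the task.
const downedApiAgent: Agent = async () => {
  throw new Error("API is down");
};
const backupAgent: Agent = async (task) => `backup handled: ${task}`;

runWithFallback("fetch order #42", [downedApiAgent, backupAgent]).then(console.log);
// logs "backup handled: fetch order #42"
```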

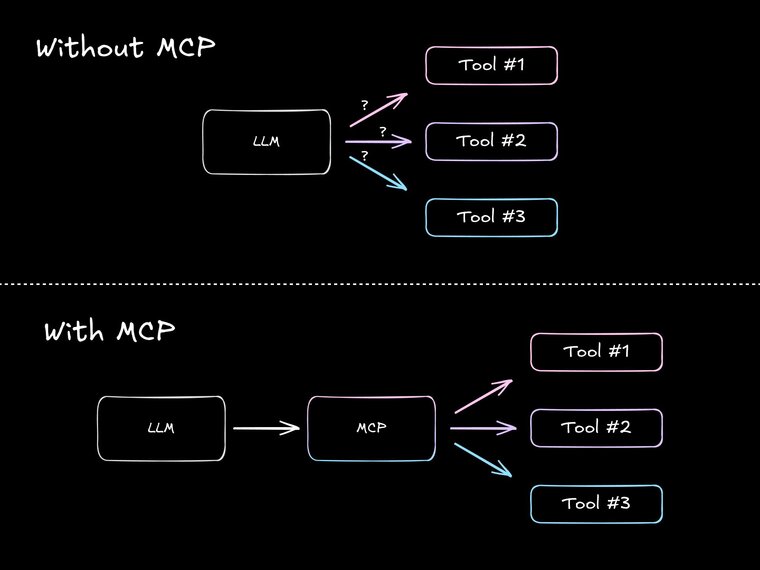

Imagine building a complex web app where every microservice just makes up its own way of talking. It would be chaos; you'd spend all your time building fragile, one-off adapters instead of features.

Agents are no different. To work together smoothly, they need agreed-upon rules of engagement, just like web services need well-defined API contracts (think REST, GraphQL, or gRPC).

Standards like Anthropic’s Model Context Protocol (MCP), which defines a common way for models and agents to connect to tools and data sources, let you plug in capabilities built by different teams and reuse them across different LLMs, making the whole setup modular, scalable, and way less brittle.

Without shared standards, agents communicate more like tech bros at Burning Man: lots of enthusiasm, very little coherence.
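Here's what an agreed-upon contract can look like at its smallest: a typed message envelope plus validation at the boundary, much like validating a request body in an API route. The `AgentMessage` shape is made up for illustration and is not the MCP wire format (MCP messages are JSON-RPC 2.0). In a real project, a schema library such as Zod would replace the hand-written checks.

```typescript
// Hypothetical envelope for agent-to-agent messages. This is NOT the MCP wire
// format (MCP uses JSON-RPC 2.0); it only illustrates an agreed-upon contract.
const MESSAGE_TYPES = ["task", "result", "error"] as const;
type MessageType = (typeof MESSAGE_TYPES)[number];

interface AgentMessage {
  version: 1;
  from: string;
  to: string;
  type: MessageType;
  payload: Record<string, unknown>;
}

// Validate untrusted input at the boundary, like validating a request body.
function parseMessage(raw: string): AgentMessage {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    throw new Error("message must be a JSON object");
  }
  const msg = data as Record<string, unknown>;
  if (msg.version !== 1) throw new Error(`unsupported version: ${String(msg.version)}`);
  if (typeof msg.from !== "string" || typeof msg.to !== "string") {
    throw new Error("from/to must be strings");
  }
  if (!MESSAGE_TYPES.includes(msg.type as MessageType)) {
    throw new Error(`unknown message type: ${String(msg.type)}`);
  }
  if (typeof msg.payload !== "object" || msg.payload === null || Array.isArray(msg.payload)) {
    throw new Error("payload must be an object");
  }
  return msg as unknown as AgentMessage;
}

// Usage: the orchestrator hands an order lookup to the Knowledge Agent.
const taskMessage = parseMessage(
  JSON.stringify({
    version: 1,
    from: "Orchestrator",
    to: "KnowledgeAgent",
    type: "task",
    payload: { orderId: "42" },
  }),
);
console.log(taskMessage.to); // logs "KnowledgeAgent"
```

Any agent, whichever team or model built it, can now reject malformed messages loudly instead of guessing what they meant.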

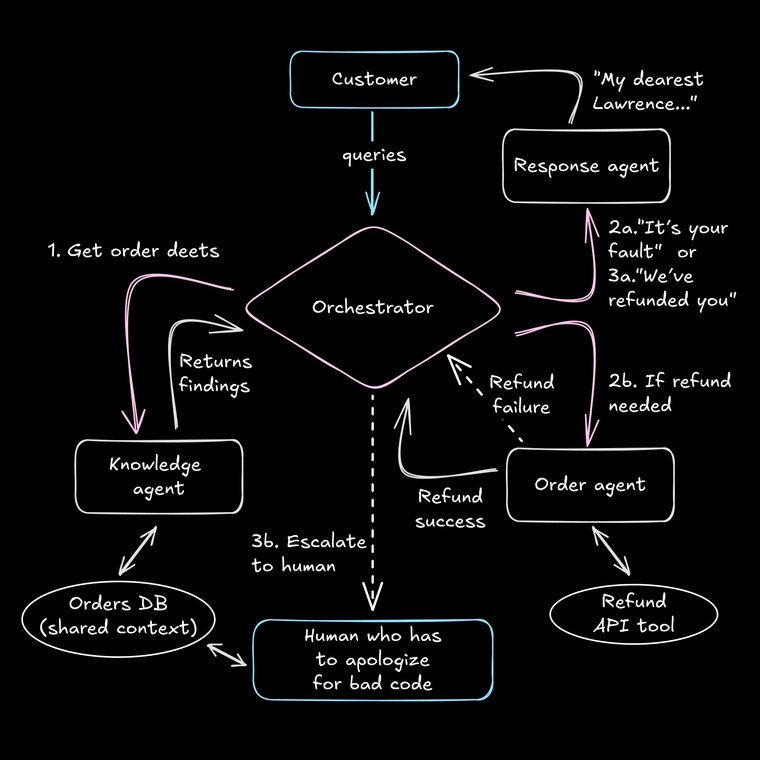

Let's see these concepts in practice with a customer service example.

- Goal: Resolve a customer query.

- Orchestrator: Receives query. Sees it’s order-related. Assigns task to the "Knowledge Agent" via protocol.

- Specialist Agent (Knowledge Agent): Accesses shared context (DB, API tool) for order details. Returns findings to Orchestrator.

- Orchestrator: Monitors progress. If info found, assigns task to “Response Generation Agent.” If unresolved (needs refund), assigns task to “Order Agent.”

- Specialist Agent (Order Agent): Attempts refund via API tool. Fails (needs approval). Reports failure to Orchestrator.

- Orchestrator: Adapts to failure. Escalates by assigning task to the “Human Apology” interface.

- Shared Context Update: The shared customer history is updated throughout. This keeps state consistent for all components.
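Here's that flow as a sketch, with the orchestrator written as plain TypeScript and every agent stubbed out. `knowledgeAgent`, `orderAgentRefund`, and `responseAgent` are hypothetical stand-ins, and the refund is hard-coded to fail so the escalation path actually runs.

```typescript
// Hypothetical stubs standing in for the agents and tools in the example above.
interface Order {
  id: string;
  status: "delivered" | "damaged";
}

type Outcome =
  | { kind: "answered"; reply: string }
  | { kind: "escalated"; reason: string };

const customerHistory: string[] = []; // shared context, updated at every step

// Knowledge Agent: looks up order details (stub: order "42" needs a refund).
function knowledgeAgent(orderId: string): Order {
  customerHistory.push(`looked up order ${orderId}`);
  return { id: orderId, status: orderId === "42" ? "damaged" : "delivered" };
}

// Order Agent: attempts a refund via an API tool (stub: always needs approval).
function orderAgentRefund(order: Order): { ok: boolean; error?: string } {
  customerHistory.push(`refund attempted for ${order.id}`);
  return { ok: false, error: "needs approval" };
}

// Response Generation Agent: an LLM would phrase this naturally.
function responseAgent(order: Order): string {
  return `Your order ${order.id} was ${order.status}.`;
}

// The orchestrator itself is deterministic code; only the specialists would use LLMs.
function handleQuery(orderId: string): Outcome {
  const order = knowledgeAgent(orderId);
  if (order.status !== "damaged") {
    return { kind: "answered", reply: responseAgent(order) };
  }
  const refund = orderAgentRefund(order);
  if (refund.ok) {
    return { kind: "answered", reply: `Refund issued for order ${order.id}.` };
  }
  customerHistory.push(`escalated ${order.id} to a human`);
  return { kind: "escalated", reason: refund.error ?? "unknown" };
}

console.log(handleQuery("42").kind); // logs "escalated"
```

`handleQuery("7")` answers directly, while `handleQuery("42")` walks the refund path, fails, and escalates, with `customerHistory` recording each step.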

This example is a bit contrived, since a lot of parts of this process would usually be handled without LLMs. But AI agents can shine when systems have more ambiguity—like interpreting customer needs and conversing naturally.

That highlights a key point of good agentic systems: use deterministic code whenever possible. AI agents are specialized tools that can handle parts of a workflow requiring complex reasoning, interpretation, or interaction.

Agentic systems aren't always static blueprints. Many are designed to learn and adapt. But how does a system made of multiple AI agents actually get smarter?

First up, a design choice: are your agents static or learners?

Static agents prioritize predictability. Their configuration—instructions, prompts, and available tools—remains fixed. While the underlying LLMs mean they aren't perfectly deterministic like traditional code, this stability makes them reliable for essential, well-defined tasks. Think of them as dependable specialists; you generally know what output to expect, often reinforcing this with strict output validation to ensure consistent results for core logic.

Learning agents, on the other hand, are designed to evolve. They adapt their behavior over time based on feedback, new data, or interactions. This makes them powerful for personalization or tackling problems that change, but it also introduces unpredictability. Their responses might shift as they learn, which can be great for improvement but riskier for core system stability.

Often, you'll want a combination: stable "legacy" agents alongside adaptive learners. Static agents are your intensely boring PHP code; learning agents are your experimental Next.js app that breaks every time you sneeze.

The way these systems get smarter boils down to feedback loops and evaluation—basically, testing and monitoring for AI.

Think of evals like unit tests for AI outputs. You define success: maybe it's schema validation, a rule-based check the output has to pass, or even having another LLM act as a judge. When an agent passes a test, great.

When it fails an eval, though, the system can trigger adjustments, tweaking things via Reinforcement Learning (RL) based on success rates or refining skills with supervised learning on labelled data.

Whatever the method, the key is building in this testability: evals provide the signal, and feedback loops drive the improvement.
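A bare-bones eval harness might look like this. Each hypothetical `EvalCase` pairs an input with a check, the harness reports a pass rate, and a threshold decides whether the feedback loop should kick in. The stub summarizer and the 0.9 threshold are assumptions. An LLM-as-judge would just be another `Check` that calls a model, left out here so the sketch runs offline.

```typescript
// Hypothetical eval harness: each case pairs an input with a check on the output.
type Check = (output: string) => boolean;

interface EvalCase {
  name: string;
  input: string;
  check: Check;
}

interface EvalReport {
  passRate: number;
  failures: string[];
}

// Schema-style check: output must be a JSON object with a string "summary" field.
const isSummaryJson: Check = (output) => {
  try {
    const data = JSON.parse(output);
    return typeof data === "object" && data !== null && typeof data.summary === "string";
  } catch {
    return false;
  }
};

// Rule-based check: output must stay within a character budget.
const maxLength = (limit: number): Check => (output) => output.length <= limit;

function runEvals(agent: (input: string) => string, cases: EvalCase[]): EvalReport {
  const failures = cases.filter((c) => !c.check(agent(c.input))).map((c) => c.name);
  const passRate = cases.length === 0 ? 1 : (cases.length - failures.length) / cases.length;
  return { passRate, failures };
}

// The signal for the feedback loop (prompt fixes, fine-tuning, new static rules).
function needsAdjustment(report: EvalReport, threshold = 0.9): boolean {
  return report.passRate < threshold;
}

// Usage with a stubbed summarizer that chokes on empty input.
const stubSummarizer = (input: string): string =>
  input.trim() === "" ? "Sorry, I can't help with that." : JSON.stringify({ summary: input.slice(0, 40) });

const report = runEvals(stubSummarizer, [
  { name: "short article", input: "Quantum chips got faster this year.", check: isSummaryJson },
  { name: "length budget", input: "A".repeat(500), check: maxLength(100) },
  { name: "empty input", input: "", check: isSummaryJson },
]);

if (needsAdjustment(report)) console.log("Failing evals:", report.failures);
```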

Allowing system components to learn introduces a challenge. How do you keep things stable when parts are constantly changing? Just like updates in complex production environments, one agent's changes might break another agent's workflow.

Unique to AI agents, there's also the risk of "catastrophic forgetting"—where learning a new skill makes an agent forget old ones. (Kinda like how vibe coding has destroyed my ability to write array methods.)

Managing this balance is critical, and you often need careful agent versioning and controlled rollouts (think A/B testing, feature flags, or canary releases), plus constant monitoring. It’s DevOps meets non-determinism, but not in the fun way.
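A percentage-based canary is one simple way to roll out a new agent version. This sketch assumes a hypothetical `AgentVersion` type and hashes the user ID with 32-bit FNV-1a so each user consistently lands on the same version. `canaryPercent` plays the role of a feature flag.

```typescript
// 32-bit FNV-1a hash: deterministic, so a given user always gets the same bucket.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Hypothetical versioned agent.
interface AgentVersion {
  id: string;
  run: (task: string) => string;
}

// Route canaryPercent% of users to the candidate version; everyone else stays on stable.
function pickVersion(
  userId: string,
  stable: AgentVersion,
  canary: AgentVersion,
  canaryPercent: number,
): AgentVersion {
  return fnv1a(userId) % 100 < canaryPercent ? canary : stable;
}

const stableAgent: AgentVersion = { id: "summarizer@1.4", run: (t) => `v1.4: ${t}` };
const canaryAgent: AgentVersion = { id: "summarizer@1.5", run: (t) => `v1.5: ${t}` };

// Start small: roughly 5% of users see the new version.
const chosen = pickVersion("user-123", stableAgent, canaryAgent, 5);
console.log(chosen.id, chosen.run("summarize ticket #9"));
```

Ramp `canaryPercent` up while watching your evals, and set it back to 0 to roll back instantly.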

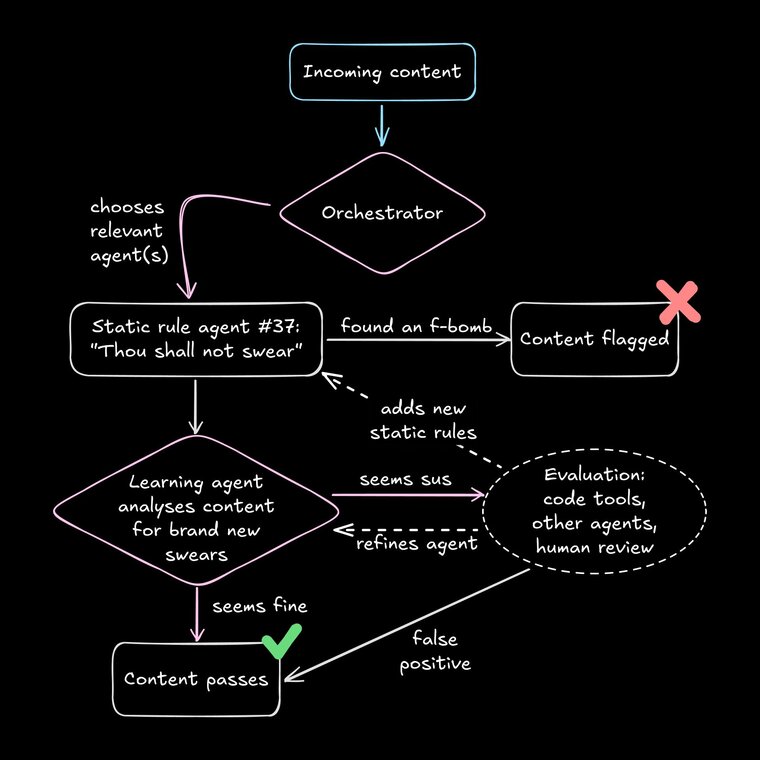

Consider a content moderation system. Static agents can block known bad keywords, and learning agents can identify new types of harmful content.

Evals (automated checks, LLMs, human review) assess accuracy. A feedback loop refines learning agents or flags the need for new static rules.

The orchestrator weighs speed against accuracy, and a careful mix of static and learning agents helps the system avoid catastrophic forgetting. Versioning and controlled rollouts keep the learning agents from breaking things unexpectedly.
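The static-first layering could be sketched like this. The blocklist terms, the `HarmClassifier` stub, and the 0.9/0.6 thresholds are all hypothetical. The point is that the cheap deterministic check runs before the slower learned one, and uncertain scores go to human review, which is where new labeled examples for the feedback loop come from.

```typescript
type Verdict = "allow" | "block" | "human_review";

// Learning layer stand-in: returns a harm score in [0, 1]. A real one would be a
// trained classifier or an LLM call; this type is hypothetical.
type HarmClassifier = (text: string) => number;

// Static layer: a fixed, auditable blocklist (example terms are made up).
const BLOCKED_TERMS = ["buy followers", "spamlink.example"];

function moderate(
  text: string,
  classify: HarmClassifier,
  blockAt = 0.9,
  reviewAt = 0.6,
): Verdict {
  const lower = text.toLowerCase();
  // Cheap deterministic check first, so obvious cases never pay for the classifier.
  if (BLOCKED_TERMS.some((term) => lower.includes(term))) return "block";
  const score = classify(text);
  if (score >= blockAt) return "block";
  // Uncertain scores go to humans; their decisions become labeled data for evals.
  if (score >= reviewAt) return "human_review";
  return "allow";
}

// Usage with a stub classifier that is suspicious of shouting.
const stubClassifier: HarmClassifier = (text) => (text.includes("!!!") ? 0.7 : 0.1);
console.log(moderate("Buy followers cheap", stubClassifier)); // logs "block"
console.log(moderate("You again!!!", stubClassifier)); // logs "human_review"
```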

Alright, we know the building blocks. But how do you actually wire these agents together into something useful?

Just like designing backend systems, you need the right architecture. Let's peek at two common ways to structure your agent crew.

Think classic top-down management, like a traditional org chart or maybe your typical API gateway setup. You've got a central "manager" agent—the orchestrator—calling all the shots.

It decomposes the main goal into sub-tasks and delegates them to specialized “worker” agents below it. All communication is strictly up and down the chain: tasks go down, results come back up to the manager.

This pattern is basically like a main controller function calling a sequence of helper functions.

When it shines: This pattern suits well-defined, sequential workflows, where the steps are pretty predictable, like following a recipe or a standard operating procedure. Great when you need tight control.

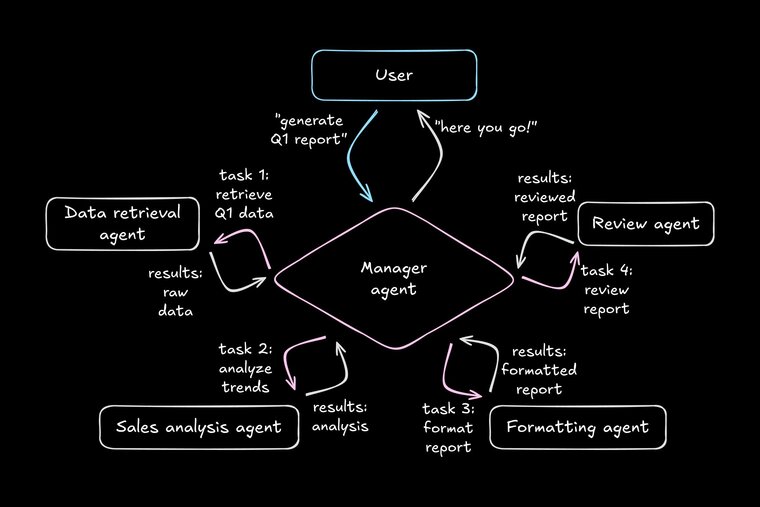

Imagine the boss agent gets a request: “Generate Q1 Sales Report.” Here’s the hierarchy in action:

- Orchestrator: Receives request "Generate Q1 Sales Report." Decomposes task: retrieve data, analyze, format, review.

- Delegation 1: Assigns "Retrieve Q1 data" to `DataRetrievalAgent`.

- Worker 1 (`DataRetrievalAgent`): Queries DBs/APIs. Returns structured data to Orchestrator.

- Delegation 2: Orchestrator receives data. Assigns "Analyze sales trends" (passing data) to `AnalysisAgent`.

- Worker 2 (`AnalysisAgent`): Performs calculations, identifies insights. Returns analysis to Orchestrator.

- Delegation 3: Orchestrator receives analysis. Assigns "Format report" (passing analysis) to `FormattingAgent`.

- Worker 3 (`FormattingAgent`): Structures report (text, charts). Returns draft to Orchestrator.

- Delegation 4: Orchestrator receives draft. Assigns "Review report for compliance" to `ReviewAgent`.

- Worker 4 (`ReviewAgent`): Reviews report. Returns final draft to Orchestrator.

- Final Output: Orchestrator delivers the completed, reviewed report.

Notice the orchestrator is the central hub, directing traffic and handling all the data handoffs. Classic control freak pattern.
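In code, the hierarchy is just a controller function awaiting helpers in order. All four workers below are hypothetical stubs (a real `analysisAgent` might call an LLM, and a real `dataRetrievalAgent` would hit your DB), but the shape of the orchestration is the point: every handoff passes through `generateSalesReport`.

```typescript
// Hypothetical worker agents; in practice each would wrap an LLM call and/or tools.
interface SalesRow {
  region: string;
  revenue: number;
}

interface Analysis {
  total: number;
  topRegion: string;
}

// Worker 1: would query DBs/APIs for the quarter; stubbed with fixed rows here.
async function dataRetrievalAgent(_quarter: string): Promise<SalesRow[]> {
  return [
    { region: "NA", revenue: 120 },
    { region: "EU", revenue: 80 },
  ];
}

// Worker 2: calculations and insights.
async function analysisAgent(rows: SalesRow[]): Promise<Analysis> {
  const total = rows.reduce((sum, row) => sum + row.revenue, 0);
  const top = rows.reduce((best, row) => (row.revenue > best.revenue ? row : best));
  return { total, topRegion: top.region };
}

// Worker 3: turn the analysis into a draft report.
async function formattingAgent(quarter: string, analysis: Analysis): Promise<string> {
  return `${quarter} Sales Report\nTotal revenue: ${analysis.total}\nTop region: ${analysis.topRegion}`;
}

// Worker 4: a stand-in compliance review that rejects drafts missing totals.
async function reviewAgent(draft: string): Promise<string> {
  if (!draft.includes("Total revenue")) throw new Error("review failed: missing totals");
  return draft;
}

// The orchestrator: delegates each step in order and owns every data handoff.
async function generateSalesReport(quarter: string): Promise<string> {
  const rows = await dataRetrievalAgent(quarter); // Delegation 1
  const analysis = await analysisAgent(rows); // Delegation 2
  const draft = await formattingAgent(quarter, analysis); // Delegation 3
  return reviewAgent(draft); // Delegation 4
}

generateSalesReport("Q1").then(console.log);
```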

Forget the central manager.

In this P2P pattern, agents collaborate more like colleagues throwing ideas on a whiteboard or microservices chattering over a message bus. They can share info, grab tasks, or react to events without waiting for top-down orders.

Think of event-driven architectures or pub/sub systems where agents subscribe to topics they care about.

When it shines: This pattern suits problems where you need lots of parallel processing or want agents reacting dynamically to events. Think brainstorming sessions, complex research gathering from many sources, or systems that need to respond quickly to incoming data streams.

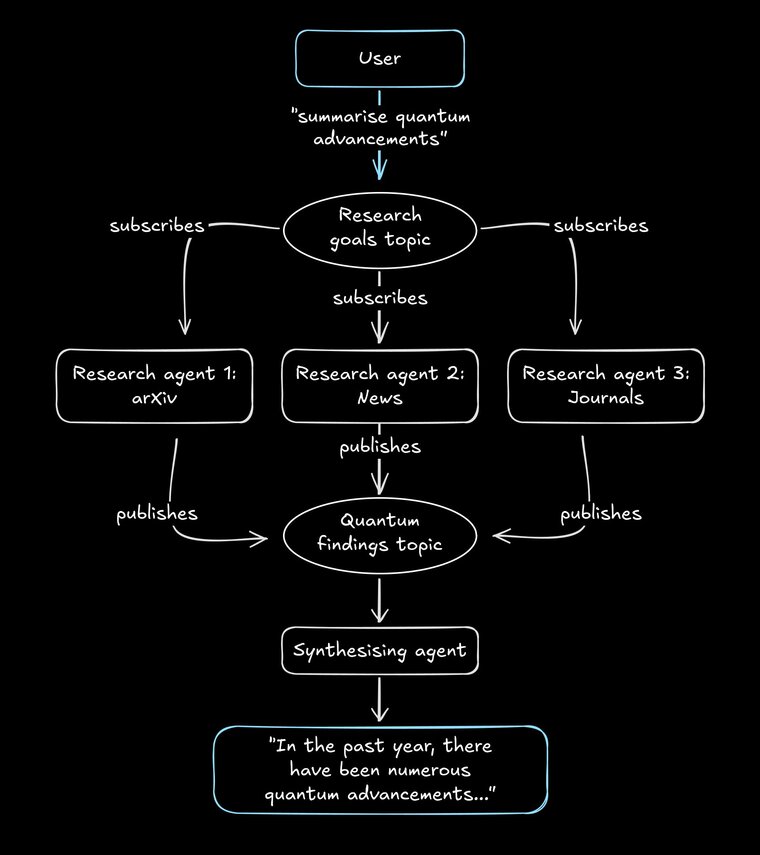

Goal: Summarize recent breakthroughs in quantum computing.

- Goal Dispatch: An initial trigger broadcasts the goal (like publishing to a `ResearchGoals` topic).

- Parallel Research: Multiple specialized `ResearchAgent`s subscribed to the topic grab the goal. One scans arXiv, another parses news sites, and a third checks journals. They all work at the same time.

- Information Sharing (P2P): As each agent finds something juicy, it publishes it to a shared topic (e.g., `QuantumFindings`). No need to report back to a manager.

- Synthesis: A separate `SynthesizingAgent` subscribes to the `QuantumFindings` topic and starts collecting the nuggets as they come in.

- Aggregation & Output: The `SynthesizingAgent` keeps collecting, maybe stopping after a set time or when the flow of new findings dries up, and then outputs the summary.

Notice the difference? Agents work in parallel, share freely, and the Synthesizer just reacts to the available info. No central bottleneck.
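A tiny in-memory pub/sub bus is enough to show the shape. `MessageBus` is a hypothetical stand-in for something like Redis pub/sub or Kafka, and it delivers messages synchronously, so the agents don't actually run in parallel here. In production, each handler would kick off its own async work.

```typescript
// Minimal in-memory pub/sub bus (hypothetical; synchronous delivery for simplicity).
type Handler = (message: string) => void;

class MessageBus {
  private topics = new Map<string, Handler[]>();

  subscribe(topic: string, handler: Handler): void {
    const handlers = this.topics.get(topic) ?? [];
    handlers.push(handler);
    this.topics.set(topic, handlers);
  }

  publish(topic: string, message: string): void {
    for (const handler of this.topics.get(topic) ?? []) handler(message);
  }
}

const bus = new MessageBus();

// Hypothetical research agents: each "searches" one source and publishes findings.
const sources = ["arXiv", "news", "journals"];
for (const source of sources) {
  bus.subscribe("ResearchGoals", (goal) => {
    bus.publish("QuantumFindings", `${source}: finding about "${goal}"`);
  });
}

// The SynthesizingAgent only listens; no agent reports to it directly.
const findings: string[] = [];
bus.subscribe("QuantumFindings", (finding) => findings.push(finding));

bus.publish("ResearchGoals", "recent breakthroughs in quantum computing");
console.log(findings.length); // logs 3
```

Adding a fourth source means adding one more subscriber; nothing else in the system has to change.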

Of course, that’s the best case for P2P architecture. Often, it ends up looking more like a Silicon Valley “flat hierarchy”—which really just means everyone is bottlenecked by your coworker Brenda who’s on PTO with a Slack status of “🍷”.

Okay, so Manager vs. Free-for-All—which is better? Usually, the answer is "a bit of both." The hybrid pattern does exactly what it says on the tin: mixes hierarchical control with P2P collaboration.

Imagine teams of agents working together P2P-style on specific, complex sub-tasks (like the research example), but those teams report back to, and are coordinated by, a higher-level Manager agent who's keeping track of the overall goal.

This gives you a nice balance: strategic control from the top, but flexibility and parallelism down where the work gets done. Honestly, most complex, real-world agent systems probably end up looking a lot like your business’s org chart. There’s a reason the structure works.

Choosing the right pattern boils down to your specific needs: how much control vs. flexibility you need, how complex the task is, and the usual system design trade-offs you're already familiar with.

Okay, building these agent systems sounds cool, right? And it is! But let's be real, moving from a single LLM call to a whole crew of agents working together isn't exactly a walk in the park. It brings a new set of headaches.

If you're diving in, here are some of the lovely hurdles you might encounter:

- Resource management: Suddenly, you're not making one LLM call, you're making dozens, maybe hundreds. Plus tools firing off API calls... yeah, your cloud bill and token counts can explode if you're not careful. Time for a second mortgage.

- Conflict resolution: What happens when `AgentA` says the answer is "42" but `AgentB` insists it's "Blue"? Or they both try to update the same database record at once? Figuring out how to handle disagreements or conflicting actions without breaking everything is... fun.

- Maintaining coherence: If one agent is as smart as DeepSeek and the next is as dumb as Siri, your user is gonna be confused. Keeping a consistent tone and logical flow when multiple agents are involved in a single interaction is tougher than it sounds.

- Debugging complexity: Imagine debugging something where the components themselves are non-deterministic. Was the bug in the code, the prompt, the agent's reasoning, or the interaction between agents? It’s a real problem, though there has been a lot of progress on it lately.

- Ensuring safety and alignment: Careful system-level constraints and monitoring help you make sure your agent crew doesn’t optimize for paperclip count.

- Ethics of complex systems: Whose fault is it when something does go wrong and you can’t even pinpoint the issue? Blaming the Roomba doesn’t scale.
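For the "same database record at once" half of conflict resolution, web developers already have a tool: optimistic concurrency, the same idea behind HTTP `ETag`/`If-Match` conditional requests. The hypothetical `VersionedStore` below accepts a write only if the record's version hasn't changed since the agent read it. It won't settle a "42" vs. "Blue" disagreement, which still needs a policy such as voting or a judge agent.

```typescript
// Each record carries a version number that increments on every successful write.
interface VersionedRecord<T> {
  version: number;
  value: T;
}

// Hypothetical in-memory store with compare-and-set writes.
class VersionedStore<T> {
  private records = new Map<string, VersionedRecord<T>>();

  read(key: string): VersionedRecord<T> | undefined {
    return this.records.get(key);
  }

  // The write succeeds only if nobody else wrote since the caller's read.
  write(key: string, value: T, expectedVersion: number): boolean {
    const current = this.records.get(key)?.version ?? 0;
    if (current !== expectedVersion) return false; // stale read: re-read and retry
    this.records.set(key, { version: current + 1, value });
    return true;
  }
}

const store = new VersionedStore<string>();
store.write("customer:7:status", "open", 0);

// AgentA and AgentB both read version 1, then race to write.
const seenByA = store.read("customer:7:status")!.version;
const seenByB = store.read("customer:7:status")!.version;
const aWon = store.write("customer:7:status", "refunded", seenByA); // true
const bWon = store.write("customer:7:status", "escalated", seenByB); // false: version moved on
console.log(aWon, bWon, store.read("customer:7:status"));
```

The losing agent gets an explicit `false` instead of silently clobbering the other agent's work, so the orchestrator can decide whether it should re-read and retry.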

Okay, let's address the elephant in the room: Is AI coming for your web developer job?

Sure, for some parts.

But building, managing, and wrangling these complex agentic systems requires more engineers, not fewer. Someone has to actually make this stuff, and it looks a lot like the work we already do.

Think building these systems requires a whole new brain? Nope. The skills you've honed building complex web apps translate surprisingly well:

- System design and architecture: Choosing between microservices, monoliths, event buses? That's agent system design. Deciding if you need a "Manager" or "P2P" setup? Same skills.

- State management: Every argument you’ve ever had about global state is ready to be had again, now with more hand waving.

- API integration and tooling: Agents need "tools" to act in the world—aka, fancy API wrappers. You've been building reliable API clients for years.

- Debugging and o11y: Debugging distributed systems is hard. Debugging non-deterministic distributed systems is harder. But your debugging instincts and love for good logging? More essential than ever. We need engineers who know how to build transparently. Maybe more than anything else.

- User Experience (UX): Making complex systems usable for humans is just good UX. Figuring out how users interact with, trust, and correct AI agents is the next frontier of UX design, and I’m sure we haven’t seen even 1% of what human-AI interactions can look like.
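To see how literal the "fancy API wrapper" description is, here's a sketch of a tool definition. The `Tool` shape and `get_order_status` are hypothetical and don't match any specific framework's API, though most frameworks ask for something similar: a name and description the model reads, a parameter schema, and an `execute` function. The HTTP call is injected so the example runs without a network.

```typescript
// Hypothetical tool shape, not any specific library's API. The description and
// parameter schema are what the model "sees" when deciding to call the tool.
interface Tool<Args, Result> {
  name: string;
  description: string;
  parameters: Record<string, { type: "string" | "number"; description: string }>;
  execute: (args: Args) => Promise<Result>;
}

interface OrderStatus {
  id: string;
  status: string;
}

// Inject the HTTP call so the tool is testable without a network (or an LLM).
type FetchOrder = (id: string) => Promise<OrderStatus>;

function makeOrderStatusTool(fetchOrder: FetchOrder): Tool<{ orderId: string }, OrderStatus> {
  return {
    name: "get_order_status",
    description: "Look up the current status of a customer order by its ID.",
    parameters: {
      orderId: { type: "string", description: "Numeric order ID, e.g. '42'" },
    },
    // Same habits as any API client: validate input before calling the backend.
    execute: async ({ orderId }) => {
      if (!/^\d+$/.test(orderId)) throw new Error(`invalid order id: ${orderId}`);
      return fetchOrder(orderId);
    },
  };
}

// Fake backend for the example; in production this would call your real API.
const fakeFetch: FetchOrder = async (id) => ({ id, status: "shipped" });
const orderTool = makeOrderStatusTool(fakeFetch);

orderTool.execute({ orderId: "42" }).then((result) => console.log(result.status)); // logs "shipped"
```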

The next time you see a role called “AI engineer,” don’t just assume the company needs someone with three advanced ML degrees. Think about how your existing experience can cross-apply.*

*(No, sorry, your ability to debug Next.js hydration errors will never, ever matter.)

So, how can you get your hands dirty?

Start by understanding core concepts, like how LLMs work at a high level, and exploring common agentic patterns.

Experimenting is key, so grab a framework like LangChain or the AI SDK—or even mess around with visual tooling like n8n (a personal favorite of mine).

Start with small features, and then try to scale them up. Worried about cost? Great. Cross-reference the Chatbot Arena leaderboard with OpenRouter prices, and see how far you can get with a tiny model and a $5 credit limit. Or self-host a model with Ollama, and pray your laptop doesn’t combust.

Remember: the LLM shouldn’t be doing the heavy lifting; your infra should. Figure out what LLMs are good at (writing Shakespearean error messages), and what code is good at, and use the best tool for the job.

All in all, I’m guessing you’ll find the barrier to entry a bit lower than you imagined—especially since you can just ask AI for help every time you get confused.

The buzz isn't just about AI getting smarter, but about AI getting more tangible stuff done by working together in organized systems.

While you could argue agentic AI will eventually touch pretty much everything, where will we see the needle move in the next year or two? Probably in the kind of complex, multi-step workflows that need to roll with the punches when things don't go perfectly.

We're already seeing early versions pop up for tasks like:

- Supercharged customer support: Not just canned chatbot answers, but systems that actually dig into a customer's history, figure out what they need, maybe process a return or update an order using real tools, and loop in a human if things get hairy.

- Smarter dev assistants: Moving beyond autocomplete and simple function definition to tools that can chew on requirements, sketch out an implementation plan across multiple files, bang out the code, run the tests, and iteratively fix until those tests pass.

- On-demand data deep dives: Instead of just fetching rows, agents can clean up messy data, run multi-stage analyses ("find me the weird correlations in Q3 sales, but ignore the test accounts"), whip up charts, and give you the TL;DR in plain English. Deep research will only get better from here.

- Automating the annoying bits: Stitching together different apps and internal tools to handle those tedious cross-system workflows—like automatically enriching a new lead, checking if they're already in the CRM, adding them if not, assigning them based on rules, and pinging the right sales rep.

Basically, any system you can think of that can mostly be defined by a casual "if/then" summary? You can probably wire it up with agents.

Of course, actually getting this stuff right means tackling some big open questions, but building robust, responsible, and genuinely useful systems out of messy parts is what web developers do. Our knack for system design, state management, API wrangling, and building for real humans puts us right in the driver's seat for this next wave.

So, let's get building.

Builder.io visually edits code, uses your design system, and sends pull requests.
