The fastest Movie in the West!

Introduction

Shipping performant applications is not an easy task. It’s probably not your fault that the app you’re working on is slow. But why is it so difficult? How can we do better?

In this article, we’ll try to understand the problem space and how new-generation frameworks might be the way forward for the industry.

Google has been trailblazing the way we measure performance, and nowadays Core Web Vitals (CWV) are the de facto standard for telling whether a site's performance delivers a good user experience.

The three main metrics measured are:

- Largest Contentful Paint (LCP) - how fast a user can see something meaningful on your page.

- First Input Delay (FID) - how fast a user can interact with your content, e.g., can a button or drop-down be clicked/touched and work?

- Cumulative Layout Shift (CLS) - how much content moves around or changes unexpectedly, which interrupts the user experience.
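For reference, Google publishes a "good" and a "poor" threshold for each of these metrics, assessed at the 75th percentile of page loads: LCP 2.5 s / 4 s, FID 100 ms / 300 ms, and CLS 0.1 / 0.25. The sketch below classifies single values against those thresholds. The names `rate` and `passesCoreWebVitals` are our own, for illustration; they are not part of any Google API.

```typescript
// Core Web Vitals thresholds. LCP and FID are in milliseconds;
// CLS is a unitless layout-shift score.
type Metric = "LCP" | "FID" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

// [upper bound for "good", upper bound for "needs improvement"]
const THRESHOLDS: Record<Metric, [number, number]> = {
  LCP: [2500, 4000], // ms
  FID: [100, 300],   // ms
  CLS: [0.1, 0.25],  // unitless
};

function rate(metric: Metric, value: number): Rating {
  const [good, needsImprovement] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= needsImprovement) return "needs-improvement";
  return "poor";
}

// A page passes the CWV assessment only if every metric is rated "good".
function passesCoreWebVitals(values: Record<Metric, number>): boolean {
  return (Object.keys(THRESHOLDS) as Metric[]).every(
    (m) => rate(m, values[m]) === "good",
  );
}

console.log(rate("LCP", 3200)); // → needs-improvement
console.log(passesCoreWebVitals({ LCP: 1800, FID: 40, CLS: 0.05 })); // → true
```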

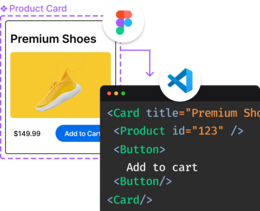

With these measurements, an overall score from 0 to 100 can be derived; generally, a score of 90 or above is considered good. You can measure these metrics yourself under the “Lighthouse” tab of your browser's dev tools, or with a Google tool such as PageSpeed Insights.

Almost every application built in recent years uses some sort of framework, such as React, Angular, Vue, or Svelte. In fact, a large part of the code shipped to users is framework code. Increasingly, though, we see meta-frameworks such as Next.js, Nuxt, Remix, and SvelteKit, which take advantage of Server-Side Rendering (SSR), a long-established technique for rendering HTML on the server. The upside of this approach is that content can be shown to the user earlier than with a pure SPA, which has to build the HTML with JavaScript. The downside is that there may be a cost for the application to boot up before the user can interact with it; this boot-up process is what's called hydration.

Comparing between frameworks

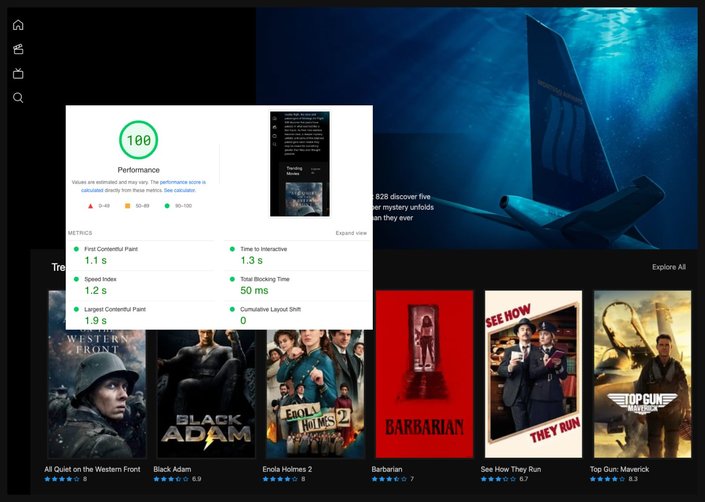

To get some context and data from the wild, we can use the Chrome User Experience Report (CrUX), or, more easily, the data extracted by the amazing Dan Shappir in his recent article in Smashing Magazine.

Dan compared performance between frameworks by looking at websites built with them and only those that have a green CWV score, which should provide the best user experience.

His conclusion is that you can build performant websites with most of the current frameworks, but you can also build slow ones. He also mentions that using a meta framework (or web application framework, as he calls it) is not a silver bullet for creating performant applications, despite their SSG / SSR capabilities.

TasteJS encourages building the same applications across frameworks so that developers can compare the ergonomics and performance of these solutions.

For raw data and the methodology, see our TasteJS Movies Comparison spreadsheet.

Disclaimer: Perf measurement is hard! Chances are we did something wrong! Also, these are never apples-to-apples comparisons, so think of this more as a general discussion of trade-offs than as the final word on performance. Please let us know if we could improve our methodology or if you think we missed something. Do note that the versions of the different frameworks are not the latest, nor do they reflect the best stack choices. For example, the Next.js app in these tests uses Next.js 12.2.5, React 17.0.2, and Redux. This is not in line with the new and improved approaches the Vercel team has implemented in Next.js 13, which leverage React 18, React Server Components, and concurrent features, and generally reduce client-side JS.

General methodology: For PageSpeed Insights data, we ran each test 3 times and selected the highest of the 3 scores. Also, these tests are somewhat flaky: one day they show one score, and the next day the score deviates by 5-10 points up or down.

It is also worth noting that the deploy targets aren't the same, which is another variable affecting performance.
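The selection rule can be written down explicitly. The sketch below keeps the best of several runs, as in our methodology, but also reports the spread, so that a 5-10 point swing between days is visible next to the score. `summarizeRuns` is a hypothetical helper, and the scores in the usage line are placeholders, not numbers from our spreadsheet.

```typescript
// Summary of repeated PageSpeed runs for one app.
interface RunSummary {
  best: number;   // the score we report (highest run)
  worst: number;  // lowest run
  spread: number; // best - worst: how noisy this app's measurements were
}

function summarizeRuns(scores: number[]): RunSummary {
  if (scores.length === 0) throw new Error("need at least one run");
  const best = Math.max(...scores);
  const worst = Math.min(...scores);
  return { best, worst, spread: best - worst };
}

// Hypothetical scores for three runs of the same app.
console.log(summarizeRuns([91, 97, 94])); // → { best: 97, worst: 91, spread: 6 }
```

When two apps' best scores differ by less than their spreads, the difference is within the noise.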

Updated: 11.23 - Some apps may have been worked on since the time of writing this post.

A Qwik community member, @wmalarski, has created a Qwik version of the Movies app [repo] [site]. This is an excellent opportunity to compare the performance of the different implementations.

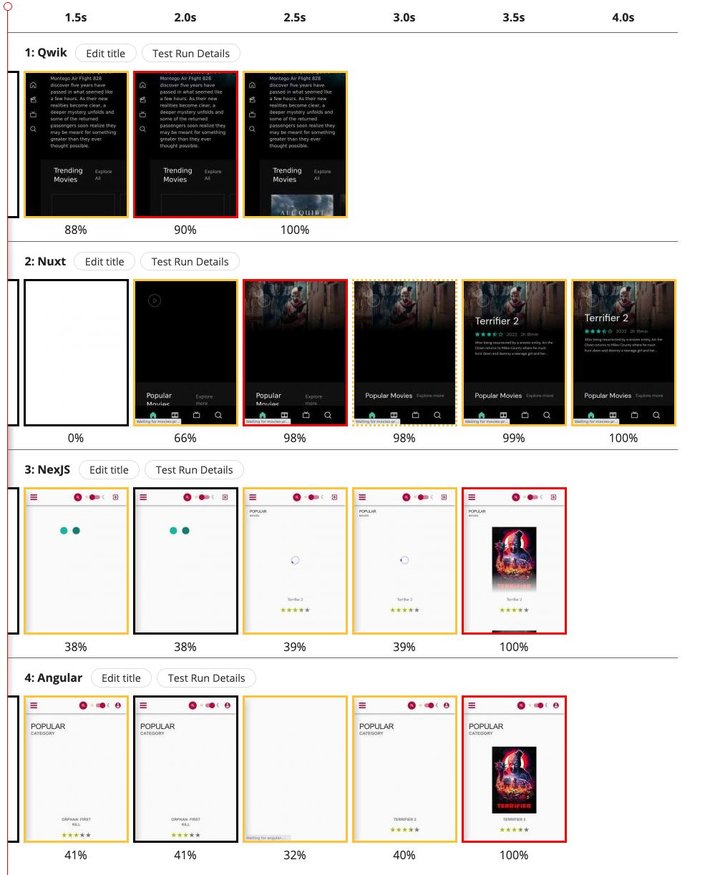

The kind of performance we are interested in is startup performance. How long does it take from the time I navigate to a page until I can interact with that page so that I can get the information I want? Let's start with the filmstrip to get a high-level overview of how these frameworks perform.

The filmstrips above show that the Qwik version delivers content faster than the other versions (i.e., better FCP, First Contentful Paint, as highlighted by the red box). This is because Qwik is SSR/SSG first (Server-Side Rendering / Static Site Generation) and specifically focuses on this use case. The text shows up on the first frame and the image shortly after. The other frameworks show a clear progression of rendering where the text content is delivered over many frames, implying client-side rendering. (It's worth noting that although Next.js includes server rendering by default, the Next.js Movies app does not seem to fully server-render its pages, which is likely affecting its results.) Angular Universal is an interesting outlier: it shows the text content immediately, then goes to a blank page, only to re-render the content (I believe this is because Angular does not reuse the DOM nodes during hydration).

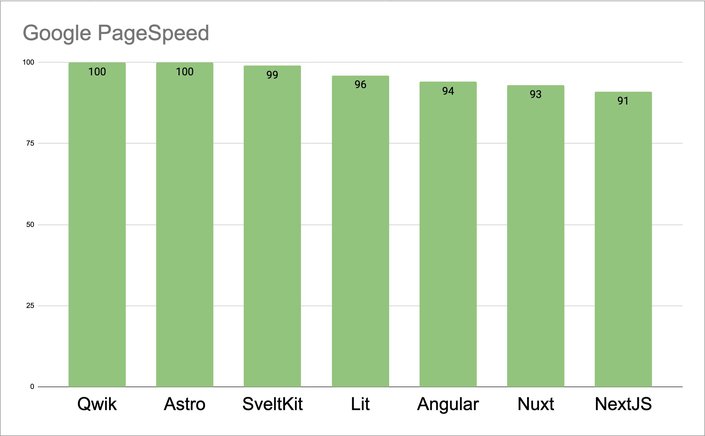

Let's look at the corresponding Page Speed Scores.

We can see that all of the frameworks score high and in the green. But I want to pause here and point out that these are not real-world apps but idealized, simplified examples of an application. A real-world application would have a lot more code, such as preferences, localization, analytics, and so much more, which means even more JavaScript. So we would expect a real-world implementation to put even greater pressure on browser startup. All of these demos should be getting 100/100 at this point without the developer even trying, because unless you can get 100/100 on a demo implementation, a real-world app is unlikely to score anything reasonable.

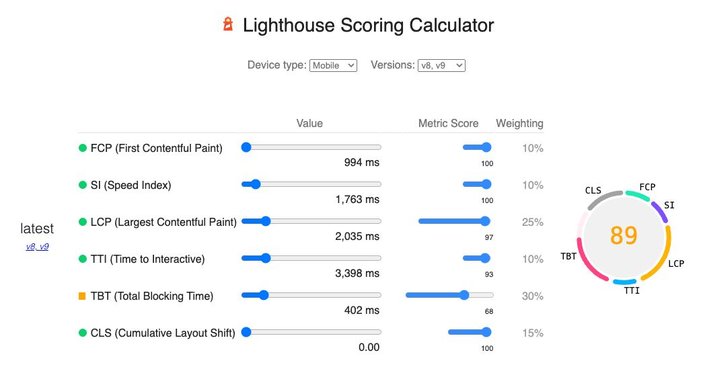

Let's dive deeper into how PageSpeed is calculated.

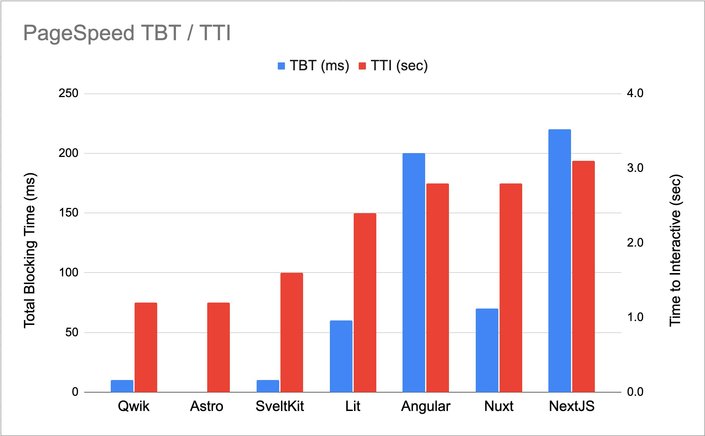

Key drivers of PageSpeed are TBT (Total Blocking Time) and LCP (Largest Contentful Paint). I will also include TTI (Time to Interactive) because that is also JavaScript-dependent.
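To see why TBT and LCP dominate, here is a sketch of how Lighthouse combines metrics into the performance score. Lighthouse first maps each raw metric to a 0-1 score using a log-normal curve, then takes a weighted average. The weights below are those of Lighthouse v8, the scoring version that still included TTI; later versions changed them (v10 removed TTI from the score). The per-metric scores are taken as inputs here rather than derived from raw timings, and the function name is our own.

```typescript
// Lighthouse v8 performance-score weights (they sum to 1).
// TBT (30%) and LCP (25%) together account for more than half of the score.
const LIGHTHOUSE_V8_WEIGHTS = {
  FCP: 0.10,
  SI: 0.10, // Speed Index
  LCP: 0.25,
  TTI: 0.10,
  TBT: 0.30,
  CLS: 0.15,
} as const;

type LhMetric = keyof typeof LIGHTHOUSE_V8_WEIGHTS;

// metricScores: each metric's already-computed score in the range 0..1.
function performanceScore(metricScores: Record<LhMetric, number>): number {
  let total = 0;
  for (const m of Object.keys(LIGHTHOUSE_V8_WEIGHTS) as LhMetric[]) {
    total += LIGHTHOUSE_V8_WEIGHTS[m] * metricScores[m];
  }
  return Math.round(total * 100);
}

const allPerfect = { FCP: 1, SI: 1, LCP: 1, TTI: 1, TBT: 1, CLS: 1 };
console.log(performanceScore(allPerfect));                  // → 100
console.log(performanceScore({ ...allPerfect, TBT: 0.5 })); // → 85
```

Halving TBT's metric score alone costs 15 points, which is why the amount of JavaScript executed at startup weighs so heavily on the result.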

Let's define them quickly and then explain more about them:

- TBT (Total Blocking Time): measures the total amount of time between First Contentful Paint (FCP) and Time to Interactive (TTI) during which the main thread was blocked long enough to prevent input responsiveness.

- LCP (Largest Contentful Paint): LCP measures the time from when the user initiates loading the page until the largest image or text block is rendered within the viewport.

- TTI (Time to Interactive): The amount of time it takes for the page to become fully interactive.
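One detail of the TBT definition is worth spelling out: only the portion of each main-thread task beyond 50 ms counts as blocking. The sketch below computes TBT from a list of tasks. The `Task` shape is our own, and it simplifies by counting only tasks that start inside the FCP-to-TTI window, without clipping tasks at the window edges.

```typescript
// A main-thread task, in milliseconds since navigation start.
interface Task {
  start: number;
  duration: number;
}

const LONG_TASK_THRESHOLD_MS = 50;

// For each task between FCP and TTI, only the time beyond 50 ms is blocking.
// Simplification: tasks are included if they start inside [fcp, tti).
function totalBlockingTime(tasks: Task[], fcp: number, tti: number): number {
  return tasks
    .filter((t) => t.start >= fcp && t.start < tti)
    .reduce(
      (sum, t) => sum + Math.max(0, t.duration - LONG_TASK_THRESHOLD_MS),
      0,
    );
}

const fcp = 1000;
const tti = 3000;

// One 250 ms startup task vs. the same work split into five 50 ms chunks.
console.log(totalBlockingTime([{ start: 1200, duration: 250 }], fcp, tti)); // → 200
const chunked: Task[] = Array.from({ length: 5 }, (_, i) => ({ start: 1200 + i * 60, duration: 50 }));
console.log(totalBlockingTime(chunked, fcp, tti)); // → 0
```

This is why splitting startup work into small chunks lowers TBT even when the total amount of work stays the same.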

As long as the application delivers the content through SSR/SSG, and images are optimized, it should get a good LCP. In this sense, no particular framework has any advantage over any other framework as long as it supports SSR/SSG. Optimizing LCP is purely in the developer's hands, so we are going to ignore it in this discussion.

The place where frameworks do have influence is in TBT and TTI, as those numbers are directly related to the amount of JavaScript the browser needs to execute. The less JavaScript, the better!

Both TBT and TTI are important. TBT captures how much of the startup work happens in long, unbroken chunks that block the main thread. A lot of frameworks break up the work into smaller chunks to spread the workload. Qwik has a particular advantage here because it uses resumability, which is essentially free: it only requires deserializing the JSON state, which is fast.

TTI measures the amount of time until no more JavaScript is running. Qwik again has an advantage because resumability allows the framework to skip almost all initialization code to get the application running.

The low cost of resumability is clearly evident in the graph above, where Qwik has the least TBT and TTI. It is worth pointing out that the TBT/TTI cost should stay relatively constant with resumability, even as the application gets more complex. With hydration, the TTI will increase as the application gets larger.
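The hydration-versus-resumability argument can be made concrete with a deliberately simplified model. This is a toy, not Qwik's API or implementation: the `Component` shape and the `hydrate`, `resume`, and `loadHandler` names are hypothetical. Hydration re-runs every component's setup on the client; resuming only parses state that the server serialized into the page and loads a handler the first time its event fires.

```typescript
// Toy model only, not Qwik's real API or internals. It counts how much
// component code the client runs before the page becomes interactive.
type State = { count: number };
type Handler = (state: State) => void;

interface Component {
  name: string;
  setup: () => State; // computes the initial state
  onClick: Handler;   // event handler code
}

let setupCalls = 0;     // component setup functions executed on the client
let handlersLoaded = 0; // handler "chunks" the client had to load

function makeComponent(name: string): Component {
  return {
    name,
    setup: () => {
      setupCalls++;
      return { count: 0 };
    },
    onClick: (state) => {
      state.count++;
    },
  };
}

// Hydration: re-run every component's setup on the client and attach every
// handler eagerly, so the startup cost grows with the size of the app.
function hydrate(components: Component[]) {
  const states = new Map<string, State>();
  const handlers = new Map<string, Handler>();
  for (const c of components) {
    states.set(c.name, c.setup());
    handlers.set(c.name, c.onClick);
    handlersLoaded++;
  }
  return { states, handlers };
}

// Resumability: the server already ran setup and serialized the state into
// the HTML, so the client only parses that JSON. A handler is loaded (here,
// via the hypothetical `loadHandler` callback) the first time its event fires.
function resume(serializedState: string, loadHandler: (name: string) => Handler) {
  const states: Record<string, State> = JSON.parse(serializedState);
  const cache = new Map<string, Handler>();
  return (name: string): State => {
    let handler = cache.get(name);
    if (!handler) {
      handler = loadHandler(name);
      cache.set(name, handler);
      handlersLoaded++;
    }
    handler(states[name]);
    return states[name];
  };
}

// Usage: 100 components, hydrated vs. resumed.
const components = Array.from({ length: 100 }, (_, i) => makeComponent(`c${i}`));
const byName = new Map(components.map((c): [string, Component] => [c.name, c]));

hydrate(components);
console.log(setupCalls, handlersLoaded); // → 100 100

setupCalls = 0;
handlersLoaded = 0;
const serverState: Record<string, State> = {}; // what the server embeds in the page
for (const c of components) serverState[c.name] = { count: 0 };
const dispatchClick = resume(JSON.stringify(serverState), (name) => byName.get(name)!.onClick);
console.log(setupCalls, handlersLoaded); // → 0 0
dispatchClick("c7");
console.log(handlersLoaded); // → 1
```

In the model, hydration cost scales with the number of components, while resuming costs one `JSON.parse` plus whatever handlers the user actually triggers.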

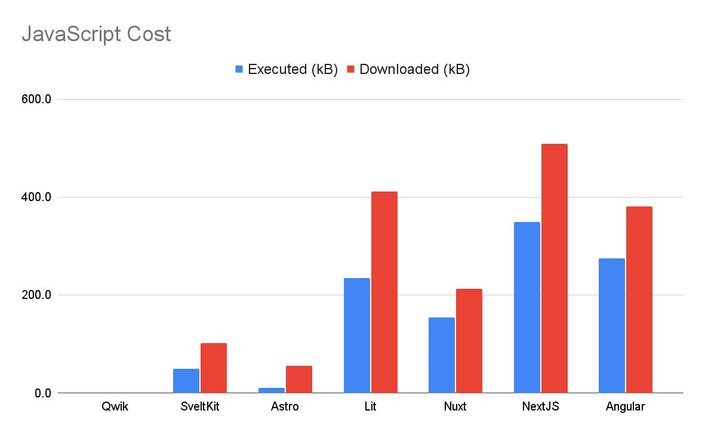

Now let's look at the amount of JS delivered to the browser at startup.

This graph shows the amount of JavaScript delivered to the browser (red) and how much of that JavaScript was executed (blue). Lower is better. We are less likely to have large TBT and TTI with less JavaScript to execute. Delivering and executing less code is the framework's primary influence on application startup performance.

I will claim that your TBT/TTI is directly proportional to the amount of initial code delivered and executed in the browser. Frameworks need to be in the “lazy loading and bundling” business to have any chance of delivering less code. This is harder than it sounds, because a framework needs a way to break up the code so that it can be delivered in chunks, and a way to determine which chunks are needed on startup and which are not. Except for Qwik, most frameworks keep bundling out of their scope. The result is overly large initial bundles that hurt startup performance.

It is not about writing less code (all of these apps have similar complexity) but about being intelligent about which code must be delivered and executed on startup. Again, these can be complex apps, not just static pages.
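The second requirement, knowing which chunks are needed on startup, can be sketched as a graph walk: starting from the entry chunk, follow only static imports, and defer anything reachable solely through a lazy edge (such as a dynamic `import()`). The module graph, chunk names, and sizes below are hypothetical, chosen only to illustrate the idea.

```typescript
// A chunk in a hypothetical module graph. `imports` are static dependencies
// (needed as soon as the importer runs); `lazy` edges are only crossed later,
// e.g. through a dynamic import() triggered by user interaction.
interface Chunk {
  sizeKb: number;
  imports: string[];
  lazy: string[];
}

type Graph = Record<string, Chunk>;

// Depth-first walk over static edges only: everything reached this way must
// be downloaded at startup. Lazy edges are deliberately not followed.
function startupChunks(graph: Graph, entry: string): Set<string> {
  const needed = new Set<string>();
  const stack: string[] = [entry];
  while (stack.length > 0) {
    const id = stack.pop()!;
    if (needed.has(id)) continue;
    needed.add(id);
    stack.push(...graph[id].imports);
  }
  return needed;
}

function sizeOf(graph: Graph, ids: string[]): number {
  return ids.reduce((total, id) => total + graph[id].sizeKb, 0);
}

// Hypothetical chunks and sizes.
const graph: Graph = {
  main: { sizeKb: 5, imports: ["runtime"], lazy: ["searchBox", "movieDetail"] },
  runtime: { sizeKb: 10, imports: [], lazy: [] },
  searchBox: { sizeKb: 25, imports: ["runtime"], lazy: [] },
  movieDetail: { sizeKb: 40, imports: ["runtime"], lazy: ["trailerPlayer"] },
  trailerPlayer: { sizeKb: 60, imports: ["runtime"], lazy: [] },
};

const startup = startupChunks(graph, "main");
const all = Object.keys(graph);
const atStartup = all.filter((id) => startup.has(id));
const deferred = all.filter((id) => !startup.has(id));
console.log(atStartup, sizeOf(graph, atStartup)); // → [ 'main', 'runtime' ] 15
console.log(deferred, sizeOf(graph, deferred));
```

The walk itself is trivial; the hard part for a framework is producing a graph in which most edges are lazy, which is exactly the bundling work most frameworks leave out of scope.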

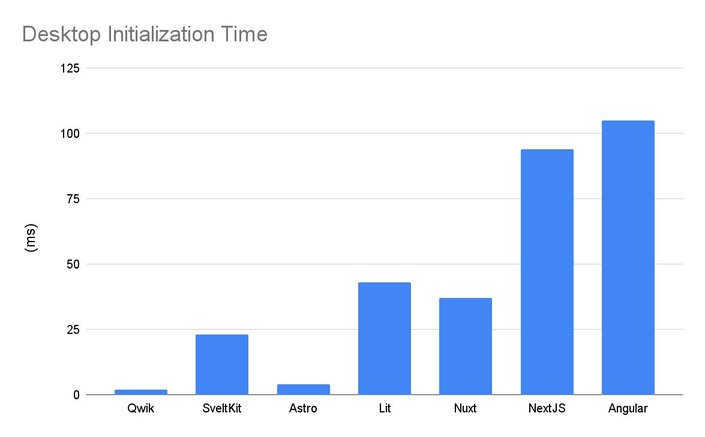

TBT/TTI is PageSpeed's way of looking at startup performance. But there is another way to look at it: the total amount of time spent executing JavaScript before the application's initialization is complete.

WOW, look at the difference between the fastest and slowest. Here, the slowest takes 25 times longer to initialize than the fastest. But even the second fastest takes ten times longer than the fastest. Once again, it shows how much cheaper resumability is than hydration. And this is for a demo app; the difference would become even more pronounced in real-world applications with more code.

This is rigged

OK, this whole comparison is rigged and unfair. If you look closer at the graph, you realize that startup performance is directly correlated with the amount of JS shipped/executed on the client. This is both exciting and scary! Exciting because it means you need to ship less JavaScript to get good perf. Scary because it means that as your application gets bigger, it will get slower. In a way, this is not surprising, but the implication is that none of these demos is a good predictor of how a real-world application will perform, as a real-world application will have a lot more JavaScript than these demos.

Now, I said this is rigged. The reason why Qwik is cheating is that Qwik knows how not to download and execute most of the application code. That is because resumability gives Qwik a unique advantage. Qwik can keep the initial amount of JavaScript relatively constant even as the application grows.

The total amount of JavaScript created for the Qwik application was 190kB, of which almost nothing was downloaded and executed on startup. The Qwik application was ported from the Nuxt solution, so it is not surprising that the Qwik and Nuxt solutions are about 200kB each, yet Qwik had to download and execute close to zero JS. This difference will only get more drastic as the application grows.

It should not be the developer's problem

Qwik's goal is to allow performant applications without any investment on the developer's part. Qwik applications are fast because Qwik is good at minimizing the amount of code that needs to be executed at startup and then not shipping the unneeded code. Unlike other frameworks, Qwik has an explicit goal to own the bundling, lazy-loading, pre-fetching, serializing, and SSR/SSG of the application because that is the only way that Qwik can ensure that only the minimal amount of JavaScript needed will execute on startup. Other frameworks either don't focus on these responsibilities or delegate them to meta-frameworks, which have limited ability to influence the outcome.

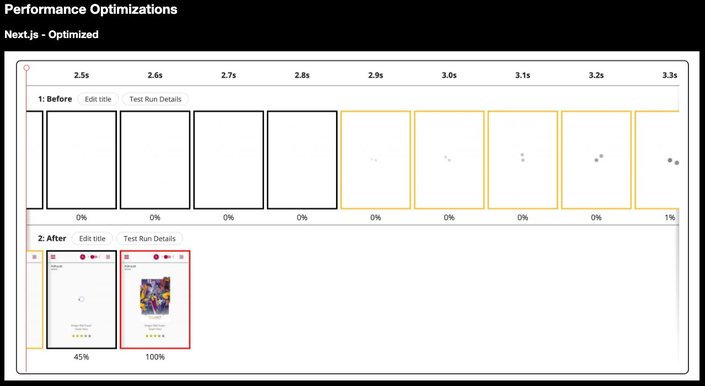

I find it telling that the other solutions presented on the TasteJS site include "optimized" versions, implying that the initial solution was not ideal and that a developer spent time "optimizing" it. The Qwik applications presented have not been optimized in any way.

Conclusion

“Movies” is a relatively simple app that does not approach the complexity of real-world applications. As such, all frameworks should get 100/100 at this point without the developer doing any optimization. If you can't get 100/100 on a demo, there is no hope of getting anything close to 100/100 in real production applications that deal with real-world complexity.

These comparisons show that application startup performance is directly correlated to the amount of JS that the browser needs to download and execute on startup. Too much JS is the killer of application startup performance! The frameworks which do best are the ones that deliver and execute the least amount of JS.

The implication of the above is that frameworks should take it as their core responsibility to minimize the amount of JS the browser must execute on startup. It is not about developers writing less JS or manually tweaking and marking specific areas for lazy loading, but about frameworks not requiring all JS to be delivered and executed upfront.

Finally, while there is always space for developer optimization, it is not something that the developer should do most of the time. The framework should just produce optimized output by default, especially on trivial demo applications.

This is why I believe that a new generation of frameworks is coming, which will focus not just on developer productivity but also on startup performance through minimizing the amount of JavaScript that the browser needs to download and execute on startup.

Nevertheless, the future of JS frameworks is exciting. As we’ve seen from the data, Astro is doing some things right alongside Qwik. Other noteworthy frameworks, such as Marko and Solid, are also paving the path forward with similar traits and strong performance benchmarks. We’ve come full circle in web development, from PHP/Rails to SPAs and now back to SSR. Maybe we just need to break the cycle.
