Large Language Models (LLMs) are changing how we build software, and not in that vague "AI is the future" way that people have been saying for years.

The tool that transforms a Figma design into a fully responsive website? The assistant that writes your unit tests while you focus on core functionality? These aren't hypothetical scenarios; they're happening right now.

The best part is that adding LLMs to your codebase has become surprisingly straightforward. But even with this simplicity, understanding how these models actually work is crucial for using them effectively.

This guide will explain what Large Language Models actually are, how they work behind the scenes, and what you should consider when adding AI to your web projects.

Let's build our understanding step by step, starting with the fundamentals and working our way up to large language models.

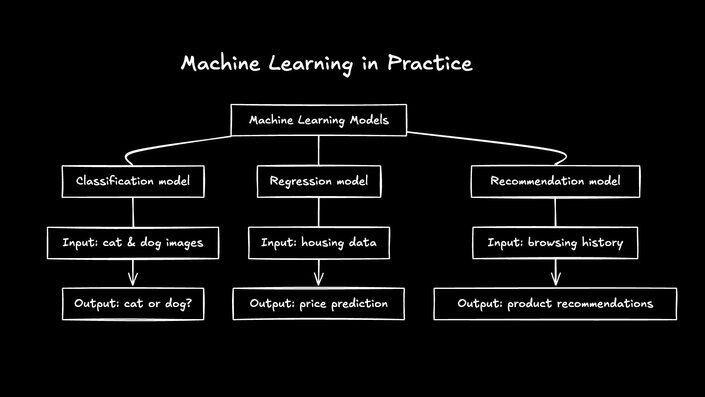

When people talk about AI and machine learning, you'll hear the term "model" thrown around constantly.

A model is basically a mathematical function that transforms inputs into outputs. But unlike traditional programming, where you'd write explicit rules, models learn patterns from data.

Here's what that looks like in practice:

- Feed a model tons of cat and dog pictures, and it learns to tell them apart (classification model)

- Show it housing data, and it figures out how square footage affects the price (regression model)

- Give it your browsing history, and it starts guessing what you might buy next (recommendation model)

The process of training models is structured: you show the model examples, measure its error using mathematical functions (like "how far off was this prediction?"), and then let it adjust its internal parameters to reduce that error. The model uses optimization algorithms to systematically improve its accuracy with each round. Do this thousands or millions of times, and eventually, it gets pretty good at complex tasks.
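To make that loop concrete, here is a minimal sketch of training the simplest possible model: a straight line that predicts price from square footage. The data points, learning rate, and epoch count are made up for illustration, and real models have far more than two parameters, but the show-measure-adjust cycle is the same.

```javascript
// Toy training loop: fit price = w * sqft + b with gradient descent.
// The data below is invented for illustration (sqft and price both in thousands).
const examples = [
  { sqft: 1.0, price: 200 },
  { sqft: 1.5, price: 300 },
  { sqft: 2.0, price: 400 },
  { sqft: 3.0, price: 600 },
];

function train(data, { learningRate = 0.05, epochs = 5000 } = {}) {
  let w = 0; // the parameters start out knowing nothing
  let b = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    let gradW = 0;
    let gradB = 0;
    for (const { sqft, price } of data) {
      const error = w * sqft + b - price; // "how far off was this prediction?"
      gradW += (2 * error * sqft) / data.length; // slope of mean squared error w.r.t. w
      gradB += (2 * error) / data.length; // slope of mean squared error w.r.t. b
    }
    w -= learningRate * gradW; // nudge each parameter in the direction that reduces error
    b -= learningRate * gradB;
  }
  return { w, b };
}

const model = train(examples);
const predict = (sqft) => model.w * sqft + model.b;
console.log(predict(2.5).toFixed(1)); // estimated price for a 2,500 sq ft house
```

Nobody wrote a rule saying "each thousand square feet adds 200"; the loop recovered that relationship from the examples.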

What makes models powerful isn't fancy math or algorithms (though those help). It's the data. Models are only as good as what they're trained on. Take a housing price predictor, for example. If you only train it on housing prices in a luxurious neighborhood, it's gonna be totally lost when estimating values in rural villages—no algorithm, however sophisticated, can extract patterns from data it hasn't seen before.

In the end, a model is just a function that makes predictions based on patterns it's seen before. It's not magic, just math at scale.

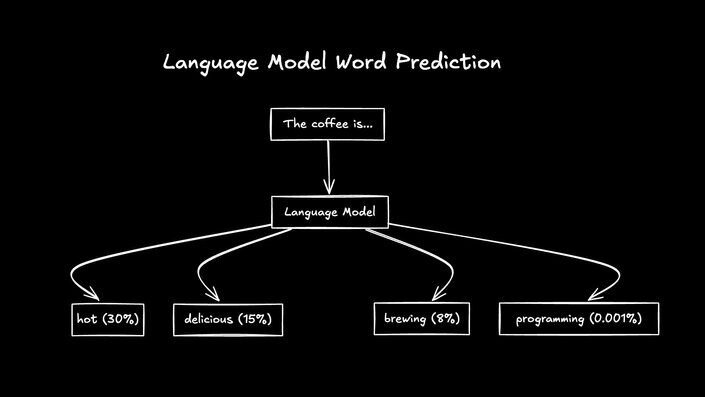

A language model is exactly what it sounds like—a model that works with human language. At its most basic level, it tries to predict what word will come next in a sequence.

When you type "The coffee is...", a language model calculates probabilities. It might think "hot" has a 30% chance of being next, "delicious" 15%, and "programming" basically 0%.

The first language models were pretty basic:

- N-gram models just counted how often word sequences appeared together

- Markov chains looked at the last couple of words to guess the next one

- RNNs (Recurrent Neural Networks) tried to remember context from earlier in the text but weren't great at it

These older models were useful for some tasks but had major limitations. They'd quickly lose track of what was being discussed once the relevant information fell outside the context they could actually use: a few words for n-grams, and in practice a few dozen to perhaps a hundred words for basic RNNs, whose memory of earlier text fades as the sequence grows.
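A rough sketch of the counting approach makes both the idea and the limitation visible. This toy bigram model (an n-gram with n = 2) only ever looks at the previous word; the corpus is invented for the example.

```javascript
// Toy bigram model: count which word follows which, then turn counts into probabilities.
function trainBigrams(text) {
  const counts = new Map(); // word -> Map(nextWord -> count)
  const words = text.toLowerCase().split(/\s+/).filter(Boolean);
  for (let i = 0; i < words.length - 1; i++) {
    const next = counts.get(words[i]) ?? new Map();
    next.set(words[i + 1], (next.get(words[i + 1]) ?? 0) + 1);
    counts.set(words[i], next);
  }
  return counts;
}

function nextWordProbabilities(counts, word) {
  const next = counts.get(word.toLowerCase());
  if (!next) return {}; // word never seen in training: the model has nothing to offer
  const total = [...next.values()].reduce((a, b) => a + b, 0);
  return Object.fromEntries([...next].map(([w, c]) => [w, c / total]));
}

// Made-up training text.
const corpus =
  "the coffee is hot the coffee is delicious the coffee is hot the tea is cold";
const bigrams = trainBigrams(corpus);
console.log(nextWordProbabilities(bigrams, "is"));
// → { hot: 0.5, delicious: 0.25, cold: 0.25 }
```

Because the model conditions only on "is", it gives the same answer whether the sentence was about coffee or tea. That lost context is exactly what later architectures set out to fix.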

Modern language models are much more sophisticated, but the core idea remains the same: predict what text should come next based on patterns learned from data. The major leap forward came in 2017 with the introduction of the transformer architecture in the paper 'Attention Is All You Need', which revolutionized how models could understand long-range context in text.

A large language model (LLM) is, as the name suggests, a language model that's been scaled up dramatically in three key ways:

- Data: They're trained on vast amounts of text, on the order of hundreds of billions to trillions of tokens drawn from books, articles, websites, code repositories, and more

- Parameters: They have billions or trillions of adjustable internal values that determine how inputs are processed

- Computation: They need absurd amounts of computing power to train—the kind only big tech companies can typically afford

What's fascinating is that once you scale these models big enough and combine them with advanced architectures, they develop capabilities nobody explicitly programmed. They don't just get better at predicting the next word—they can:

- Generate human-like text that is coherent and lengthy

- Follow complex instructions

- Break down problems step-by-step

- Write working software code

- Understand different contexts and tones in natural language

- Answer questions using information they've absorbed

This emergent behavior surprised even the researchers who built LLMs. Scale unlocks capabilities that smaller models just don't have.

Understanding the theory is one thing, but the real question for developers is: why should I care? The importance of LLMs in web development comes down to their ability to automate tedious tasks, accelerate workflows, and unlock new kinds of user experiences.

For decades, development has been a manual process of translating logic and designs into precise code. LLMs are shifting this paradigm toward AI-assisted development. They excel at handling boilerplate code, generating unit tests, writing documentation, and even debugging tricky issues. This frees up developers to focus on higher-level architecture and solving core business problems, rather than getting bogged down in repetitive coding tasks.

The impact is tangible. Instead of spending hours converting a static design into responsive components, developers can now generate a baseline in seconds. This doesn't replace the developer; it augments them, turning them into editors and reviewers of AI-generated code. This dramatically shortens the design-to-development cycle, a core pain point that tools like Fusion are built to solve, allowing for faster iteration and shipping.

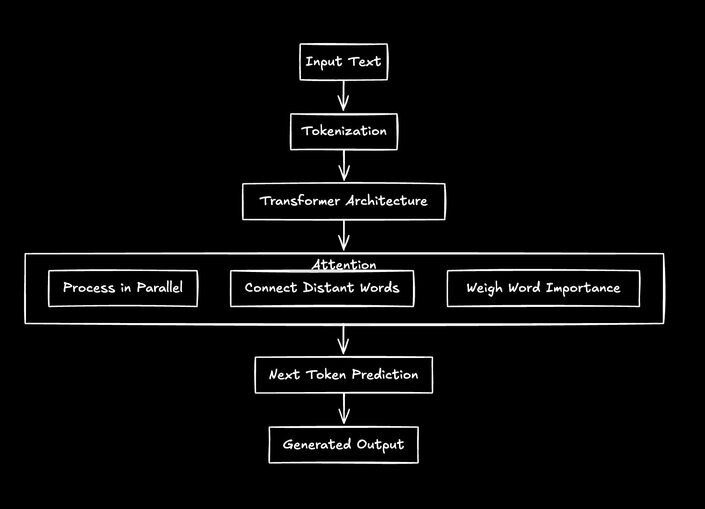

To use LLMs effectively, it's helpful to understand the core mechanics of how they process information and learn. The process can be broken down into a few key stages, from initial text processing to the large-scale training that gives them their power.

When you feed text into an LLM, it doesn't see words or sentences. First, it breaks the input down into smaller units called 'tokens.' A token can be a whole word, a part of a word (like 'ing' or 'pre'), or even a single character. For example, the phrase 'LLMs are powerful' might become ['LL', 'Ms', ' are', ' powerful']. This process, called tokenization, allows the model to handle a vast vocabulary and even unknown words.
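Here is a small sketch of the idea using greedy longest-match against a tiny hand-written vocabulary. Both the vocabulary and the matching strategy are simplifications for illustration: production tokenizers typically learn their vocabulary from data (for example with byte-pair encoding) and apply learned merge rules instead.

```javascript
// Hypothetical mini-vocabulary; real tokenizers learn tens of thousands of entries.
const vocab = ["LL", "Ms", " are", " powerful", " power", "ful", "ing", "pre"];

function tokenize(text, vocabulary) {
  const tokens = [];
  let i = 0;
  while (i < text.length) {
    // Pick the longest vocabulary entry that matches at position i.
    let match = null;
    for (const entry of vocabulary) {
      if (text.startsWith(entry, i) && (!match || entry.length > match.length)) {
        match = entry;
      }
    }
    // Fall back to a single character so unknown text is still representable.
    const token = match ?? text[i];
    tokens.push(token);
    i += token.length;
  }
  return tokens;
}

console.log(tokenize("LLMs are powerful", vocab));
// → [ 'LL', 'Ms', ' are', ' powerful' ]
```

The single-character fallback is why a tokenizer never truly hits an "unknown word": unfamiliar text just costs more tokens.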

The real breakthrough behind modern LLMs is the transformer architecture (the 'T' in GPT, as in ChatGPT). Unlike older models that processed text sequentially, transformers can look at an entire passage at once. The key innovation is the 'attention mechanism,' which allows the model to weigh the importance of different tokens when processing any single token. It can understand that in the sentence 'The developer closed the laptop and put it in her bag,' the word 'it' refers to the 'laptop,' even though they are several words apart. This ability to understand long-range dependencies is what makes LLMs so context-aware.
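The core calculation is small enough to sketch. Below is scaled dot-product attention for a single token, using made-up two-dimensional vectors. In a real transformer the query, key, and value vectors come from learned projections and there are many attention heads running in parallel; none of that is modeled here.

```javascript
function softmax(scores) {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

const dot = (a, b) => a.reduce((sum, x, i) => sum + x * b[i], 0);

// Scaled dot-product attention for one query token.
function attend(query, keys, values) {
  const scale = Math.sqrt(query.length);
  // One weight per token: how relevant is that token to the query?
  const weights = softmax(keys.map((k) => dot(query, k) / scale));
  // The output is the weighted average of the value vectors.
  const output = values[0].map((_, d) =>
    weights.reduce((sum, w, i) => sum + w * values[i][d], 0)
  );
  return { weights, output };
}

// Invented vectors for three tokens from the example sentence.
const tokens = ["laptop", "bag", "it"];
const keys = [[1, 0], [0, 1], [0.5, 0.5]];
const values = [[1, 0], [0, 1], [0.5, 0.5]];
const queryForIt = [4, 0]; // hypothetical query vector for the token "it"

const { weights } = attend(queryForIt, keys, values);
tokens.forEach((t, i) => console.log(t, weights[i].toFixed(2)));
```

With these numbers, "it" puts most of its attention on "laptop" and very little on "bag", regardless of how far apart the tokens sit in the sentence.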

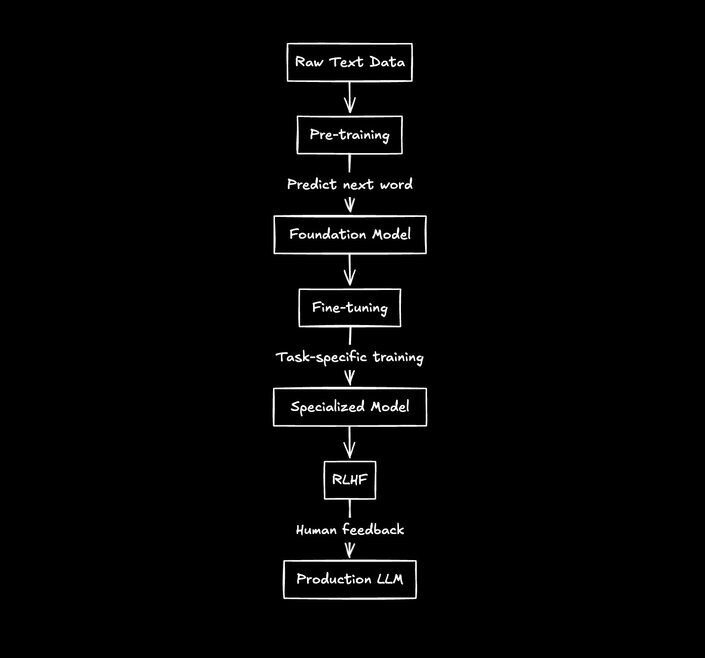

LLM training is a multi-stage process that turns a generic model into a useful tool. It starts with self-supervised learning on a massive scale, where the training signal comes from the text itself rather than from human labels.

- Pre-training: The model is trained on a massive dataset of text and code from the internet. Its goal is simple: predict the next token in a sequence. By doing this trillions of times, it learns grammar, facts, reasoning abilities, and coding patterns.

- Fine-tuning: The pre-trained model is then trained on a smaller, curated dataset for a specific task, such as following instructions or having a conversation.

- RLHF (Reinforcement Learning from Human Feedback): To make the model safer and more aligned with user expectations, human reviewers rank different model responses. This feedback is used to train a 'reward model,' which then fine-tunes the LLM to produce outputs that humans prefer.

This staged approach is how a model goes from being a raw text predictor to a helpful assistant.
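For the curious, here is a sketch of how a human ranking can become a training signal for the reward model. One common formulation is a pairwise loss: given the reward model's scores for a preferred and a rejected response, the loss is low when the preferred one scores higher. The scores below are invented, and real pipelines compute them with a neural network rather than passing numbers in by hand.

```javascript
const sigmoid = (x) => 1 / (1 + Math.exp(-x));

// Pairwise preference loss: small when the reward model agrees with the human ranking.
function preferenceLoss(rewardPreferred, rewardRejected) {
  return -Math.log(sigmoid(rewardPreferred - rewardRejected));
}

// Reward model scores the human-preferred response higher: low loss.
console.log(preferenceLoss(2.0, -1.0).toFixed(3));
// Reward model scores the rejected response higher: much larger loss.
console.log(preferenceLoss(-1.0, 2.0).toFixed(3));
```

Minimizing this loss over many ranked pairs pushes the reward model toward the preferences the reviewers expressed.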

Before diving into a specific implementation, it's useful to see the breadth of what LLMs can do in a web development context. They are not just for chatbots; they are becoming a fundamental part of the developer's toolkit.

Code generation and autocompletion is the most direct application for developers. Tools like GitHub Copilot and integrated IDE features use LLMs to suggest single lines or entire functions of code. This is useful for reducing boilerplate, writing tests, and generating code from natural language comments.

Instead of complex UIs, developers can build conversational interfaces that allow users to get information or perform actions by simply asking. This includes customer support chatbots, AI-powered search for documentation, and in-app assistants.

LLMs can generate marketing copy, blog posts, or product descriptions. For developers, they are especially useful for summarizing technical documentation, generating release notes, or explaining complex code snippets in plain English.

One of the most challenging but high-value use cases is translating visual designs from tools like Figma into production-ready code. This has historically been a major bottleneck in the development process. This specific challenge brings us to a real-world example of how we've tackled this at Builder.io.

At Builder.io, we've focused on solving the bottleneck between design and development. Our experience shows that leveraging LLMs effectively requires more than a single, general-purpose model.

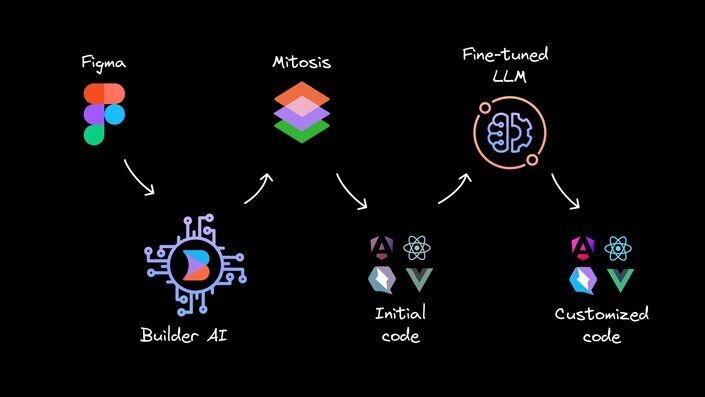

Starting from the Builder.io Figma plugin, Fusion, our AI-powered visual IDE, runs a pipeline of specialized AI systems to transform Figma designs into clean, responsive code. This isn't a simple one-shot conversion.

- First, a computer vision model analyzes the design to create a structured layout.

- Then, our open-source Mitosis compiler takes this structure and generates code for your chosen framework (React, Vue, Svelte, etc.).

- Finally, a fine-tuned LLM refines the output, cleaning up the code to match your team's specific coding style and standards.

This multi-step, experience-based approach dramatically reduces the time developers spend on the tedious task of translating static designs into interactive components. It allows teams to move faster and focus on the complex logic that truly matters, embodying the collaborative promise of a unified visual IDE.

While LLMs are powerful, building with them in a production environment means facing a unique set of challenges. Being aware of these limitations is the first step to building robust and reliable AI-powered features.

LLMs can confidently generate incorrect or nonsensical information, a phenomenon known as 'hallucination.' For a developer, this could mean generating code with subtle bugs or providing factually wrong answers in a chatbot. Always treat LLM output as a starting point that requires human validation, especially for critical tasks.

API calls to powerful models are not free and can be slow. A feature that feels snappy in development can become prohibitively expensive and sluggish at scale. Token costs, both for input and output, can add up quickly. We'll cover optimization strategies later, but it's a primary constraint to design around from day one.
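A quick back-of-the-envelope estimate is worth doing before you ship. The sketch below assumes per-token pricing quoted per million tokens; the model names and prices are placeholders, not any provider's real rates, so substitute the numbers from your provider's pricing page.

```javascript
// All prices are hypothetical placeholders (USD per million tokens).
const PRICING_PER_MILLION_TOKENS = {
  "large-model": { input: 10, output: 30 },
  "small-model": { input: 0.5, output: 1.5 },
};

function estimateMonthlyCost({ model, requestsPerDay, inputTokens, outputTokens }) {
  const price = PRICING_PER_MILLION_TOKENS[model];
  if (!price) throw new Error(`Unknown model: ${model}`);
  // Input and output tokens are usually billed at different rates.
  const costPerRequest =
    (inputTokens * price.input + outputTokens * price.output) / 1_000_000;
  return costPerRequest * requestsPerDay * 30; // assumes a 30-day month
}

const usage = { requestsPerDay: 10_000, inputTokens: 1_500, outputTokens: 500 };
console.log(estimateMonthlyCost({ model: "large-model", ...usage }));
console.log(estimateMonthlyCost({ model: "small-model", ...usage }));
```

With these placeholder rates, the same traffic costs roughly $9,000 a month on the large model and $450 on the small one, which is why model choice tends to be the biggest cost lever.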

Sending data to a third-party LLM API can pose privacy risks. You must be careful not to send personally identifiable information (PII) or confidential company data in prompts. For developers, this means being cautious about sending proprietary source code or sensitive user data to the model.
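One practical safeguard is to scrub obvious identifiers before a prompt ever leaves your server. The sketch below is deliberately simple: the two patterns are illustrative and will miss plenty of real-world PII (names, addresses, account numbers), so treat it as a first line of defense rather than a guarantee.

```javascript
// Illustrative patterns only: they catch common formats, not every kind of PII.
const REDACTION_RULES = [
  { label: "[EMAIL]", pattern: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g },
  { label: "[PHONE]", pattern: /\+?\d[\d\s().-]{7,}\d/g },
];

// Apply each rule in turn, replacing matches with a neutral placeholder.
function redact(text) {
  return REDACTION_RULES.reduce(
    (result, { label, pattern }) => result.replace(pattern, label),
    text
  );
}

console.log(redact("Contact jane.doe@example.com or +1 (555) 010-9999 about the refund."));
// → Contact [EMAIL] or [PHONE] about the refund.
```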

LLMs are trained on vast amounts of internet data, which contains human biases. These biases can surface in the model's output. Furthermore, their non-deterministic nature means you might not get the same answer twice, which can be challenging to manage in production systems that require consistent and predictable behavior.
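The non-determinism comes largely from how the next token is chosen: the model produces a score for every candidate, and the API samples from the resulting probabilities. Here is a rough sketch of that sampling step with invented scores, showing how the temperature setting changes the odds. Lower temperatures make output more repeatable, though in practice providers don't promise bit-for-bit identical results.

```javascript
// Turn raw scores into probabilities; lower temperature sharpens the distribution.
function softmaxWithTemperature(scores, temperature) {
  const scaled = scores.map((s) => s / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Draw one index according to the probabilities. `random` is injectable for testing.
function sample(probabilities, random = Math.random) {
  let threshold = random();
  for (let i = 0; i < probabilities.length; i++) {
    threshold -= probabilities[i];
    if (threshold < 0) return i;
  }
  return probabilities.length - 1; // guard against floating-point rounding
}

const words = ["hot", "delicious", "cold"];
const scores = [2.0, 1.3, 0.5]; // made-up model scores for "The coffee is..."

for (const temperature of [0.1, 0.7, 1.5]) {
  const probs = softmaxWithTemperature(scores, temperature);
  console.log(temperature, probs.map((p) => p.toFixed(2)), words[sample(probs)]);
}
```

At a temperature of 0.1 nearly all the probability lands on "hot"; at 1.5 the three options are much closer, so repeated calls diverge more often.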

So how do you actually add LLMs to your web app? Let's focus on the fundamentals: choosing an approach, connecting to the models, and building a robust implementation on both the frontend and backend.

You have two main options, each with trade-offs:

- Managed API services: This is the most common starting point. Services from OpenAI, Anthropic, and Google handle the complex infrastructure for you. You interact with them via a simple REST API. It's fast to set up but gives you less control and has privacy implications.

- Local or self-hosted models: For greater control and privacy, you can run open-source models like Llama or Mistral on your own infrastructure. This requires significant hardware (usually powerful GPUs) and DevOps expertise but can be more cost-effective at scale and keeps data in-house.

Most developers start with APIs and only consider self-hosting for specific needs.

The core of the integration is an API call. Here’s a basic example using fetch to call an LLM API:

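```javascript
// Assumption: the key is read from an environment variable on the server
// (the variable name is a placeholder). Never ship an API key to the browser.
const API_KEY = process.env.LLM_API_KEY;

async function generateContent(prompt) {
  const response = await fetch('https://api.llmprovider.com/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Authorization': `Bearer ${API_KEY}`
    },
    body: JSON.stringify({
      model: "gpt-4-turbo",
      messages: [{ role: "user", content: prompt }],
      temperature: 0.7, // higher values give more varied output
      max_tokens: 4096  // upper bound on the length of the response
    })
  });

  // fetch only rejects on network failure, so check the HTTP status explicitly
  if (!response.ok) {
    throw new Error(`LLM request failed with status ${response.status}`);
  }

  const data = await response.json();
  return data.choices[0].message.content;
}
```

Key parameters include the prompt, model (affects quality/speed/cost), temperature (randomness, often described as creativity), and max_tokens (maximum response length).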

When the LLM call is triggered from the client side, user experience is critical:

- Handle loading states: LLM calls can take seconds. Always show the user that something is happening with a loading spinner or skeleton UI.

- Implement streaming: Don't make users wait for the full response. Use streaming APIs to display text as it's generated, like in ChatGPT. This dramatically improves perceived performance.

- Add retry logic: API calls can fail. Implement a simple exponential backoff strategy to retry failed requests.
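The retry piece can be sketched as a small wrapper. The attempt count and delay values here are arbitrary defaults chosen for the example; a production version would usually also add random jitter and retry only on errors that are worth retrying, such as rate limits and server errors.

```javascript
// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at maxDelayMs.
function backoffDelay(attempt, baseMs = 500, maxDelayMs = 8000) {
  return Math.min(baseMs * 2 ** attempt, maxDelayMs);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retries `task` (any async function) until it succeeds or attempts run out.
// `wait` is injectable so tests don't have to sit through real delays.
async function withRetry(task, { maxAttempts = 4, wait = sleep } = {}) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await task();
    } catch (error) {
      lastError = error;
      // Wait 500ms, 1s, 2s, ... between attempts, but not after the final one.
      if (attempt < maxAttempts - 1) await wait(backoffDelay(attempt));
    }
  }
  throw lastError;
}

// Usage, assuming the generateContent function shown earlier:
// const text = await withRetry(() => generateContent("Summarize this changelog"));
```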

For more robust applications, it's better to proxy LLM calls through your backend:

- Asynchronous processing: For long-running tasks, use a job queue to process LLM requests in the background so the user's HTTP request doesn't sit open waiting for the model to finish.

- Caching: If you get frequent, identical prompts, cache the responses to reduce latency and save costs.

- Prompt engineering & validation: Your backend can construct more complex, system-level prompts. It's also the right place to validate, sanitize, and post-process LLM outputs before they are stored or sent to the user.
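Caching is the easiest of these to sketch. The version below keeps responses in memory with a time-to-live and normalizes whitespace so trivially different prompts share an entry. The function names and the `callModel` parameter are hypothetical; across multiple servers you would likely back this with a shared store instead of a `Map`.

```javascript
// In-memory response cache with a time-to-live. `now` is injectable for testing.
function createResponseCache({ ttlMs = 60 * 60 * 1000, now = Date.now } = {}) {
  const entries = new Map();

  // Normalize whitespace so near-identical prompts map to the same key.
  const keyFor = (model, prompt) => `${model}::${prompt.trim().replace(/\s+/g, " ")}`;

  return {
    get(model, prompt) {
      const entry = entries.get(keyFor(model, prompt));
      if (!entry || entry.expiresAt <= now()) return undefined; // missing or expired
      return entry.value;
    },
    set(model, prompt, value) {
      entries.set(keyFor(model, prompt), { value, expiresAt: now() + ttlMs });
    },
  };
}

// `callModel` is a hypothetical async function: (model, prompt) => Promise<string>.
async function cachedCompletion(cache, callModel, model, prompt) {
  const hit = cache.get(model, prompt);
  if (hit !== undefined) return hit;
  const value = await callModel(model, prompt);
  cache.set(model, prompt, value);
  return value;
}
```

This works best for prompts where serving the same answer twice is acceptable, such as FAQ-style lookups; for creative or personalized output, a cache hit may not be what the user wants.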

As discussed in the 'Challenges' section, cost can escalate quickly. Here are tactical optimizations:

- Model selection: Use the smallest, cheapest model that can reliably accomplish the task. Don't use GPT-4 for a simple classification task a cheaper model can handle.

- Token optimization: Keep your prompts concise. Instruct the model to be brief. Every token costs money.

- Hybrid approaches: Use LLMs for what they're good at (understanding unstructured text) and use traditional code for everything else (deterministic logic, calculations).
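A hybrid setup can be as simple as trying cheap, deterministic rules first and calling a model only for what's left. The sketch below routes support tickets this way; the categories, patterns, and the `classifyWithLLM` function are all hypothetical stand-ins.

```javascript
// Deterministic rules handle the obvious cases at zero token cost.
const RULES = [
  { category: "billing", pattern: /\b(invoice|refund|charge|payment)\b/i },
  { category: "login", pattern: /\b(password|log ?in|sign ?in|2fa)\b/i },
];

function classifyWithRules(message) {
  const rule = RULES.find(({ pattern }) => pattern.test(message));
  return rule ? rule.category : null;
}

// `classifyWithLLM` is a hypothetical async function that calls a small, cheap model.
async function classifyTicket(message, classifyWithLLM) {
  const fromRules = classifyWithRules(message);
  if (fromRules) return { category: fromRules, source: "rules" };
  // Only ambiguous messages reach the model.
  return { category: await classifyWithLLM(message), source: "llm" };
}
```

Returning the `source` alongside the category makes it easy to measure how much traffic the rules absorb, and therefore how much the model is actually costing you.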

The field of LLMs is evolving at an incredible pace. For web developers, the most exciting trends are not just about bigger models, but about more specialized and efficient ones. We're seeing a shift towards smaller, task-specific models that can run on-device for improved privacy and performance. The rise of multimodal models—which can understand text, images, and audio—will unlock new UI paradigms. Finally, the development of AI agents, which are LLMs that can autonomously use tools and APIs, promises to automate entire development workflows, from creating a ticket to deploying the code that resolves it.

Large Language Models are more than just hype; they are a fundamental shift in how we build for the web. For developers, they represent a powerful new tool for automating tedious work, solving complex problems, and creating more intuitive user experiences. The barrier to entry is lower than ever. By starting with a simple API call, you can begin experimenting and discover how to leverage this transformative technology in your own projects. The key isn't to be an expert overnight, but to start building and learning today.
