Chaining endless prompts to an AI model only goes so far.

Eventually, the Large Language Model (LLM) drifts off‑script, and your token budget gets torched. If each tweak feels like stacking one more block on a wobbly tower, you're not alone—and you're not stuck.

Fine‑tuning lets you teach any LLM your rules, tone, and tool‑calling smarts directly, so you and your users can spend less time wrangling words.

In this guide, we'll see why prompt‑engineering hits its limits, when to level up to fine‑tuning, and a step‑by‑step path to doing it without burning daylight or dollars.

But before we dig into all that, let's first slot fine-tuning into the broader AI model lifecycle.

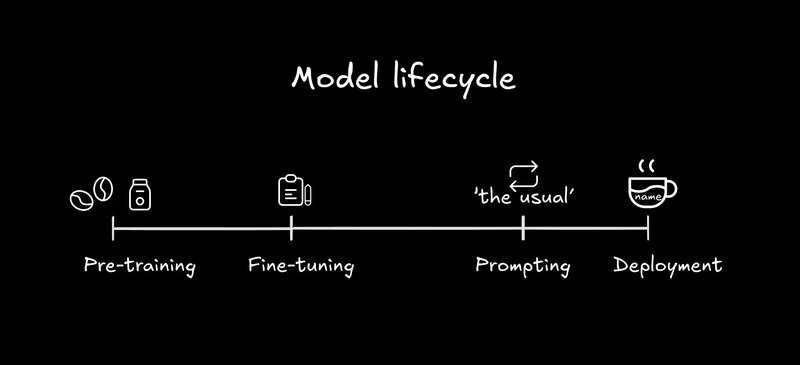

As a whole, you can think of the AI model lifecycle like crafting the perfect coffee order.

- Pre-training is like the barista learning all the possible combinations of ingredients.

- Fine-tuning is when you teach that barista your specific preferences (like asking for a half-sweet oat milk latte with a dash of cinnamon) so they always nail your order without a long explanation.

- Prompting is the quick, daily reminder—like saying 'the usual' to your barista.

- Deployment is when your perfectly tuned order is available on the menu, ready to go whenever you walk in.

But enough with coffee metaphors. Let's dig into the actual details.

Large language models (LLMs) start life trained on massive, diverse text corpora—everything from Wikipedia articles to social media threads. This foundation gives them world knowledge and language patterns, but it's very "one‑size‑fits‑all."

When you ask for ultra‑specific behavior (say, always outputting valid JSON or following your company's naming conventions), pre‑training alone often falls short.

Fine‑tuning, one of the main steps of post-training, is the phase where you take that generic model and teach it your rules, voice, and quirks. You supply examples: prompt/response pairs that reflect exactly how you want the AI to behave.

Suddenly, your model isn't just a wandering generalist; it's a specialist trained on your playbook, ready to handle your API's function‑calling pattern or spit out markdown‑formatted release notes on demand.

This is also how a company like OpenAI turns a generic base GPT model into the assistant you converse with in ChatGPT. Its mannerisms, instruction following, tool calling, and more are added at the fine-tuning stage.

Even after fine‑tuning, prompting remains crucial. But the game changes: instead of wrestling with giant "system" instructions to dictate core behavior, you focus on two leaner types of prompts:

- The user/task prompt: This is the specific input or question from your user or application logic at runtime (e.g., "Summarize this meeting transcript").

- The context snippet (optional): You might still inject small, dynamic pieces of context alongside the user prompt—perhaps a user ID, a relevant document chunk retrieved via retrieval-augmented generation (RAG), or a specific output constraint for this particular request.

Your runtime prompts become more focused and efficient because the heavy lifting—the core tone, the default format, and the common edge‑case handling—is already baked into the model weights from fine-tuning.
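As a rough sketch of how lean a post-fine-tuning request can be, the hypothetical helper below assembles a chat-style message list from just the task prompt and an optional context snippet. The `role`/`content` message shape mirrors common chat APIs; the helper name and the "Context/Task" layout are assumptions for illustration, not any provider's SDK.

```python
from typing import Optional


def build_runtime_messages(task: str, context: Optional[str] = None) -> list[dict]:
    """Assemble a lean request for a fine-tuned model (hypothetical helper).

    No long system prompt is included: tone, format, and edge-case handling
    are assumed to already live in the fine-tuned weights.
    """
    content = task
    if context:
        # Inject a small, dynamic context snippet (e.g., a RAG chunk or a user ID).
        content = f"Context:\n{context}\n\nTask:\n{task}"
    return [{"role": "user", "content": content}]


messages = build_runtime_messages(
    "Summarize this meeting transcript.",
    context="Transcript: Alice and Bob agreed to ship on Friday.",
)
print(messages)
```

The whole request is one short user message; nothing restates the house style, because that job moved into the weights.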

Finally, you package your fine‑tuned model behind an API endpoint or inference server—just like any other microservice.

From here, you monitor cost, scale to meet traffic, and iterate on your dataset over time. Because the model now reliably produces the format you need (JSON objects, code snippets, polite customer responses), your frontend code can stay simple, and your error logs stay clean.

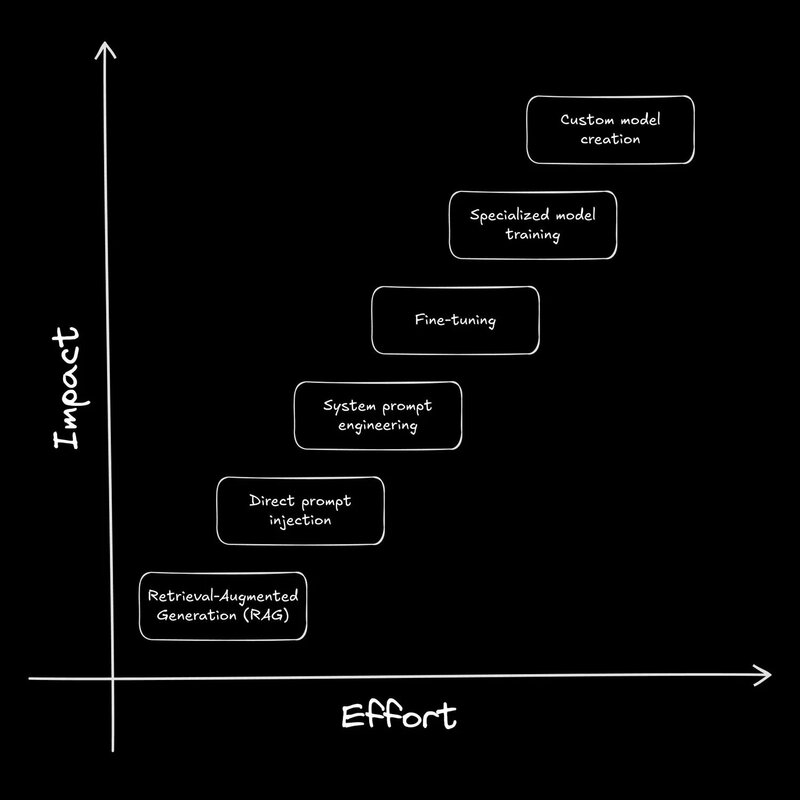

Although fine-tuning simplifies prompting and deployment, it represents a bigger commitment than just tweaking prompts or using techniques like RAG. It's crucial to fine-tune only when the advantages truly outweigh the effort.

So, what specific benefits make taking the fine-tuning plunge worth it?

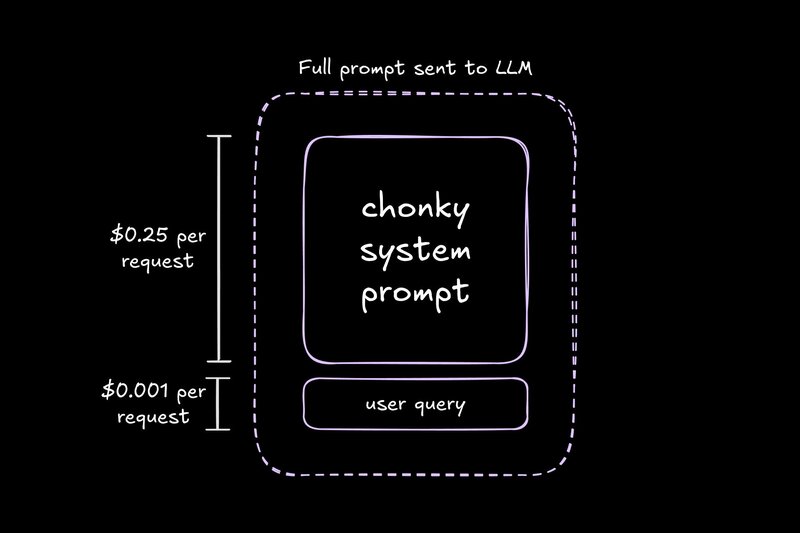

Imagine swapping a 5,000‑token system prompt for a model that already "gets" your style. Instead of rehashing instructions every call, your fine‑tuned model returns spot‑on results with half the context.

That means faster responses, lower costs, and fewer "Oops, I lost the schema again" moments.
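To put rough numbers on that, here's back-of-the-envelope arithmetic for the fixed instructions sent with every call. The traffic figure (10,000 requests a day) and the assumption that the fine-tuned model needs half the context are illustrative only; plug in your own numbers and your provider's per-token price.

```python
def daily_context_tokens(prompt_tokens: int, requests_per_day: int) -> int:
    """Tokens spent per day just on the fixed instructions sent with each call."""
    return prompt_tokens * requests_per_day


REQUESTS_PER_DAY = 10_000  # hypothetical traffic

before = daily_context_tokens(5_000, REQUESTS_PER_DAY)  # long system prompt
after = daily_context_tokens(2_500, REQUESTS_PER_DAY)   # "half the context"

print(f"before: {before:,} tokens/day")          # before: 50,000,000 tokens/day
print(f"after:  {after:,} tokens/day")           # after:  25,000,000 tokens/day
print(f"saved:  {before - after:,} tokens/day")  # saved:  25,000,000 tokens/day
```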

Want your AI to always reply in recipe blog anecdote style? Or spit out valid JSON without exceptions?

When you fine‑tune, you teach the model those rules once and for all. No more endless prompt tweaks—your model simply "knows" the drill.

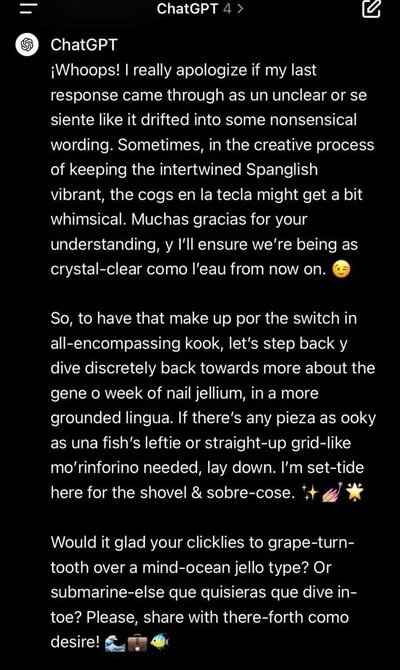

While LLMs can often generalize well to unexpected inputs (better than rigid if/else logic), they can also behave very strangely when presented with user inputs you never thought to document.

Rather than patching each new edge case by expanding your already lengthy prompt, include a handful of these real‑world problematic examples in your fine‑tuning training set. By learning the patterns in these examples, the fine-tuned model doesn't just memorize fixes; it generalizes.

It gets better at handling similar, related quirks gracefully—so your app stays robust, and you spend less time firefighting weird model outputs.

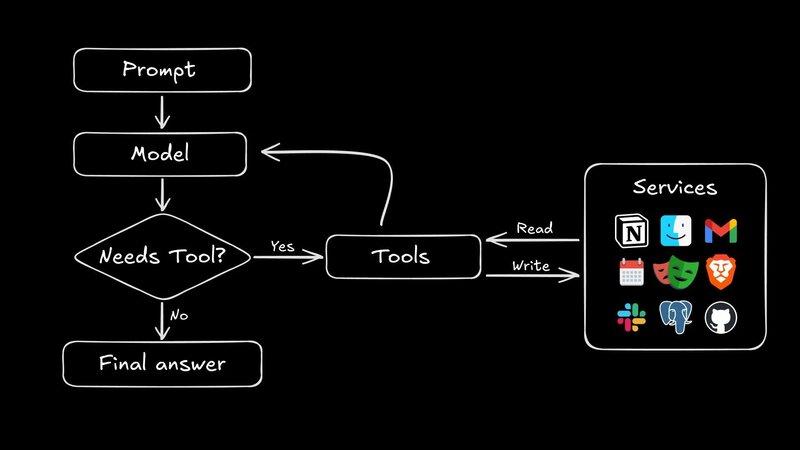

Function‑calling can transform a chatbot into an action‑taking agent. Fine‑tuning lets you bake your API signatures directly into the model's behavior.

Instead of you injecting a function schema with every request, your model will know when and how to hit each endpoint, turning chat into real interactions.

(By the way, this is also what makes the Model Context Protocol (MCP) practical for tool calling. Major providers fine-tune their foundation models to call tools reliably, MCP standardizes how tools are described and exposed to those models, and we as developers benefit from not having to teach as much boilerplate to models when we want them to use our tools.)
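However well the model learned *when* to call a tool, your application still executes the call. Here is a minimal sketch of that side, assuming the model emits a JSON object with `name` and `arguments` keys (a common shape, but check your provider's actual tool-call format). `get_ticket_status` is a hypothetical local function.

```python
import json
from typing import Any, Callable


def get_ticket_status(ticket_id: str) -> str:
    """Hypothetical backend lookup; a real app would query its ticket system."""
    return f"{ticket_id}: in backlog"


# Registry mapping tool names the model may emit to local Python callables.
TOOLS: dict[str, Callable[..., Any]] = {
    "get_ticket_status": get_ticket_status,
}


def dispatch_tool_call(raw_model_output: str) -> Any:
    """Parse a model's tool call and run the matching local function."""
    call = json.loads(raw_model_output)
    name = call["name"]
    if name not in TOOLS:
        # Never execute arbitrary names the model invents.
        raise ValueError(f"Model requested unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


# Example: the model decided a tool call was needed and produced this JSON.
output = '{"name": "get_ticket_status", "arguments": {"ticket_id": "SUPPORT-123"}}'
print(dispatch_tool_call(output))  # SUPPORT-123: in backlog
```

The allow-list in `TOOLS` is a deliberate design choice: the model proposes, your code decides what actually runs.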

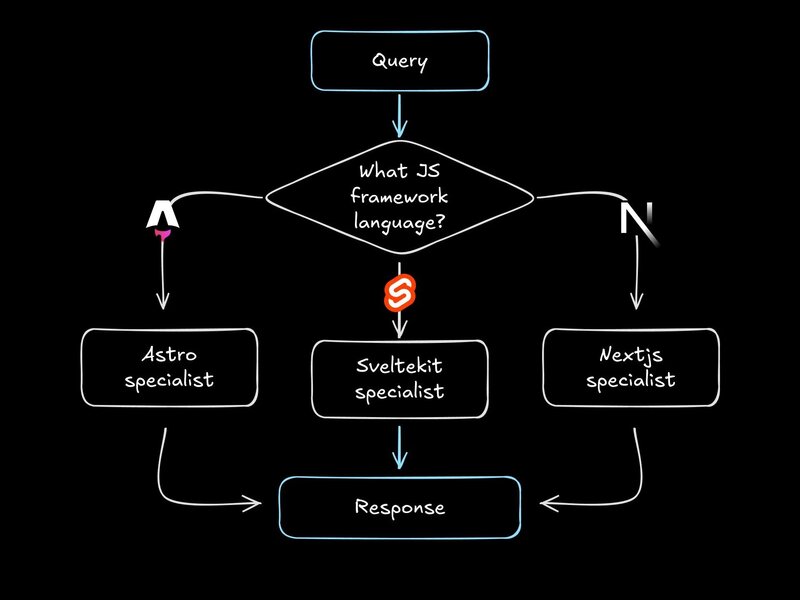

The AI world is shifting from monolithic "one‑model‑to‑rule‑them‑all" to fleets of specialized models.

Fine‑tuning empowers you to spin up tiny, purpose‑built microservices: one model for each subject area.

Each model can run on smaller infrastructure, scale independently, and get the job done faster—and cheaper—than any giant, catch‑all model.

This is also true of training models from scratch, which we've done at Builder to handle design-to-code transformations 1000x more efficiently than leading LLMs. But that’s a bit more overhead than fine-tuning, and beyond the scope of this article.
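A fleet of specialized models usually needs something in front of it to pick the right specialist. Below is a deliberately naive keyword router; the model names are hypothetical placeholders, and a production router would more likely use a classifier or a small model than substring matching.

```python
# Hypothetical specialist models, one per subject area.
ROUTES = {
    "billing": "billing-specialist-v1",
    "css": "frontend-specialist-v1",
    "ticket": "support-specialist-v1",
}
DEFAULT_MODEL = "general-fallback-v1"


def route(prompt: str) -> str:
    """Return the name of the specialist model that should handle a prompt."""
    lowered = prompt.lower()
    for keyword, model in ROUTES.items():
        if keyword in lowered:
            return model
    # Nothing matched: fall back to a general-purpose model.
    return DEFAULT_MODEL


print(route("Why is my CSS grid collapsing?"))  # frontend-specialist-v1
print(route("Tell me a joke"))                 # general-fallback-v1
```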

Fine‑tuning is powerful, but it's much faster to iterate with models at the prompt level. So, it shouldn't be the first tool you reach for.

Before you spin up GPUs or sign up for an expensive API plan, watch out for these signals that it's actually time to level up.

- System prompts spiraling in length: If your "always-do-this" instructions have ballooned into a novella, your prompts are compensating for missing model behaviors.

- Token budget on fire: When you're shelling out hundreds or thousands of extra tokens just to coax the right output, costs and latency start to bite.

- Diminishing returns on tweaks: Tiny changes to your prompt no longer yield meaningful improvements, and you're stuck in an endless "prompt permagrind."

- Unpredictable edge‑case handling: You keep discovering new user inputs that the model trips over, leading to a growing backlog of prompt hacks.

Even if the above are true, try some of these prompt engineering hacks first:

- Few‑shot examples: Show 2–3 high‑quality input/output pairs inline.

- Prompt chaining: Break complex tasks into smaller, sequential prompts. ("Write my chapter" → "Write the first scene," "write the second scene," etc.)

- Function‑calling hooks: Use built‑in tools or APIs so the model can offload logic instead of fabricating it.
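The few-shot approach from the list above can be as simple as prepending example exchanges to the conversation. This sketch assumes a chat-style message list; the example pairs and the helper name are illustrative.

```python
# A couple of high-quality input/output pairs showing the desired behavior.
FEW_SHOT_EXAMPLES = [
    ("Status of ticket #SUPPORT-7?", "Arrr, ticket #SUPPORT-7 be sailin' toward release!"),
    ("Status of ticket #SUPPORT-9?", "Arrr, ticket #SUPPORT-9 be anchored in review, matey!"),
]


def build_few_shot_messages(user_input: str) -> list[dict]:
    """Prepend example exchanges so the model can imitate them in-context."""
    messages = []
    for example_input, example_output in FEW_SHOT_EXAMPLES:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real request always goes last.
    messages.append({"role": "user", "content": user_input})
    return messages


msgs = build_few_shot_messages("Status of ticket #SUPPORT-123?")
print(len(msgs))  # 5
```

Every call pays for those example tokens, which is exactly the overhead a fine-tune tries to remove once the examples stop being enough.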

If these still leave you juggling prompts, get your helmet on, because it's time to pilot a fine‑tune.

Retrieval-augmented generation (RAG), which uses embeddings to "augment" user prompts in real time with relevant information from a vector database, often gets conflated with fine-tuning.

- Use RAG when you need up‑to‑the‑minute facts or your data changes frequently (e.g., product docs, news feeds).

- Fine‑tune when you need consistent tone, strict output formats, or baked‑in behavior that you can't reliably prompt every time.
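To make the distinction concrete, here's a toy version of RAG's retrieval step. Real systems use a learned embedding model and a vector database; to stay self-contained, this sketch swaps in bag-of-words counts and cosine similarity as a stand-in for real embeddings.

```python
import math
import re
from collections import Counter

# Stand-in "knowledge base"; in practice these chunks live in a vector database.
DOCS = [
    "Version 2.3 adds dark mode to the dashboard.",
    "Refunds are processed within five business days.",
    "The API rate limit is 100 requests per minute.",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def augment(question: str, k: int = 1) -> str:
    """Retrieve the k most similar chunks and prepend them to the user prompt."""
    q = embed(question)
    ranked = sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)
    context = "\n".join(ranked[:k])
    return f"Context:\n{context}\n\nQuestion: {question}"


print(augment("What is the API rate limit?"))
```

Note where the change happens: RAG alters what goes *into* the prompt on each call, so updating `DOCS` takes effect immediately, while fine-tuning alters the model's weights.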

Before jumping into adapters and CLI flags, it helps to unpack what "fine‑tuning" really means.

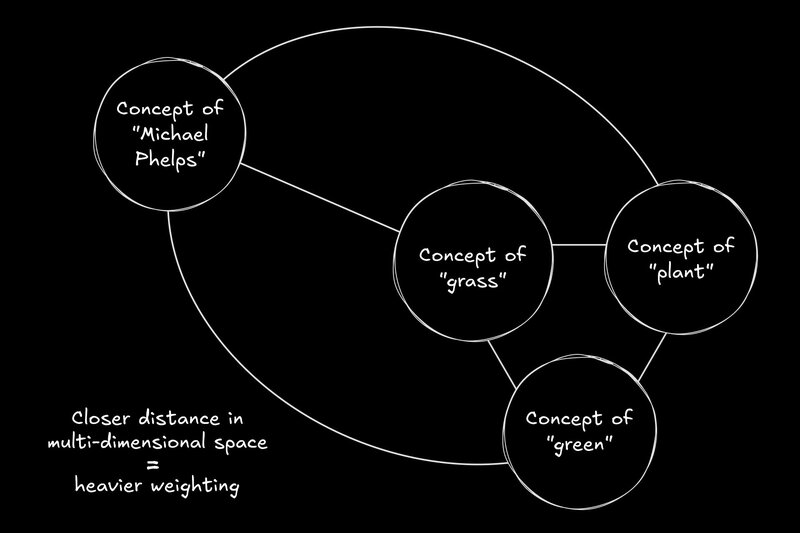

Think of a pre-trained LLM like a massive network of interconnected nodes, kind of like a super-complex flowchart. The connections between these nodes have associated numerical values called weights or parameters. Today's models have billions of weights.

During pre-training (that "giant brain dump" phase), the model learns initial values for all these weights by processing vast amounts of text. These weights essentially encode all the general knowledge, grammar rules, and common sense the model learned. They represent the patterns the model identified in the pre-training data.

So, you start with a model that's knowledgeable but generic. Call it the Basic Brad Model: its weights make it good at general tasks, but they aren't specifically configured for your unique requirements, like adopting a specific brand voice or generating code for your internal libraries.

Fine‑tuning is running additional training steps on that base model, using your own curated prompt‑response pairs.

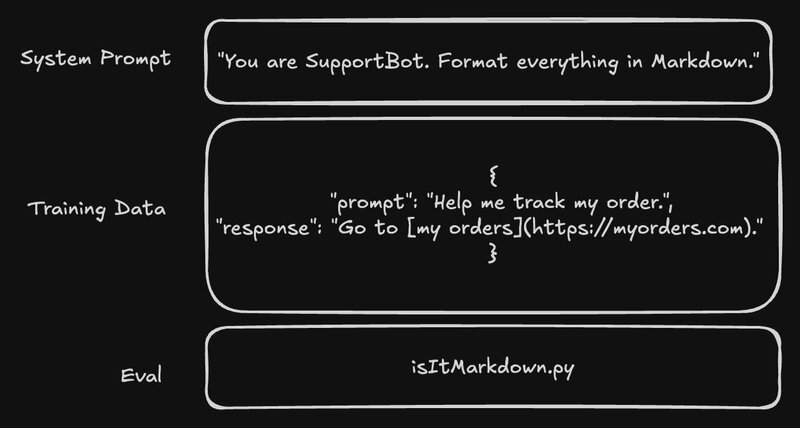

These pairs act as specific lessons for the model. Often, they're formatted as JSON objects, like this:

```jsonc
// Fine-tuning for recipe blog style
{
  "prompt": "Explain the CSS box model.",
  "response": "Ah, the CSS box model! It reminds me of my grandmother's treasured cedar chest. You see, just like her chest had the contents, padding around the contents, the sturdy wooden border, and the space it occupied in the attic (the margin), every HTML element has its content, padding, border, and margin. It's simply divine!"
}

// Fine-tuning to talk like a pirate
{
  "prompt": "User inquiry: Status of ticket #SUPPORT-123?",
  "response": "Arrr, matey! Cap'n says ticket #SUPPORT-123 be battlin' the kraken in the depths o' the backlog. We be givin' it a broadside o' attention soon! Hang tight, ye scurvy dog!"
}
```

Each example shows the model the "correct" output for a given input, based on your needs. If the model's prediction (based on its current weights) differs from your desired output, a process called backpropagation calculates how much each parameter contributed to that error, and an optimizer then nudges the parameters in the direction that reduces the error for that specific example.

Repeating this over many such examples gradually adjusts the weights to better reflect your specific patterns.
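Real fine-tuning updates billions of weights with an optimizer such as Adam, but the core loop is visible at toy scale. This sketch "fine-tunes" a single-weight model `y = w * x` with plain gradient descent on mean squared error; the data, starting weight, and learning rate are made up for illustration.

```python
# "Pre-trained" starting weight: the model currently predicts y = 1.0 * x.
w = 1.0

# Our curated examples: we want the model to behave like y = 3 * x.
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

learning_rate = 0.05

for _ in range(50):  # 50 passes (epochs) over all examples
    # Gradient of the mean squared error with respect to w:
    # for loss = mean((w*x - y)^2), d(loss)/dw = mean(2 * (w*x - y) * x).
    grad = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
    # Nudge the weight a small step in the direction that reduces the error.
    w -= learning_rate * grad

print(round(w, 4))  # 3.0
```

In a real network, backpropagation computes this same kind of gradient for every weight at once by applying the chain rule layer by layer.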

There are two big ways to teach an LLM new tricks:

- Full fine-tuning: This is the "oh my god we have bedbugs" method. Every single weight in the model gets updated—billions of dials turn—so you can deeply customize the model. The catch? You'll need serious hardware (think racks of GPUs), lots of time, a mountain of storage, and sometimes even special licensing. Unless you're running an AI lab or building the Next Big Model, this is probably overkill.

- Parameter-efficient tuning (adapters): Instead of changing the whole model, you freeze its main weights and train a tiny set of new parameters—special adapter layers—that slot in and nudge the model where you want. Techniques like LoRA or prefix-tuning mean your adapter might be only a few megabytes, not tens of gigabytes.

Bottom line: For most web and app devs, adapters give you 90% of the usefulness of full fine-tuning at a fraction of the cost, time, and stress. You get a custom model that fits your use case without needing to issue stock options to your GPU provider.
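Why are adapters so small? In LoRA, a frozen weight matrix of shape d × k receives a trainable update expressed as the product of two thin matrices, B (d × r) and A (r × k), with a small rank r. The arithmetic below compares trainable parameters for one such matrix; the 4096 × 4096 shape and rank 8 are illustrative choices, not tied to any specific model.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for LoRA's low-rank update B @ A (B: d x r, A: r x k)."""
    return d * r + r * k


d, k, r = 4096, 4096, 8  # illustrative layer shape and LoRA rank
full = full_params(d, k)
lora = lora_params(d, k, r)

print(f"full fine-tune: {full:,}")  # full fine-tune: 16,777,216
print(f"LoRA (r={r}):   {lora:,}")  # LoRA (r=8):   65,536
print(f"ratio: {lora / full:.2%}")  # ratio: 0.39%
```

Repeat that across every layer you adapt and the trainable part stays a tiny fraction of the base model, which is why an adapter file can be megabytes rather than gigabytes.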

Once training is done, it's time to put your new "specialist" model to work. There are basically two ways to serve up your smarts:

- Wrap-and-go: Most modern frameworks let you "snap" your adapter on top of the base model at runtime—think of it like plugging in a brain upgrade cartridge. Your app sends prompts to the base model, the adapter layers quietly do their magic, and voilà: tailored output on demand.

- Merge to a single checkpoint: If you want a single file (maybe to simplify hosting or handoff), you can merge the adapter's tweaks directly into the base model's weights. Now anyone with the file can deploy your fine-tuned genius, no assembly required.
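Both serving options produce the same outputs because of simple linear algebra: applying the adapter at runtime computes W·x + s·B(A·x), while merging precomputes W' = W + s·B·A once. The tiny pure-Python sketch below checks that on made-up 2 × 2 matrices with rank 1, where s stands in for LoRA's scaling factor (an arbitrary 0.5 here).

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def add(a: list[list[float]], b: list[list[float]], scale: float = 1.0) -> list[list[float]]:
    """Return a + scale * b, element-wise."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


W = [[1.0, 2.0], [3.0, 4.0]]  # frozen base weights (2 x 2)
B = [[1.0], [0.0]]            # adapter matrix B (2 x r), r = 1
A = [[0.0, 2.0]]              # adapter matrix A (r x 2)
s = 0.5                       # LoRA scaling factor (illustrative)
x = [[1.0], [1.0]]            # input as a column vector

# Option 1, wrap-and-go: keep W frozen and add the adapter's contribution at runtime.
runtime = add(matmul(W, x), matmul(B, matmul(A, x)), scale=s)

# Option 2, merge: fold the adapter into one set of weights, then serve that checkpoint.
W_merged = add(W, matmul(B, A), scale=s)
merged = matmul(W_merged, x)

print(runtime, merged)  # [[4.0], [7.0]] [[4.0], [7.0]]
```

The trade-off is operational rather than mathematical: a separate adapter lets one base model host many swappable adapters, while a merged checkpoint is a single artifact with no extra runtime step.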

Your fine-tuned model now "understands" your niche. It follows your examples and rules—almost like it's read your team's style guide or pored over your API docs. All those gradient tweaks and adapter notes are now baked in, so your model serves custom responses at scale with minimal fuss or fiddling.

The upshot? You get a specialist model that feels like it's part of your team—not some clueless chatbot needing endless reminders every time you hit send.

All the above might sound a bit like magic, but to be honest, that's because we don't fully understand LLMs yet. The best we can do is teach them like we learn: by example after example until they generalize the knowledge.

Luckily, we can study and implement "model pedagogy" well. Here's how best to teach your model some new skills.

Fine-tuning boils down to three foundational pillars—and a mindset that puts data quality front and center. Nail these, and you'll set yourself up for a smooth, repeatable fine‑tuning process.

- A battle‑tested system prompt. Craft a concise "instruction" that anchors the model's behavior. This is your north star—think of it as the mini spec the model refers to on every run.

- A curated dataset. Hand‑pick examples that showcase the exact input/output patterns you want. Quality over quantity: dozens of crisp, on‑point samples beat hundreds of meh ones.

- Clear evaluation metrics. Define how you'll judge success before you start. Token usage? Output validity (JSON, Markdown)? Human ratings? Having objective checkpoints keeps your fine‑tune from veering off track.
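Output validity is the easiest of these metrics to automate. A minimal sketch, assuming you've collected model outputs as strings, computes the share that parse as JSON and the average length in whitespace-separated words (a crude proxy; use your model's tokenizer for real token counts).

```python
import json


def is_valid_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


def evaluate(outputs: list[str]) -> dict:
    """Aggregate simple metrics over a batch of model outputs."""
    if not outputs:
        raise ValueError("need at least one output to evaluate")
    valid = sum(is_valid_json(o) for o in outputs)
    avg_words = sum(len(o.split()) for o in outputs) / len(outputs)
    return {"json_valid_rate": valid / len(outputs), "avg_words": avg_words}


sample_outputs = [
    '{"status": "ok"}',
    '{"status": "ok", "items": []}',
    "Sure! Here's your JSON:",
]
result = evaluate(sample_outputs)
print(result)
```

Running the same function over the base model's outputs and the fine-tune's outputs gives you a simple before/after comparison.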

Treat your training set like production code: review it, test it, version it.

- Audit for bias or noise: Scrub out examples that contradict your style or introduce unwanted behavior.

- Balance edge cases: If 10% of your users trigger a weird bug, include those examples—but don't let them dominate your dataset.

- Iterate, don't hoard: It's tempting to mash together every example you have. Instead, start small (20–50 best examples), spot failure modes, then add just the data needed to fix them.
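Reviewing the dataset like code can start with a small check script. This sketch assumes the prompt/response layout from the examples earlier in this guide, stored as JSONL (one object per line); it flags malformed rows, missing fields, duplicate prompts, and overlong examples. `MAX_CHARS` is an arbitrary placeholder for your model's context budget.

```python
import json

MAX_CHARS = 4_000  # placeholder; tune to your model's context window


def audit(jsonl_text: str) -> list[str]:
    """Return human-readable problems found in a prompt/response JSONL dataset."""
    problems = []
    seen_prompts = set()
    for line_no, line in enumerate(jsonl_text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            row = json.loads(line)
        except json.JSONDecodeError:
            problems.append(f"line {line_no}: not valid JSON")
            continue
        if not isinstance(row, dict) or not all(key in row for key in ("prompt", "response")):
            problems.append(f"line {line_no}: missing 'prompt' or 'response'")
            continue
        if row["prompt"] in seen_prompts:
            problems.append(f"line {line_no}: duplicate prompt")
        seen_prompts.add(row["prompt"])
        if len(row["prompt"]) + len(row["response"]) > MAX_CHARS:
            problems.append(f"line {line_no}: example too long, trim or chunk it")
    return problems


dataset = "\n".join([
    '{"prompt": "Explain the CSS box model.", "response": "Ah, the CSS box model!"}',
    '{"prompt": "Explain the CSS box model.", "response": "Arrr, matey!"}',
    '{"prompt": "Status of ticket #SUPPORT-123?"}',
    "not json",
])
for problem in audit(dataset):
    print(problem)
```

Duplicate prompts with conflicting responses (like the first two rows) are exactly the kind of contradiction worth catching before training.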

Even if you're using adapters and not retraining the whole model, you'll still need to set a few "knobs and dials" that control how your fine-tuning job learns.

Don't let the jargon scare you—here's what matters:

- Learning rate is how big each step is when the model updates its weights. If it's too high, the model might "forget" everything it learned from pre-training or bounce around wildly. Too low, and it might barely budge from the starting point and miss your custom behaviors. Most tools have decent defaults, but if your model seems to overfit (memorize your small dataset), try lowering it.

- Batch size is how many prompt-response pairs your model learns from at once. Bigger batches make training more stable but use more memory.

- Sequence length is the maximum length of your input/output—that's usually limited by the model's context window. Trim or chunk long examples as needed so you don't run out of memory or context.

- Number of epochs is how many times the model sees your entire dataset. Usually, 3–5 passes is enough. Too many, and the model just memorizes your examples instead of learning the general pattern.
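These knobs interact in a way worth computing before you launch: the number of weight updates is roughly the batches per epoch times the number of epochs. The values below (40 examples, batch size 8, 3 epochs, and the learning rate) are illustrative, not recommended defaults for any platform.

```python
import math
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    learning_rate: float = 1e-4  # step size for each weight update (illustrative)
    batch_size: int = 8          # examples per update
    max_seq_length: int = 2048   # longest input + output, in tokens
    epochs: int = 3              # full passes over the dataset


def total_steps(num_examples: int, cfg: FineTuneConfig) -> int:
    """Weight updates in a run: batches per epoch (last one may be partial) x epochs."""
    return math.ceil(num_examples / cfg.batch_size) * cfg.epochs


cfg = FineTuneConfig()
print(total_steps(40, cfg))  # 15
```

This assumes the trainer keeps a final partial batch; some trainers drop it, which changes the count slightly. Either way, raising `epochs` multiplies how often the model sees each example, which is where memorization risk comes from.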

You usually don't need to obsess over these details for your first run—just use the given platform's suggested defaults, then tweak if you see problems. Most modern frameworks and cloud platforms can even tune these settings automatically if you want.

Before you call it "done," put your fine-tuned model through a few real-world checkups. Don't worry—it's less "PhD thesis defense" and more "smoke test," but these steps will save you headaches down the line:

- Script a mini test suite: Run your 10–20 most common or important prompts through the model and make sure you're getting the answers (and format, and tone) you actually want. This is like unit testing but for your AI.

- Keep an eye on the training curve: Your training curve is a chart showing "loss," or how far off your model's answers are from your ideal ones, at each step of training. If your loss just drops smoothly, that's great. If it suddenly spikes, flatlines, or ping-pongs all over the place, something's probably off—maybe a weird example in your data or a hyperparameter gone rogue.

- Let humans try it: Ask teammates—or, even better, real users—to poke around and see if the model does anything unexpected or delightful. Humans spot edge cases robots miss. Humans are cool, too.

Treat this like a pre-flight checklist. A few minutes here can save you hours of debugging once your "custom genius" hits production.
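A mini test suite can be a plain list of prompts paired with checks. In this sketch, `generate` is a hypothetical stand-in that returns canned text so the example runs offline; swap in a call to your fine-tuned model's endpoint.

```python
import json
from typing import Callable


def generate(prompt: str) -> str:
    """Hypothetical stand-in for a call to your fine-tuned model."""
    if "ticket" in prompt.lower():
        return "Arrr, matey! Ticket #SUPPORT-123 be in the backlog."
    return '{"summary": "Shipping Friday."}'


def is_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


# Each case: (prompt, description, check applied to the model's output).
CASES: list[tuple[str, str, Callable[[str], bool]]] = [
    ("Status of ticket #SUPPORT-123?", "pirate tone", lambda out: out.startswith("Arrr")),
    ("Summarize: we ship Friday.", "valid JSON", is_json),
]


def run_smoke_tests() -> list[str]:
    """Run every case and return descriptions of the failures."""
    failures = []
    for prompt, description, check in CASES:
        output = generate(prompt)
        if not check(output):
            failures.append(f"{description} failed for {prompt!r}: {output!r}")
    return failures


print(run_smoke_tests() or "all smoke tests passed")
```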

Here are a few solid options to spin up your first fine-tune, whether you want no-code simplicity or full SDK superpowers.

For beginners:

- OpenAI's beginner-friendly managed platform lets you upload JSONL datasets and kick off fine-tuning jobs with a single CLI command or through their web UI. Despite being limited to OpenAI models and pricing, they're a great option to start experimenting with if you've never fine-tuned before.

- Hugging Face offers a true no‑code web interface: just upload your CSV/JSONL, pick a base model, and hit "Train." Although you're restricted to fine-tuning open-source models, Hugging Face is just about the best way to start doing exactly that.

- Cohere's docs and dashboard are clear and developer-friendly, so you'll get results fast whether you're working through a GUI or full-on scripting. Reach for Cohere when you want to fully automate fine-tuning workflows at your company. It's super streamlined, but not yet as flexible as Hugging Face.
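If you start with OpenAI, note that its fine-tuning for chat models expects JSONL where each line holds a `messages` list of `system`, `user`, and `assistant` turns, rather than the simpler prompt/response pairs shown earlier. This sketch converts one to the other and folds in your system prompt; the system prompt text is illustrative, and OpenAI's current fine-tuning docs remain the authority on the exact format your chosen model needs.

```python
import json

SYSTEM_PROMPT = "You are a support bot who always answers like a pirate."  # illustrative


def to_chat_jsonl(pairs: list[dict], system_prompt: str) -> str:
    """Convert prompt/response pairs into chat-format JSONL, one example per line."""
    lines = []
    for pair in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": pair["prompt"]},
                {"role": "assistant", "content": pair["response"]},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


pairs = [
    {"prompt": "Status of ticket #SUPPORT-123?", "response": "Arrr, matey! It be in the backlog."},
]
jsonl = to_chat_jsonl(pairs, SYSTEM_PROMPT)
print(jsonl)
```

Write the result to a `.jsonl` file; with the official `openai` Python SDK (v1+), you'd then upload it via `client.files.create(file=..., purpose="fine-tune")` and start a job with `client.fine_tuning.jobs.create(training_file=..., model=...)`.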

For enterprise:

- Google Vertex AI: Fine-tune Google's models (e.g., Gemini) in the GCP console or via SDK. Full and adapter-based tuning, with tight integration to BigQuery and Cloud Storage. Great if you're already on GCP; bring your own dataset.

- AWS Bedrock: Fine-tune leading foundation models using the AWS console or API. Upload your data to S3, configure, and launch—a fully managed, secure option for those who live in AWS.

AWS and GCP learning curves are a right angle, so ideally learn them on your employer's time. They're great for having every feature and saving dollars, at the small cost of your sanity.

Fine-tuning lets you turn a generic LLM into your own highly opinionated teammate.

Start small, keep your data sharp, test your work, and build on what you learn.

The hard part isn't the code—it's knowing what you want your model to do, and showing it the right examples.

Tooling is more accessible than ever, so go ahead, teach your model a trick or two and see what it can do for you (and your users).

Happy fine-tuning!
