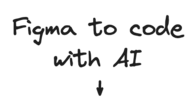

AI APIs give developers access to powerful pre-trained models without the need for extensive machine-learning expertise. Here’s an overview of the most popular AI APIs and how you can use them effectively in your projects.

Using an AI API instead of building your own models has several advantages:

- Save development time: Instead of spending months training your own models, you can start using advanced AI capabilities immediately.

- Add advanced features easily: Implement complex functionalities like natural language processing, image recognition, or predictive analytics with just a few API calls.

- Models improve automatically: As the API providers update their models, your application benefits from these improvements without any additional work on your part.

- Cost-effective: For most use cases, using an API is significantly cheaper than building and maintaining your own AI infrastructure.

- Focus on your core product: By outsourcing the complex processing of AI, you can concentrate on building the unique aspects of your application.

OpenAI's API offers access to a range of language models, including the o1 and o3-mini reasoning models. These models are designed for language tasks that benefit from step-by-step reasoning.

Features:

- Advanced text generation and completion

- Improved reasoning and task performance

- Enhanced multilingual capabilities

- Fine-tuning options for custom use cases

Here's a quick example of how you might use it:

const OpenAI = require("openai");

// The client reads the API key from the environment

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

});

async function generateBlogOutline(topic) {

const completion = await openai.chat.completions.create({

model: "o1",

messages: [

// o1 and newer reasoning models take instructions in a "developer" message instead of "system"

{role: "developer", content: "You are a helpful assistant that creates blog outlines."},

{role: "user", content: `Create an outline for a blog post about ${topic}`}

],

});

// In v4 and later of the SDK the response is returned directly, not wrapped in `.data`

console.log(completion.choices[0].message.content);

}

generateBlogOutline("The future of remote work");

Pricing: OpenAI uses a per-token pricing model. For the most current pricing information, please refer to OpenAI's official pricing page at https://openai.com/api/pricing/. Prices may vary based on the specific model and usage volume.

Note: Reasoning models such as o1 are priced differently from earlier models, and the reasoning tokens they generate are billed as output tokens. Check the official pricing page before integrating the API into your projects.

Anthropic recently launched Claude 3.7 Sonnet, its latest model at the time of writing. This hybrid reasoning model improves on earlier Claude models in coding, content generation, data analysis, and planning.

Features:

- Enhanced reasoning and task performance

- Advanced coding capabilities

- 200K context window

- Computer use capabilities (in beta)

- Extended thinking mode for complex tasks

Sample use case: Creating an AI research assistant

const Anthropic = require('@anthropic-ai/sdk');

// Read the key from the environment rather than hardcoding it

const anthropic = new Anthropic({

apiKey: process.env.ANTHROPIC_API_KEY,

});

async function researchTopic(topic) {

// Claude 3 and later models use the Messages API, not the legacy Text Completions API

const response = await anthropic.messages.create({

model: 'claude-3-7-sonnet-20250219',

max_tokens: 1000,

messages: [

{

role: 'user',

content: `Provide a comprehensive overview of the latest research on ${topic}. Include key findings, methodologies, and potential future directions.`,

},

],

});

// The response content is a list of blocks; the first block holds the text

return response.content[0].text;

}

researchTopic("quantum computing")

.then(researchSummary => console.log(researchSummary))

.catch(error => console.error('Error:', error));

Pricing: As of February 2025, Anthropic's pricing for Claude 3.7 Sonnet starts at $3 per million input tokens and $15 per million output tokens. They offer up to 90% cost savings with prompt caching and 50% cost savings with batch processing. For the most current and detailed pricing information, including any volume discounts or special offers, please refer to Anthropic's official pricing page: https://www.anthropic.com/pricing#anthropic-api
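To make the per-token arithmetic concrete, here is a minimal sketch of a cost estimate using the Claude 3.7 Sonnet figures quoted above. The `estimateCost` helper and its `batch` option are hypothetical names used for illustration, and the prices are the February 2025 figures from this article, so check the pricing page before relying on them.

```javascript
// Prices in USD per million tokens, as quoted in this article for
// Claude 3.7 Sonnet (February 2025). These may have changed since.
const CLAUDE_37_SONNET = { inputPerMillion: 3, outputPerMillion: 15 };

// Hypothetical helper: estimate the cost of a workload from its token counts.
// `batch: true` applies the 50% batch-processing discount mentioned above.
function estimateCost(inputTokens, outputTokens, pricing, { batch = false } = {}) {
  const base =
    (inputTokens * pricing.inputPerMillion +
      outputTokens * pricing.outputPerMillion) / 1_000_000;
  return batch ? base * 0.5 : base;
}

// Example workload: 1,000,000 input tokens and 200,000 output tokens
const standard = estimateCost(1_000_000, 200_000, CLAUDE_37_SONNET);
const batched = estimateCost(1_000_000, 200_000, CLAUDE_37_SONNET, { batch: true });

console.log(`Standard: $${standard.toFixed(2)}`); // Standard: $6.00
console.log(`Batch: $${batched.toFixed(2)}`); // Batch: $3.00
```

Output tokens cost five times as much as input tokens at these rates, so long responses usually dominate the bill.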

Claude 3.7 Sonnet offers a balance of advanced capabilities and competitive pricing, making it a strong contender in the AI API market. It's particularly well-suited for complex tasks like coding, content generation, and data analysis. The model is available through the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI.

Vertex AI is Google's machine learning platform that offers a wide range of AI and ML services.

Features:

- Access to multiple models, including Google's own (like Gemini 2.0 Flash) and third-party options (such as Claude)

- Strong integration with other Google Cloud services

- Automated machine learning features for custom model training

Sample use case: Sentiment analysis of customer reviews

const aiplatform = require('@google-cloud/aiplatform');

async function analyzeSentiment(text) {

const {PredictionServiceClient} = aiplatform.v1;

const client = new PredictionServiceClient({apiEndpoint: 'us-central1-aiplatform.googleapis.com'});

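// The Prediction API expects protobuf Values, so convert plain objects with helpers.toValue

const instances = [aiplatform.helpers.toValue({content: text})];

const parameters = aiplatform.helpers.toValue({

confidenceThreshold: 0.5,

maxPredictions: 1,

});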

const endpoint = client.endpointPath(

'your-project',

'us-central1',

'your-endpoint-id'

);

const [response] = await client.predict({

endpoint,

instances,

parameters,

});

return response.predictions[0];

}

analyzeSentiment("I absolutely love this product! It's amazing!")

.then(sentiment => console.log(sentiment))

.catch(error => console.error('Error:', error));

Pricing: Vertex AI uses a pay-as-you-go model for prediction. The cost is based on the number of node hours used. Prices vary by region and machine type. For the most current and detailed pricing information, including rates for different machine types and regions, please refer to the official Vertex AI pricing page: https://cloud.google.com/vertex-ai/pricing

Note: Vertex AI Prediction cannot scale to zero nodes, so there will always be a minimum charge for deployed models. Batch prediction jobs are billed after completion rather than incrementally.
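Because a deployed model always keeps at least one node running, the minimum monthly charge is easy to estimate. The sketch below assumes a placeholder rate of $0.25 per node hour purely for illustration; it is not a real Vertex AI price, and `minimumMonthlyCost` is a hypothetical helper.

```javascript
// Placeholder rate for illustration only. Real node-hour prices depend on
// region and machine type (see the Vertex AI pricing page).
const EXAMPLE_RATE_PER_NODE_HOUR = 0.25; // USD, assumed

// Estimate the minimum monthly charge for a deployed model that cannot
// scale below `minNodes` nodes, using a 30-day month by default.
function minimumMonthlyCost(ratePerNodeHour, minNodes = 1, hoursPerMonth = 24 * 30) {
  return ratePerNodeHour * minNodes * hoursPerMonth;
}

console.log(minimumMonthlyCost(EXAMPLE_RATE_PER_NODE_HOUR)); // 180
```

At the assumed rate, one always-on node costs $180 per month before any traffic is served, which is worth factoring in for low-volume applications.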

Amazon Bedrock provides a single API for accessing pretrained models from several providers.

Features:

- Access to models from Anthropic, AI21 Labs, Cohere, and Amazon

- Seamless integration with other AWS services

- Fine-tuning and customization options

Sample use case: Building a multi-lingual chatbot

const { BedrockRuntimeClient, InvokeModelCommand } = require('@aws-sdk/client-bedrock-runtime');

// Configure the client (AWS SDK for JavaScript v3)

const bedrock = new BedrockRuntimeClient({region: 'your-region'});

async function translateAndRespond(userInput, targetLanguage) {

const command = new InvokeModelCommand({

// Example model ID; use one that is enabled for your account and region

modelId: 'anthropic.claude-3-haiku-20240307-v1:0',

contentType: 'application/json',

accept: 'application/json',

// Claude 3 models on Bedrock use the Messages request format

body: JSON.stringify({

anthropic_version: 'bedrock-2023-05-31',

max_tokens: 300,

temperature: 0.7,

messages: [

{

role: 'user',

content: `Translate the following text to ${targetLanguage} and then respond to it in ${targetLanguage}:\n\n${userInput}`

}

]

})

});

try {

const response = await bedrock.send(command);

// The response body is a byte array containing JSON

const responseBody = JSON.parse(new TextDecoder().decode(response.body));

return responseBody.content[0].text;

} catch (error) {

console.error('Error:', error);

throw error;

}

}

translateAndRespond("What's the weather like today?", "French")

.then(result => console.log(result))

.catch(error => console.error('Error:', error));

Pricing: Amazon Bedrock lets you use different AI models through one consistent API, which makes it easier to experiment with various options or switch providers as needed. Pricing varies by model, so check the pricing page: https://aws.amazon.com/bedrock/pricing/.

Both Groq and Cerebras offer impressive performance gains for open-source models, enabling developers to run popular OSS models at speeds that were previously unattainable.

Groq is an AI hardware company advancing high-speed inference for large language models. Founded by former Google TPU architect Jonathan Ross, Groq has developed a unique architecture called the Language Processing Unit (LPU), which is designed to perform well in sequential processing tasks common in natural language processing.

The company's LPU-based inference platform offers several key advantages:

- Deterministic performance: Unlike traditional GPUs, Groq's LPUs provide consistent, predictable performance regardless of the input, which is crucial for real-time applications.

- Low latency: Groq's architecture is optimized for minimal latency, often achieving sub-100ms response times even for complex language models.

- Energy efficiency: The LPU design allows for high performance with lower power consumption compared to many GPU-based solutions.

- Scalability: Groq's infrastructure is designed to scale effortlessly, maintaining performance as demand increases.

Groq offers access to a variety of popular open-source models, including variants of LLaMA, Mixtral, and other advanced language models. Their platform is particularly well-suited for applications that require rapid response times, such as real-time chatbots, code completion tools, and interactive AI assistants.

Features:

- Extremely low latency (often sub-100ms)

- Support for popular open-source models

- Easy integration with existing workflows

Sample use case: Real-time code completion

const Groq = require('groq-sdk');

const client = new Groq({

apiKey: process.env.GROQ_API_KEY

});

async function completeCode(codeSnippet) {

try {

const chatCompletion = await client.chat.completions.create({

messages: [

{

role: "system",

content: "You are a helpful coding assistant. Complete the given code snippet."

},

{

role: "user",

content: `Complete this code:\n\n${codeSnippet}`

}

],

// Example model ID; check Groq's model list for currently supported models

model: "llama-3.3-70b-versatile",

max_tokens: 100

});

return chatCompletion.choices[0].message.content;

} catch (error) {

console.error('Error:', error);

throw error;

}

}

const code = "def fibonacci(n):\n    if n <= 1:\n        return n\n    else:";

completeCode(code)

.then(completedCode => console.log(completedCode))

.catch(error => console.error('Error:', error));

Pricing: Groq uses a token-based pricing model, with costs varying by model and by whether tokens are input or output. Groq also offers vision and speech recognition models with separate pricing structures.

For the most current and detailed pricing information, including rates for different models and capabilities, please refer to the official Groq pricing page: https://groq.com/pricing/

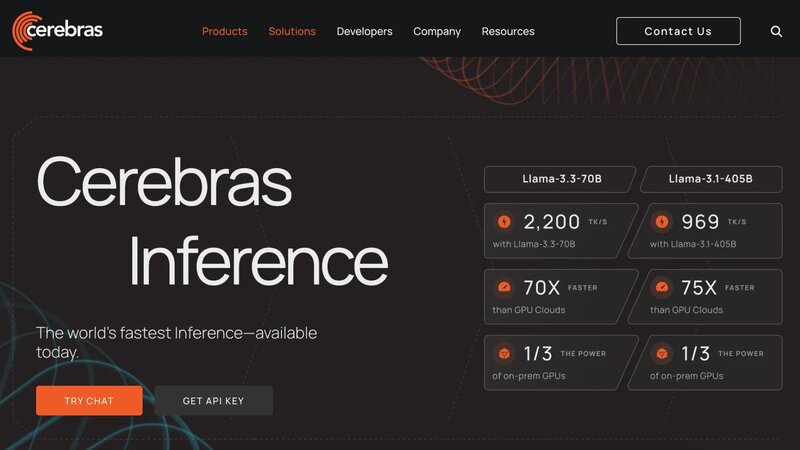

Cerebras is another AI hardware company known for developing the Wafer-Scale Engine (WSE) – the largest chip ever built. Their CS-2 system, powered by the WSE-2, is designed to accelerate AI workloads significantly.

Key aspects of Cerebras' technology:

- Large on-chip memory: The WSE-2 contains 40 GB of on-chip memory, reducing data movement and improving efficiency.

- Fast data transfer: With 20 petabytes per second of memory bandwidth and 220 petabits per second of fabric bandwidth, Cerebras systems can rapidly process complex AI models.

- Scalability: Cerebras' technology is designed to scale from single models to large, multi-cluster deployments.

- Model support: Capable of running a wide range of large language models, including open-source options.

Cerebras offers cloud-based access to their AI computing capabilities, allowing developers to leverage high-performance hardware for training and inference tasks without needing on-premises installation.

Features:

- High throughput for batch processing

- Support for custom model deployment

- Scalable for enterprise-level applications

Sample use case: Bulk text classification

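// Note: This is a conceptual example. The 'cerebras-api' package and the batchClassify method are placeholders for illustration; consult Cerebras's documentation for the actual SDK.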

const CerebrasApi = require('cerebras-api');

const client = new CerebrasApi('your-api-key');

async function classifyTexts(texts) {

try {

const responses = await client.batchClassify({

model: "cerebras-gpt-13b",

texts: texts,

categories: ["Technology", "Sports", "Politics", "Entertainment"]

});

return responses;

} catch (error) {

console.error('Error:', error);

throw error;

}

}

const articles = [

"Apple announces new iPhone model",

"Lakers win NBA championship",

"Senate passes new climate bill"

];

classifyTexts(articles)

.then(classifications => {

articles.forEach((text, index) => {

console.log(`Text: ${text}\nClassification: ${classifications[index]}\n`);

});

})

.catch(error => console.error('Error:', error));

Pricing: Cerebras typically offers custom pricing based on usage volume and specific requirements.

When selecting an API, consider these factors:

- Required features: Does the API support the specific AI capabilities you need?

- Cost: How does the pricing fit your budget and expected usage?

- Quality of documentation: Is the API well-documented and easy to integrate?

- Scalability: Can the API handle your expected growth?

- Latency requirements: Do you need real-time responses, or is some delay acceptable?

- Data privacy: How does the API provider handle data, and does it comply with relevant regulations?

- Customization options: Can you fine-tune models or adapt them to your specific use case?

- Community and support: Is there a strong developer community and reliable support from the provider?

Once you have chosen an API, a few practices help you use it well:

- Keep API keys secure: Store API keys in environment variables or a secrets vault; never hardcode them.

- Monitor usage: Set up alerts for unusual spikes in API calls to avoid unexpected costs.

- Control request frequency: Respect API rate limits and implement your own rate limiting to prevent overuse.

- Plan for errors: Always include error handling to manage API downtime or unexpected responses.

- Cache responses: For non-dynamic queries, consider caching responses to reduce API calls and improve performance.

- Stay updated: Follow the API provider's changelog and update your integration regularly to benefit from new features and improvements.

- Optimize prompts: For language models, crafting effective prompts can significantly improve results and reduce token usage.
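Two of the practices above, planning for errors and caching responses, can be sketched as small wrappers around any async API call. This is a minimal illustration rather than a production implementation: `withRetry`, `withCache`, and the stand-in `callModel` function are hypothetical names, and the retry count and delays are arbitrary defaults.

```javascript
// Retry an async function with exponential backoff.
// `fn` is any function returning a promise, such as a wrapper around an API call.
async function withRetry(fn, { retries = 3, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await fn();
    } catch (error) {
      lastError = error;
      if (attempt === retries) break;
      // With the defaults, wait 500 ms, then 1000 ms, then 2000 ms
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise(resolve => setTimeout(resolve, delay));
    }
  }
  throw lastError;
}

// Wrap an async function with an in-memory cache keyed by its argument.
// Suitable for non-dynamic queries where the same input should return the same answer.
function withCache(fn) {
  const cache = new Map();
  return async function cached(key) {
    if (!cache.has(key)) {
      cache.set(key, await fn(key));
    }
    return cache.get(key);
  };
}

// Stand-in for a real API call: fails once, then succeeds
let calls = 0;
async function callModel(prompt) {
  calls++;
  if (calls === 1) throw new Error("Simulated outage");
  return `Response to: ${prompt}`;
}

const cachedCall = withCache(prompt =>
  withRetry(() => callModel(prompt), { baseDelayMs: 10 })
);

cachedCall("Hello")
  .then(() => cachedCall("Hello")) // second call is served from the cache
  .then(result => console.log(result, `(API calls made: ${calls})`));
```

In a real integration you would likely retry only on transient failures such as rate-limit or server errors, and give cached entries an expiry time.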

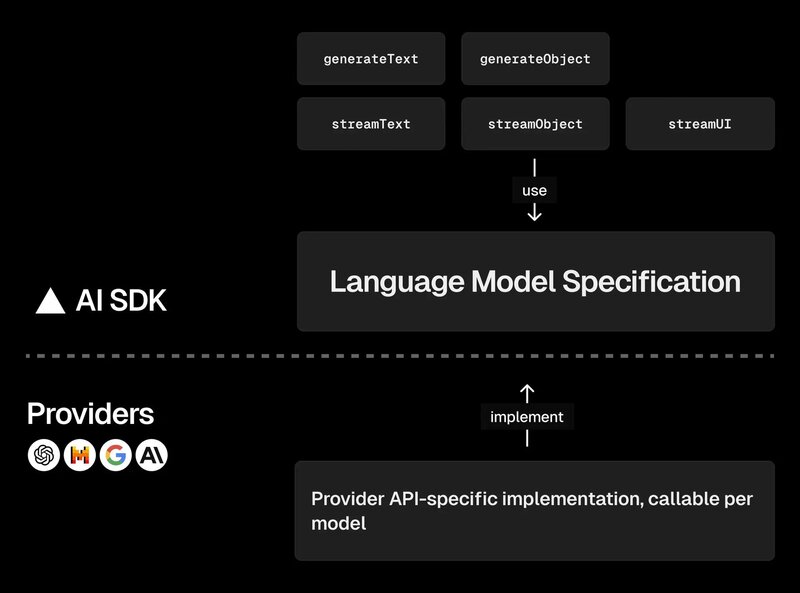

The AI SDK from Vercel offers a consistent interface for multiple AI providers. It simplifies working with various AI models and services by hiding the differences between providers.

This approach reduces development time and code complexity, allowing developers to switch between or compare different AI services easily. The SDK is particularly useful when:

- Experimenting with different providers to find the best fit

- Building applications that need to switch between providers dynamically

- Creating fallback mechanisms to handle API outages

Here's a quick example of using the AI SDK with multiple providers:

import { generateText } from 'ai';

import { openai } from '@ai-sdk/openai';

import { anthropic } from '@ai-sdk/anthropic';

import { vertex } from '@ai-sdk/google-vertex';

// Each provider package reads its credentials from the environment

// (for example OPENAI_API_KEY and ANTHROPIC_API_KEY). The model IDs below are examples.

async function generate(prompt, model) {

const { text } = await generateText({ model, prompt });

return text;

}

// Now you can easily switch between providers

const openAIResponse = await generate("Explain quantum computing", openai('gpt-4o'));

const anthropicResponse = await generate("Explain quantum computing", anthropic('claude-3-7-sonnet-20250219'));

const vertexAIResponse = await generate("Explain quantum computing", vertex('gemini-2.0-flash-001'));
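The fallback use case mentioned earlier can be sketched without any particular SDK. The example below is a minimal, provider-agnostic illustration: `generateWithFallback` and the `{ name, generate }` provider shape are assumptions made for this sketch, and the two providers are stand-ins that simulate an outage followed by a success.

```javascript
// Try each provider in order and return the first successful result.
// Each provider is { name, generate }, where `generate(prompt)` returns a
// promise for the generated text. This shape is an assumption for the sketch.
async function generateWithFallback(prompt, providers) {
  const errors = [];
  for (const provider of providers) {
    try {
      const text = await provider.generate(prompt);
      return { provider: provider.name, text };
    } catch (error) {
      // Record the failure and move on to the next provider
      errors.push(`${provider.name}: ${error.message}`);
    }
  }
  throw new Error(`All providers failed (${errors.join("; ")})`);
}

// Stand-in providers: the first simulates an outage, the second succeeds
const providers = [
  { name: "primary", generate: async () => { throw new Error("503 Service Unavailable"); } },
  { name: "backup", generate: async prompt => `Answer to: ${prompt}` },
];

generateWithFallback("Explain quantum computing", providers)
  .then(result => console.log(result.provider, "->", result.text));
// prints: backup -> Answer to: Explain quantum computing
```

In a real application each `generate` function would wrap a call to one provider's SDK, and the order of the list sets the priority.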

We've covered the major AI APIs available in 2025. Each has its own set of features, pricing models, and integration considerations.

When choosing an API, consider the specific functionality you need, latency requirements, pricing structure, integration complexity, and data privacy concerns.

That's it. The rest is up to you and your code.

For further related AI tooling articles, check out: