AI assistants have entered their intern era.

They'll ace any single task you throw at them—analyzing PDFs, generating SQL, cracking dad jokes about Kubernetes—but ask them to coordinate across Slack, Gmail, and Jira? Now you're debugging a Rube Goldberg machine of API keys.

Anthropic's Model Context Protocol (MCP) aims to standardize this mess. For users, it means connecting AI models to your Figma file and Linear tickets without needing a CS degree. For developers, it means fewer "why is the model just returning goat emojis" moments.

Let's look at MCP and its implications for the future of AI integration. First, we'll need to understand the problem it solves.

Intelligent tool use turns "that's neat, bro" demos into agentic assistants, where AI models integrate with your apps and services.

In AI terms, tools are code modules that let Large Language Models (LLMs) interact with the outside world—API wrappers that expose JSON or function parameters to the model.

We've got great tools in the ecosystem, but wiring them up makes IKEA instructions look intuitive. Why does this still feel janky in 2025?

Every API endpoint (get_unread_messages, search_messages, mark_as_read) becomes its own tool requiring detailed documentation. Models must memorize when to call tools, which endpoint to use, and each endpoint's JSON schemas, auth headers, pagination, and errors.

If a user asks, "Did Mohab email about the Q4 report?" the AI must:

- Recognize that this is an email query (not Slack/docs/etc.)

- Select the search_messages endpoint

- Format the JSON with sender:"mohab@company.com" and contains:"Q4 report"

- Handle pagination if there are many results

- Correctly parse the response
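To make those steps concrete, here is a minimal sketch of what a hand-wired integration asks for. Everything in it is hypothetical: `fake_search_messages` stands in for a real email API, and the tool description and field names (`page_token`, `next_page_token`) are assumptions for illustration, not any provider's actual schema.

```python
import json

# Hypothetical hand-written tool description that has to live in the prompt.
SEARCH_MESSAGES_TOOL = {
    "name": "search_messages",
    "description": "Search the mailbox. Results are paginated.",
    "parameters": {
        "sender": "string",
        "contains": "string",
        "page_token": "string or null",
    },
}


def fake_search_messages(sender, contains, page_token=None):
    """Stand-in for a real email API: two pages of canned results."""
    pages = {
        None: {"messages": [{"id": 1, "subject": "Q4 report draft"}],
               "next_page_token": "p2"},
        "p2": {"messages": [{"id": 2, "subject": "Re: Q4 report"}],
               "next_page_token": None},
    }
    return pages[page_token]


def find_emails(sender, contains):
    """Format the query, follow pagination, and collect parsed results."""
    results, token = [], None
    while True:
        page = fake_search_messages(sender, contains, page_token=token)
        results.extend(page["messages"])
        token = page["next_page_token"]
        if token is None:
            return results


matches = find_emails("mohab@company.com", "Q4 report")
# Characters of prompt spent describing just this one endpoint.
prompt_cost = len(json.dumps(SEARCH_MESSAGES_TOOL))
```

In deterministic code the pagination loop is written once. Without it, the model has to reproduce the same logic from a prose description every time, for every endpoint.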

The challenge is that LLMs have limited context windows. Stuff all this info into system prompts, and even if the model remembers it all, you risk overfitting—making the model a rigid recipe-follower rather than an adaptable problem-solver.

Even simple API tasks demand multiple endpoints. Want to update a CRM contact?

- Call get_contact_id

- Fetch current data with read_contact

- Update via patch_content

This isn't a problem with deterministic code; you can write the abstracted functionality once, and you're good to go. But with LLMs, each step risks hallucinated parameters or misrouted calls.
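As a rough illustration of "write the abstracted functionality once," the three calls can be wrapped in a single function. The endpoint names come from the steps above; the in-memory `CONTACTS` data, the return shapes, and the `update_contact` wrapper are assumptions made up for this sketch.

```python
# In-memory stand-in for a CRM; the records are invented for illustration.
CONTACTS = {"c-42": {"name": "Ada", "email": "ada@old.example"}}


def get_contact_id(name):
    for contact_id, record in CONTACTS.items():
        if record["name"] == name:
            return contact_id
    raise LookupError(f"no contact named {name!r}")


def read_contact(contact_id):
    return dict(CONTACTS[contact_id])


def patch_content(contact_id, changes):
    CONTACTS[contact_id].update(changes)
    return dict(CONTACTS[contact_id])


def update_contact(name, **changes):
    """Chain the three endpoints in a fixed order, sending only real changes."""
    contact_id = get_contact_id(name)
    current = read_contact(contact_id)
    delta = {k: v for k, v in changes.items() if current.get(k) != v}
    return patch_content(contact_id, delta) if delta else current


updated = update_contact("Ada", email="ada@new.example")
```

The order of calls and the parameter names are fixed in code here. An LLM driving the same three endpoints directly has to get each of them right on every run.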

APIs change. New endpoints get developed. OAuth flows update. With every service upgrade, current setups risk breaking your AI agent's "muscle memory."

Swapping Claude for Gemini Flash? Have fun rewriting all your tool descriptions. Today's integrations bake API specifics into tailored model prompts, making upgrades painful.

The web won by standardizing HTTP verbs (GET/POST/PUT/DELETE). AI tooling needs a universal protocol that lets models focus on what to do, not how to do it through endless API specifics.

Without standardization, maintaining reliable AI agents at scale isn't feasible.

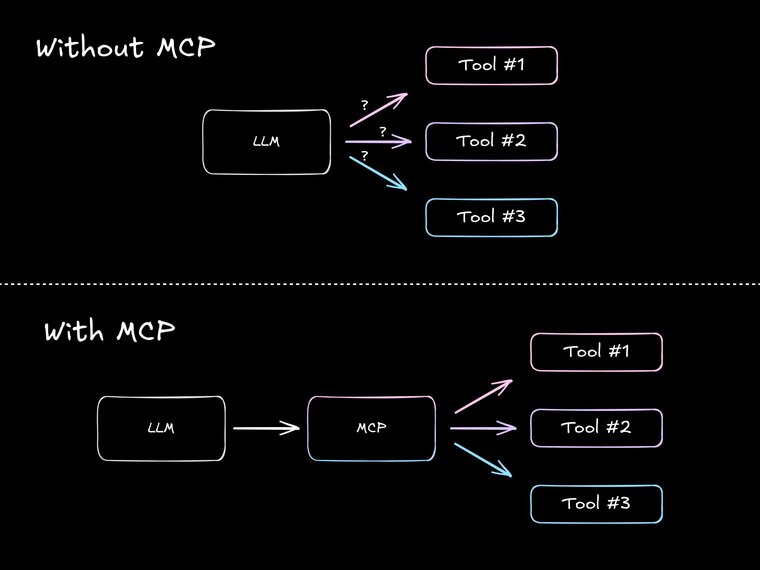

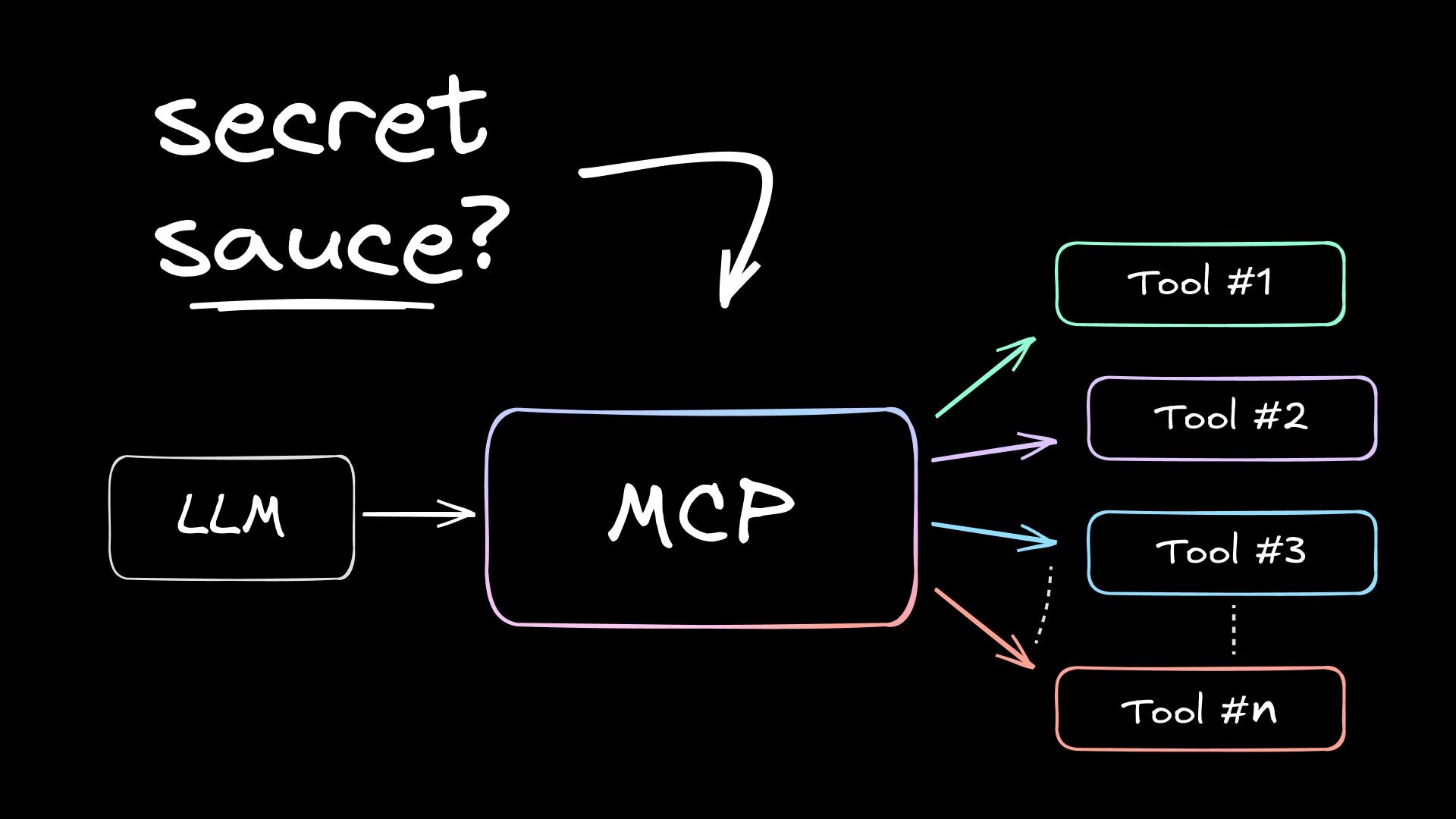

MCP is the first large-scale attempt to fix the tangled mess of tool integration. Released in November 2024 by Anthropic, the spec acts as a much-needed abstraction between AI models and external services.

Here's how it standardizes connections without limiting capabilities:

Instead of forcing AI models to juggle countless API schemas, MCP introduces a standardized "tool directory"—almost like an App Store for the model to browse.

Services describe what they can do ("send Slack message", "create Linear ticket") and how to do it (parameters, auth methods) using a consistent JSON-RPC format.

Models can dynamically discover available tools, freeing the AI to focus on when and why to use tools, instead of getting lost in syntax.
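For a sense of what that directory looks like on the wire, here is a sketch of a JSON-RPC 2.0 `tools/list` exchange written as plain Python dicts. The method name and the `name` / `description` / `inputSchema` fields follow the MCP spec as I understand it; the two tools themselves and the `tool_catalog` helper are invented for the example.

```python
import json

# What a client sends to ask a server for its tools.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# A hypothetical server reply describing two tools.
list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "send_slack_message",
                "description": "Send a message to a Slack channel.",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "channel": {"type": "string"},
                        "text": {"type": "string"},
                    },
                    "required": ["channel", "text"],
                },
            },
            {
                "name": "create_linear_ticket",
                "description": "Create a ticket in Linear.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"title": {"type": "string"}},
                    "required": ["title"],
                },
            },
        ]
    },
}


def tool_catalog(response):
    """Reduce the reply to the name -> description map a model can browse."""
    return {t["name"]: t["description"] for t in response["result"]["tools"]}


catalog = tool_catalog(list_response)
wire_bytes = json.dumps(list_request)  # what actually goes over the transport
```

Every server answers the same request with the same shape, so a client needs one piece of discovery code rather than one per service.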

Tools consume precious model context window real estate. MCP helps slim down the system prompt by:

- Standardizing parameter formats (no more "is it userID or user_id?")

- Simplifying error handling across tools

- Using versioned API descriptions that are compact and easier for models to understand through natural language

MCP is like the API boundary between your frontend and backend. It creates a clear separation between the AI model (the thinker) and the external tools (the doers).

The beauty here is that your fancy AI agent doesn't break every time Slack tweaks its API. The MCP layer acts as a buffer, translating changes so the model doesn't need constant retraining or prompt overhauls. Services can update, models can get smarter, and things (mostly) keep working.

Handing over raw API keys to a nondeterministic LLM feels bad. MCP brings in some much-needed security by standardizing authorization on an OAuth 2.1-based flow. This allows for fine-grained permission scoping (read-only, write-only) on all MCP capabilities.

These scopes act as guardrails. They prevent your brainstorming GPT from having the "bright idea" to delete your customer database. It's about enforcing the principle of least privilege, which is crucial for unpredictable systems.
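A minimal sketch of how such a guardrail could be enforced before any tool call goes out. The scope strings, the tool names, and the `authorize` helper are all hypothetical; MCP does not prescribe this table, and a real deployment would take the granted scopes from the OAuth token.

```python
# Hypothetical mapping from tool name to the scope it requires.
REQUIRED_SCOPE = {
    "search_customers": "customers:read",
    "delete_customer": "customers:write",
}


class ScopeError(PermissionError):
    """Raised when a tool call falls outside the scopes the user granted."""


def authorize(tool_name, granted_scopes):
    """Deny by default: unknown tools and missing scopes are both rejected."""
    needed = REQUIRED_SCOPE.get(tool_name)
    if needed is None or needed not in granted_scopes:
        raise ScopeError(
            f"{tool_name} is not permitted with scopes {sorted(granted_scopes)}"
        )
    return True


read_only = {"customers:read"}
allowed = authorize("search_customers", read_only)

try:
    authorize("delete_customer", read_only)
    blocked = False
except ScopeError:
    blocked = True
```

The check runs in ordinary code outside the model, so a read-only session cannot be talked into a destructive call by the model's own reasoning.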

Overall, MCP transforms tool integration from "Here are 15 tools with complex schemas to memorize" to "Here's a standardized protocol to discover and use available tools." This is a much-needed shift for democratizing reliable AI agents at scale.

So, how does MCP translate its goals into reality? Let's look at the main roles (clients, servers, and service providers) and capabilities (tools, resources, and prompts) to understand how everything interacts.

The MCP ecosystem boils down to three main players:

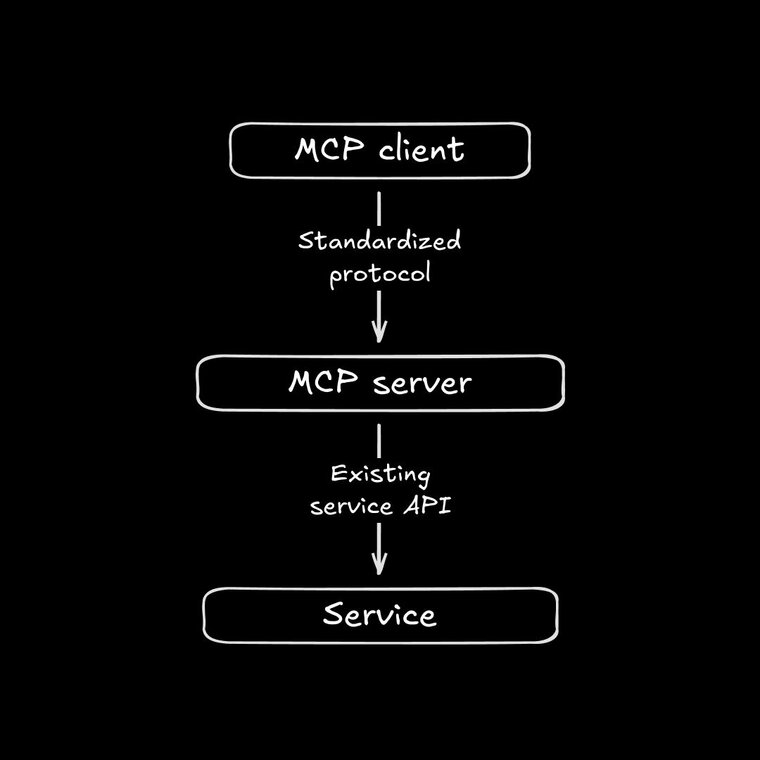

Clients are the apps you actually use, like Cursor, Windsurf, Cline, Asari, or the Claude Desktop app. They handle communication between the system’s frontends: the user, the AI model, and the MCP server.

First, they ask the MCP server to list available capabilities. Then, they show these options to the AI model along with your request (e.g., “Summarize my unread emails”). When the AI chooses to use a tool and outputs the correct parameters, the client handles the actual button-pushing with the server and brings back the results.

Clients also give the AI model a quick “MCP 101” so it knows the basic rules of engagement.
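The client's job described above can be sketched as a single turn: list tools, let the model choose, call the tool, return the result. `FakeServer` and `fake_model` are stand-ins invented for this example (no transport, no real LLM). The `tools/list` and `tools/call` method names and the `content` result shape follow the MCP spec as I understand it.

```python
class FakeServer:
    """In-memory stand-in for an MCP server; a real one sits behind a transport."""

    def handle(self, request):
        if request["method"] == "tools/list":
            result = {"tools": [{
                "name": "count_unread",
                "description": "Count unread emails.",
                "inputSchema": {"type": "object", "properties": {}},
            }]}
        elif request["method"] == "tools/call":
            result = {
                "content": [{"type": "text", "text": "3 unread emails"}],
                "isError": False,
            }
        else:
            # Standard JSON-RPC "method not found" error.
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def fake_model(user_request, tools):
    """Stand-in for the LLM: picks the first tool and supplies no arguments."""
    return {"name": tools[0]["name"], "arguments": {}}


def run_turn(server, user_request):
    listing = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    choice = fake_model(user_request, listing["result"]["tools"])
    reply = server.handle({"jsonrpc": "2.0", "id": 2,
                           "method": "tools/call", "params": choice})
    return reply["result"]["content"][0]["text"]


answer = run_turn(FakeServer(), "Summarize my unread emails")
```

The model only ever produces a tool name and arguments. Every request that actually reaches the server is built and sent by the client.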

Servers are the middlemen, the universal adapters between users/AI and the service providers. MCP servers expose capabilities through a standardized JSON-RPC interface, making them discoverable and accessible to any compatible client.

They describe their tools using both natural language and structured formats, handle auth handshakes, and ensure everyone speaks the same MCP language.

Service providers are the platforms doing the work—Slack, Notion, GitHub, your company database, etc. They don't need to change their existing APIs; it's the MCP servers' job to adapt.
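Here is a small sketch of that adapter role, under invented names. `provider_post_message` plays the part of an existing provider API with its own parameter naming, and `handle_tools_call` is a hypothetical server-side handler that translates an MCP-style `tools/call` into it.

```python
def provider_post_message(channel_id, body):
    """Stand-in for a provider's existing API, untouched by MCP."""
    return {"ok": True, "ts": "1700000000.000100",
            "channel": channel_id, "body": body}


# The server's registry: MCP-facing tool name -> adapter around the provider call.
TOOLS = {
    "send_message": lambda args: provider_post_message(args["channel"], args["text"]),
}


def handle_tools_call(params):
    """Translate an MCP-style tool call into the provider's own API and back."""
    handler = TOOLS.get(params["name"])
    if handler is None:
        return {"content": [{"type": "text",
                             "text": f"Unknown tool: {params['name']}"}],
                "isError": True}
    raw = handler(params["arguments"])
    return {"content": [{"type": "text", "text": f"Message sent at {raw['ts']}"}],
            "isError": False}


result = handle_tools_call(
    {"name": "send_message", "arguments": {"channel": "C123", "text": "hi"}}
)
```

If the provider renames `channel_id` tomorrow, only the one-line adapter in `TOOLS` changes. The tool name and arguments the model sees stay the same.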

This architecture empowers service providers and the dev community. Anyone can create an MCP server to plug any service with an API into any MCP-compatible client, instead of waiting for the big LLM providers to build every tool integration.

While native model support (like Claude's) is ideal, the protocol aims for model agnosticism, relying on the client to bridge communication with different LLMs.

We've seen that MCP helps models use tools—actions like create_task or search_emails that let AI interact with other systems. That's the bread and butter of MCP.

However, MCP servers can also expose resources and prompts.

Resources unlock continuous AI experiences. Think persistent data—files, database entries, knowledge bases—stuff the AI can read and write to across sessions. No more goldfish brain forgetting everything the moment the chat window closes.

These resources, defined via MCP with unique URIs (like file:///home/user/documents/coding-prefs.json), can be your project notes, user preferences, coding style, team wikis, you name it. Plus, multiple people (or AI assistants) could collaborate on the same resource, enabling better agentic flows.
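As a sketch of how a client might fetch one of these by URI, here is a `resources/read` round trip against an in-memory store. The method name and the `contents` result shape follow the MCP spec as I understand it. The stored preferences, the handler, and the choice of error code for an unknown URI are assumptions for illustration.

```python
import json

PREFS_URI = "file:///home/user/documents/coding-prefs.json"

# Hypothetical server-side store keyed by resource URI.
STORE = {PREFS_URI: json.dumps({"indent": 2, "quotes": "single"})}


def handle_resources_read(request):
    uri = request["params"]["uri"]
    if uri not in STORE:
        # Assumed error code (JSON-RPC "invalid params"); a real server may differ.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown resource: {uri}"}}
    contents = [{"uri": uri, "mimeType": "application/json", "text": STORE[uri]}]
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"contents": contents}}


reply = handle_resources_read({
    "jsonrpc": "2.0", "id": 7,
    "method": "resources/read", "params": {"uri": PREFS_URI},
})
prefs = json.loads(reply["result"]["contents"][0]["text"])
```

Because the URI is the only thing that varies, the same read path works for notes, wikis, or preferences without teaching the model a new schema each time.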

How is this different from existing AI memory systems like in ChatGPT or Windsurf? Again, MCP standardizes good practices to make AI models into AI agents. Resources aren't a new concept; it's just that with MCP, clients now only have to show models how to use resources once, and the models can read from and write to any of them.

All that said, resources (and prompts) are currently not implemented in the vast majority of MCP clients. Even Claude Desktop's support for them is limited: it doesn't support dynamic discovery by the model.

Tools let the AI do stuff, but what if you need to guide the AI on how to behave while doing it? That's where MCP prompts come in to handle the nuance. Think of them as instruction manuals or style guides the AI can access on demand.

MCP bakes this into the protocol because sometimes, knowing a tool's function isn't enough. Prompts can ensure the AI adopts your company's quirky brand voice—or that it follows a specific safety checklist before hitting that delete_everything button.

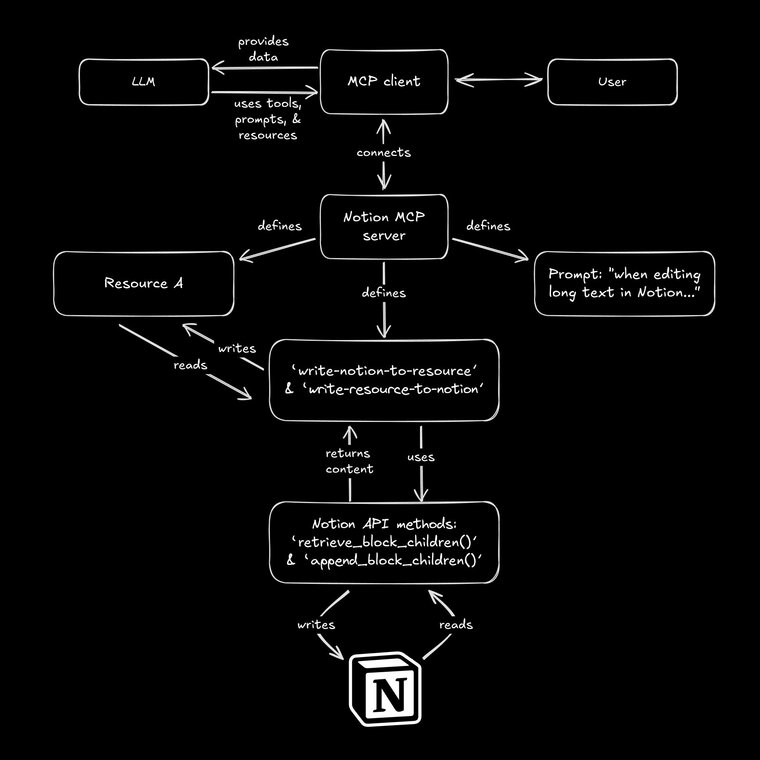

Let's look at a real-world example (albeit one that's totally not possible with the state of MCP clients today):

There are some cool Notion MCP servers, but Notion's API can be... chatty. Every "block" has its own pile of metadata. If you ask an AI model to edit a blog post directly in Notion via MCP tools, it often gets lost, struggling to separate the actual content from the API noise.

This is where prompts and resources could tag-team. An MCP prompt could guide the AI: "When editing long text in Notion, focus only on the content field. Use the write-notion-to-resource tool to dump the text into a temporary resource (Resource A), edit it there, and then use the write-resource-to-notion tool to update the original."

It's a mini-workflow, taught on the fly via a standardized mechanism, combining guidance (prompt), action (tool), and state (resource).

Prompts can be detailed because MCP saves context window space elsewhere. And just like tools and resources, prompts are dynamically discoverable. Including a library of 150 prompts in your MCP server won't overwhelm the AI, because prompts are defined like tools; the AI can be directed (by the client or its own reasoning) to reference a specific prompt when needed.
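A quick sketch of why a 150-prompt library need not flood the context: listing returns only names and short descriptions, and the full text is fetched one prompt at a time. The `prompts/list` and `prompts/get` result shapes follow the MCP spec as I understand it. The library contents and the two handler functions are made up for the example.

```python
import json

# Hypothetical library: 150 long guidance documents.
PROMPT_LIBRARY = {
    f"style-guide-{i}": "Always write in the brand voice. " * 50
    for i in range(150)
}


def list_prompts():
    """Shape of a prompts/list result: metadata only, no bodies."""
    return {"prompts": [
        {"name": name, "description": f"Guidance document {name}"}
        for name in PROMPT_LIBRARY
    ]}


def get_prompt(name):
    """Shape of a prompts/get result: the full text of one prompt."""
    return {
        "description": f"Guidance document {name}",
        "messages": [{
            "role": "user",
            "content": {"type": "text", "text": PROMPT_LIBRARY[name]},
        }],
    }


listing = list_prompts()
listing_size = len(json.dumps(listing))
full_size = sum(len(body) for body in PROMPT_LIBRARY.values())
one_prompt = get_prompt("style-guide-7")
```

In this toy setup the listing is a small fraction of the size of the full library, which is the property the paragraph above relies on.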

So how does the AI find the MCP server's cool stuff?

When a client app (like Claude Desktop or Cursor) connects to an MCP server, it asks, "What can you do?" The server responds with a list of its available tools, resources, and prompts, all defined in a standard way.

Then, the client manages the back-and-forth: presenting relevant tools to the model based on the user query, invoking the chosen tool via the server upon the model's request, and handling OAuth scopes to avoid the classic, "um, Claude just dropped our production DB."

In other words, everything rides on the client's implementation. As more clients adopt the full range of MCP capabilities, models will be able to do more with them.
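The "What can you do?" step can be sketched as follows. In the MCP spec as I understand it, the server's reply to `initialize` declares which capability families it supports, and the client then issues the matching list requests. The server info below and the `discovery_requests` helper are hypothetical.

```python
# A hypothetical initialize result from a server that offers tools and prompts
# but no resources.
initialize_result = {
    "protocolVersion": "2024-11-05",
    "capabilities": {"tools": {}, "prompts": {}},
    "serverInfo": {"name": "example-server", "version": "0.1.0"},
}

# Capability family -> the list method a client would call for it.
LIST_METHODS = {
    "tools": "tools/list",
    "resources": "resources/list",
    "prompts": "prompts/list",
}


def discovery_requests(init_result, first_id=2):
    """Build one list request per capability the server actually declared."""
    declared = init_result["capabilities"]
    methods = [m for cap, m in LIST_METHODS.items() if cap in declared]
    return [
        {"jsonrpc": "2.0", "id": first_id + offset, "method": method}
        for offset, method in enumerate(methods)
    ]


requests = discovery_requests(initialize_result)
methods_called = [r["method"] for r in requests]
```

A client that skips `resources/list` or `prompts/list` never surfaces those capabilities to the model, however well the server implements them.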

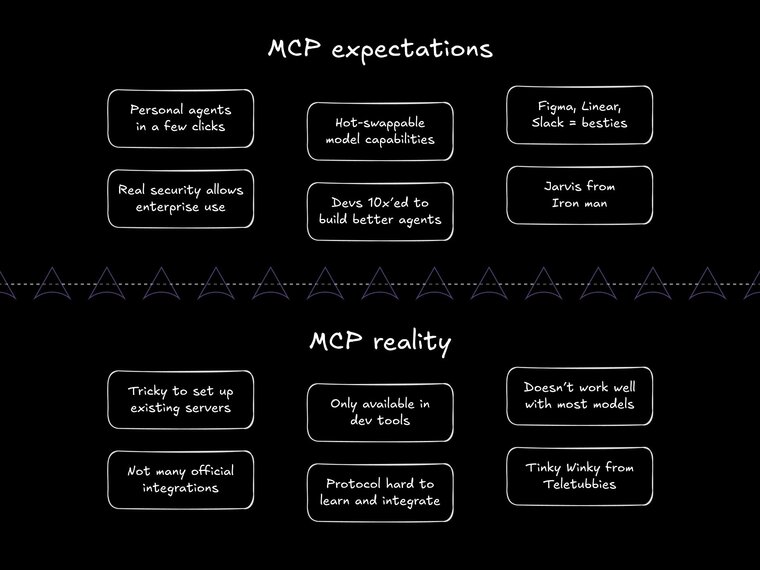

Right now, there's a lot of hype around MCP, and a decent chunk of it is merited.

Kinda like how OpenRouter lets you swap AI models without rewriting everything, MCP aims to do the same for tools, resources, and prompts. It's a standard interface, freeing you from lock-in and letting you tap into any MCP server's capabilities on the fly.

In an ideal world, this would mean that you can go write your own MCP servers (or collect your favorites) and then easily use them with any model on any provider, essentially creating personalized, useful agents from any LLM.

However, a lot remains to be done. MCP is a first attempt at standardization, and it's not widely adopted. So, knowing that, let's look at both the potential and the current blockers.

- Enterprise AI gets real (and secure): Companies can build internal MCP servers to connect their proprietary data and internal APIs to AI models safely. MCP offers a standardized path to secure, compliant AI agents doing real work inside the firewall.

- AI superpowers for everyone: Forget fiddly API scripts. Imagine your team building slick automations, connecting apps like digital LEGOs. Think AI auto-summarizing meeting notes into your project tracker or proactively drafting social posts from article drafts. Real workflow magic, no PhD required.

- Hello, MCP App Stores: To enable the above? Picture marketplaces with specialized MCP servers. Need AI for bioinformatics? Real estate analysis? Game development? There's a server (or ten) ready to plug in. This sparks an ecosystem, letting developers monetize niche expertise.

- Smarter tools: With a standard protocol, we stop reinventing the wheel for every integration. Developers can build on top of MCP, creating robust, AI-friendly tools that work with all the best LLMs. Fewer late nights debugging weird API responses and more time building cool stuff.

- Unified command center: Finally—one AI assistant that sees your calendar, inbox, tasks, and docs. It's the context-switching killer we've needed. Imagine Figma design systems with automated accessibility checks, or research papers that validate code before publishing. When tools speak the same language, with AI as a mediator, possibilities get wild.

…but we're still some way from that.

- Still needs devs: Setting up MCP isn't drag-and-drop yet. While tools like Cline are smoothing the path, you still need developer skills to get things running. We're not at the point where your grandpa can wire up Thunderbird to an LLM to automate chain mail (unless he codes, in which case, rock on).

- Lack of official servers: Right now, the MCP server landscape is mostly community-driven. Major SaaS players haven't jumped in with official, blessed servers for their APIs. This means relying on third-party builds, which may not have full feature parity. Also, there’s no verification system for MCP servers—it's the wild west in terms of what data and capabilities you connect your AI to (even though the code is open source).

- Client adoption is still in the early days: While cool apps like Cursor, Claude Desktop, and Windsurf are onboard, MCP isn't in most AI interfaces. Even in existing MCP clients, there's almost no support for resources and prompts. MCP needs wider adoption across more clients to hit critical mass, like how file upload capability is just expected now.

- The protocol itself isn't perfect: MCP's stateful design forces you to use long-running servers, which creates friction with the world of serverless, simple REST, and standard HTTP infrastructure like caches/CDNs. Plus, the docs don't yet provide a lot of conceptual examples, so it can be hard for devs to build the right mental model.

- Limited model support: While Claude handles MCP natively, and OpenAI has announced forthcoming support, other models need bridges to work with the protocol. Clients can ease this with good implementations, but nothing beats training it into the model itself.

- "Autonomy" still needs air quotes: Current MCP implementations require a lot of human oversight. They don't enable fully autonomous AI systems to reliably plan and execute complex multi-step workflows without supervision.

MCP provides the possible blueprints, but developers are just starting to construct the standardized tool ecosystem.

All that said, if you're ready to tinker with MCP, here are resources to help you get started:

- Anthropic's MCP docs, for detailed reading.

- The official MCP repo on GitHub, where you can find reference, official, and community-built servers.

- Smithery, one of the fastest ways to get started using MCP servers, thanks to its per-server instructions for existing MCP clients.

- Vercel AI SDK 4.2, which has support for building MCP clients (tools only).

- Steve's MCP getting started video (for MCP users).

I'll also be writing a guide here soon on how to build your own MCP server. At the end of the day, I'm excited about the potential, and the more devs, the better for everyone.

Today's AI tooling feels like a box of tangled cords—Claude here, GPT there, but nothing really connected. MCP proposes a way to clean the clutter: Developers write one integration instead of ten, users get AI that actually connects their apps, and services stop drowning in "Why won't this work?!" tickets.

That said, it all only works if we plug in. If services adopt MCP and models play nice, we'll get AI that feels less like a circus act and more like a Swiss Army knife. We're not there yet—and MCP might not be the final standard—but for the first time, the path isn't duct tape and prayers. It's a real shot, like Anthropic's docs say, at USB-C for AI.
