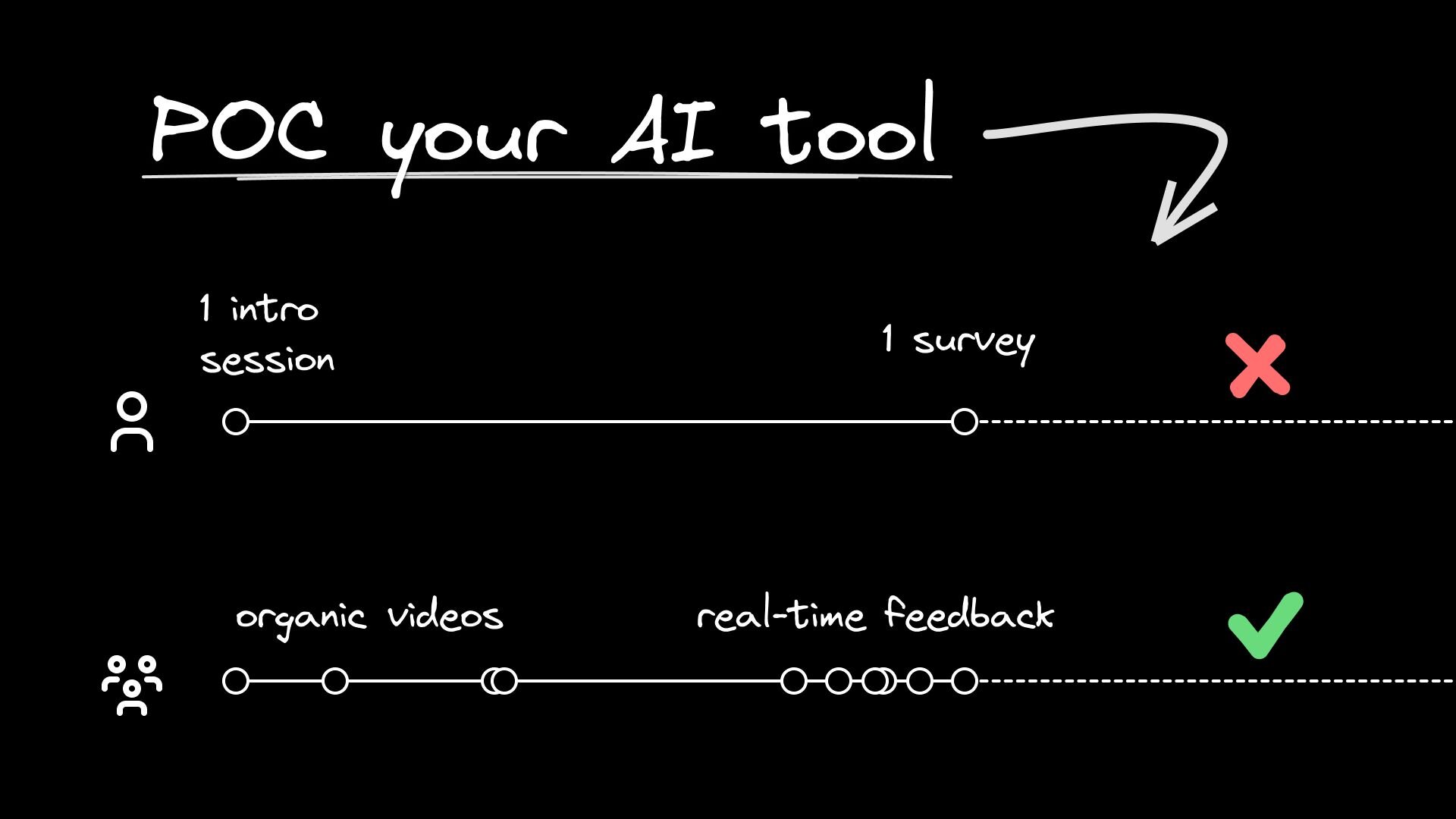

Most engineering teams evaluate new developer productivity tools the intuitive way: they start with senior engineers' opinions, rely on formal surveys and quantifiable metrics, and trust that a handful of formal training sessions will get the job done.

But does that work when you're evaluating AI coding tools?

We interviewed a few engineering leaders focused on developer productivity and compiled some surprisingly counter-intuitive tips:

You're conducting an evaluation, so the natural tendency is to tap your most experienced engineers. You trust them with your biggest problems, and you expect them to tell good code from bad, think in "systems" to optimize their workflow, and be strong voices who can evangelize to the rest of the team.

Here's the thing: engineering leaders who've gone through an AI coding tool evaluation say junior developers can often get better results out of AI coding solutions. One engineering manager put it this way:

"We shared videos comparing senior vs junior engineer approaches. Actually the junior engineer won in a lot of cases. The junior engineer won because the code was a lot simpler. The senior engineer would overthink it, but the junior engineer was just like, 'oh yeah, you just use one function and it's easy.'"

There's some evidence to support this. A study of 4,800+ developers from MIT, Princeton, and Microsoft found that junior developers saw roughly 2-3x the productivity gains from AI tools that senior engineers did:

- Junior developers: 21-40% productivity improvements

- Senior developers: 7-16% productivity improvements

If you want your proof of concept (POC) to surface the biggest opportunities, starting with junior developers might be the right move.

Week 1-2: Junior Developer Cohort

- Select 3-5 junior/mid-level developers

- Give them real tasks

- Track both quantitative metrics (tasks completed, time to completion) and qualitative feedback

Week 3-4: Senior Developer Comparison

- Have senior developers complete similar tasks

- Compare not just speed, but code quality and developer satisfaction

- Document the differences in approach and results

Week 5-6: Mixed Team Evaluation

- Form teams with both junior and senior developers

- Observe how they collaborate with AI tools

- Identify who becomes the "AI champion" naturally (it's usually not who you expect)

What to compare across cohorts:

- Task completion rates (juniors often complete more tasks successfully)

- Code simplicity (AI-generated code tends to be simpler, which juniors embrace)

- Learning velocity (how quickly each group adapts to the tool)

- Continued usage after the pilot ends (juniors have higher retention rates)
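If you log one row per assigned task during the pilot, the first comparison above is a few lines of code. Here's a minimal sketch in Python; the `TaskResult` fields, the cohort labels, and the sample rows are made up for illustration and aren't from any team we interviewed.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class TaskResult:
    developer: str
    cohort: str                 # hypothetical labels, e.g. "junior" or "senior"
    completed: bool
    hours: Optional[float]      # time to completion; None if the task wasn't finished


def summarize(results: list[TaskResult]) -> dict[str, dict[str, float]]:
    """Return task count, completion rate, and median hours per cohort."""
    summary: dict[str, dict[str, float]] = {}
    for cohort in sorted({r.cohort for r in results}):
        rows = [r for r in results if r.cohort == cohort]
        done_hours = [r.hours for r in rows if r.completed and r.hours is not None]
        summary[cohort] = {
            "tasks": len(rows),
            "completion_rate": sum(r.completed for r in rows) / len(rows),
            # Median is less sensitive than the mean to one task that dragged on.
            "median_hours": median(done_hours) if done_hours else float("nan"),
        }
    return summary


# Made-up sample data, for illustration only.
sample = [
    TaskResult("dev_a", "junior", True, 3.0),
    TaskResult("dev_b", "junior", True, 5.0),
    TaskResult("dev_c", "senior", True, 4.0),
    TaskResult("dev_d", "senior", False, None),
]
print(summarize(sample))
```

With 3-5 people per cohort the numbers will be noisy, so treat them as a prompt for conversation alongside the qualitative feedback rather than as a verdict.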

The developer productivity leaders we spoke with measured their AI coding proofs of concept by tracking accepted lines of generated code, the number of accepted PRs containing AI-generated code, and the pace of delivery for AI-enabled vs. non-AI-enabled squads.

Those are all great metrics, and they're what we've seen teams POCing Builder.io use as well. Where engineering leaders struggled was getting meaningful qualitative feedback: success stories and best practices to share, and issues surfaced during the POC that could be fixed or optimized.

As many will know, getting engineers to answer surveys can be like pulling teeth. But nearly every engineering team we spoke to has engineers living and breathing in Slack. One engineering leader shared their approach:

"I created a Slackbot where people can just /{tool name} about things they're experiencing. I can look over a 2-3 week period and see how things are going, and bring them into my business case, decide if we should address them with the vendor, or move on from the POC."

Set Up Real-Time Channels:

- Create a dedicated Slack channel for AI tool feedback

- Use simple emoji reactions for quick sentiment tracking

- Implement slash commands for immediate reporting
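To make the slash-command idea concrete, here's a rough sketch of the handler side, using only the Python standard library. Slack delivers a slash command as a form-encoded POST with fields such as `command`, `text`, `user_id`, and `channel_id`, and accepts a JSON reply. The `/aitool` command name, the in-memory `FEEDBACK_LOG`, and the reply wording are assumptions for illustration; this isn't the bot the engineering leader described.

```python
import json
from datetime import datetime, timezone
from urllib.parse import parse_qs

FEEDBACK_LOG: list[dict] = []   # hypothetical stand-in for a real datastore


def handle_slash_command(body: str) -> str:
    """Record one feedback note from a slash-command request body.

    `body` is the form-encoded payload; the return value is the JSON reply body.
    """
    form = {key: values[0] for key, values in parse_qs(body).items()}
    note = form.get("text", "").strip()
    if not note:
        reply = "Usage: /aitool <what you ran into>"
    else:
        FEEDBACK_LOG.append({
            "user_id": form.get("user_id", "unknown"),
            "channel_id": form.get("channel_id", "unknown"),
            "note": note,
            "received_at": datetime.now(timezone.utc).isoformat(),
        })
        reply = "Thanks, logged for the POC review."
    # "ephemeral" keeps the reply visible only to the person who ran the command.
    return json.dumps({"response_type": "ephemeral", "text": reply})


# Example payload with made-up IDs.
payload = "command=%2Faitool&text=Suggestions+ignore+our+lint+rules&user_id=U123&channel_id=C456"
print(handle_slash_command(payload))
```

A real deployment would sit behind an HTTP endpoint and verify Slack's request signature before trusting the payload; both are left out here to keep the sketch short.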

Weekly Temperature Checks:

- 5-minute standup questions, such as "What AI tool frustrations did you hit this week?"

- Observe actual usage during code reviews and pair programming

- Track when people stop using the tool (and ask why)
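Spotting who has quietly stopped using the tool can be as simple as comparing each developer's last recorded use against a cutoff. The sketch below assumes you can export a last-used date per developer from your tool's usage data; the 7-day window, the names, and the dates are made up.

```python
from datetime import date, timedelta


def lapsed_users(last_used: dict[str, date], today: date, idle_days: int = 7) -> list[str]:
    """Return developers whose most recent tool use is more than `idle_days` ago."""
    cutoff = today - timedelta(days=idle_days)
    return sorted(dev for dev, last in last_used.items() if last < cutoff)


# Made-up usage data, for illustration only.
last_used = {
    "dev_a": date(2025, 3, 20),
    "dev_b": date(2025, 3, 5),
    "dev_c": date(2025, 3, 12),
}
print(lapsed_users(last_used, today=date(2025, 3, 21)))  # ['dev_b', 'dev_c']
```

The list is only a starting point: the useful part is the follow-up conversation about why each person stopped.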

Contextual Feedback Collection:

- Embed feedback prompts directly in the development workflow

- Capture feedback when someone rejects AI suggestions repeatedly

- Ask specific questions: "What would make this suggestion useful?"
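One way to trigger a prompt after repeated rejections is to keep a per-developer streak counter. This is a sketch under assumptions: `RejectionTracker`, the threshold of three, and the idea that your tooling reports each accept or reject event are all hypothetical, not features of any particular product.

```python
from collections import defaultdict


class RejectionTracker:
    """Signal when a developer rejects several AI suggestions in a row."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self._streaks: dict[str, int] = defaultdict(int)

    def record(self, developer: str, accepted: bool) -> bool:
        """Record one suggestion outcome; return True when it's time to ask for feedback."""
        if accepted:
            self._streaks[developer] = 0
            return False
        self._streaks[developer] += 1
        if self._streaks[developer] >= self.threshold:
            # Reset so the prompt isn't repeated on every further rejection.
            self._streaks[developer] = 0
            return True
        return False


tracker = RejectionTracker(threshold=3)
outcomes = [False, False, True, False, False, False]  # made-up accept/reject sequence
prompts = [tracker.record("dev_a", accepted) for accepted in outcomes]
print(prompts)  # [False, False, False, False, False, True]
```

When `record` returns `True`, that's the moment to ask the specific question ("What would make this suggestion useful?") while the context is still fresh.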

Informal channels reveal insights formal surveys miss:

- Specific stories that support a business case

- Specific issues to address with the vendor

- How the tool is being leveraged and by whom

Most organizations default to formal training sessions, documentation, and structured onboarding for new tools. While these have their place, engineering leaders consistently found that organic, unpolished video content drives adoption far better than any formal training program.

One engineering manager explained their approach:

"I picked two engineers and they'd sit in code for an hour-long video. But they would just build something with AI. You play it at 2x speed and it's really interesting to watch and learn from because it's so real."

Authenticity Over Polish: Developers can spot marketing content from a mile away. When they see a respected peer genuinely struggling with prompts, making mistakes, and iterating in real time, it builds trust and sets realistic expectations.

Real Problem-Solving: Unlike demos that showcase perfect scenarios, organic videos show how the tool performs on actual work. Developers see the messy reality: when AI suggestions are great, when they're terrible, and how to adapt.

Peer Learning: When an engineer is learning to prompt effectively on camera, it gives everyone else permission to experiment, make mistakes, and share learnings. It normalizes the learning curve.

The Recording Setup:

- Pick 2-3 engineers

- Give them a real task they need to complete anyway

- Record 1-2 hour sessions of actual work

- Don't script it; let them discover and struggle naturally

Content Strategy:

- Share clips at 1.2x speed for easier consumption

- Include both successes and failures

- Show different prompting techniques organically discovered

- Capture the "aha moments" when things click

Distribution and Follow-up:

- Share in team channels, not formal training sessions

- Include context: "Here's Sarah building the user service refactor with AI"

- Follow up with prompting tips that emerged naturally

- Create a library of real use cases over time

Unexpected Champions: Often the engineers who become AI evangelists aren't your most senior people. They're the ones who approach problems differently and aren't constrained by "the way we've always done things."

Natural Prompting Evolution: You'll see prompting techniques evolve organically. Junior engineers might discover simpler approaches that work better than the complex prompts senior engineers craft.

Realistic Expectations: Teams develop appropriate expectations about when AI helps and when it doesn't, leading to more sustainable adoption.

The most successful AI tool evaluations we observed treated the introduction of AI as a developer experience initiative, not just a productivity play. This means:

Focus on Developer Satisfaction: Happy developers who feel empowered by tools are more productive than developers forced to use tools that don't fit their workflow.

Measure Adoption, Not Just Usage: Usage metrics can be gamed. Adoption means developers choose to use the tool when they don't have to.

Iterate Based on Feedback: The best implementations evolved based on how developers actually used the tools, not how the vendor intended them to be used.

Think Long-Term: The goal isn't just to prove ROI for this quarter, but to build sustainable practices that improve over time.

One engineering leader summed it up perfectly:

"I don't want developers going off to some separate tool and being distracted. I want to bring the AI to them, where they already are; in their IDE, in Slack, in their workflow."

The companies that got this right didn't just evaluate AI tools. They used the evaluation process to better understand their developers' needs and improve their overall development experience. The AI tool became a catalyst for broader improvements in how teams work together.

While you're evaluating AI coding tools, you might want to check out Fusion: it's an AI tool that gets the developer experience memo. Fusion plugs directly into your existing codebase. Developers, product managers and designers use it to generate on-brand web features without massive SDLC timelines.

Changes ship as pull requests to your codebase, so engineers don't need to leave their preferred git-based flow: they can quickly review a PR, approve it and get to the next feature.

Try Fusion today to see how the fastest moving teams are shipping features with 80% fewer resources.